20+ AI Business Trends For 2025!

The business trends that are shaping the AI industry in 2025!

In the next couple of decades, we will be able to do things that would have seemed like magic to our grandparents.

In his most recent piece, “The Intelligence Age,” OpenAI’s co-founder Sam Altman emphasized this passage!

Whether you like it or not, I believe this will become a fundamental truth we can't ignore, and in this research, I'll show you why.

Indeed, the AI industry has been hectic since we got the famous "ChatGPT moment" on November 30, 2022!

Since then, there has been an immense amount of progress and an even more impressive amount of noise.

When ChatGPT came out, it was obvious that the whole business landscape had changed. As someone who had built a small yet successful digital business, I knew that everything was about to change.

So, starting that day, I thought about how the field would progress. As I played with all the AI tools out there, launched several AI apps, added value to my community, and experimented as much as possible within the AI industry as an executive and entrepreneur, I developed my own internal compass.

This internal compass has matured into what I like to call "AI Convergence."

It describes how the AI industry will develop over the next 10-30 years, taking a long-term view of the field by looking back at how the Internet evolved from the commercial Internet to the Web.

With that compass in mind, let's explore some critical trends that will take shape in 2025.

In the AI industry, we need a two-sided approach:

On the one hand, look at the very short term to see which emerging trends enable us to take a step forward.

On the other hand, keep a very long-term perspective to avoid getting caught up in the noise of all the short-term events affecting the AI industry.

This is my approach, and this is what the research below is about.

For the trends below, you can consult Business Trends AI, one of the tools I've launched for the community.

There, you can keep track of all the trends mentioned below.

Let's start with some trends to help you understand where we are headed in 2025, the roadblocks ahead, and the immense possibilities.

AI scaling

AI Scaling refers to expanding the capabilities and deployment of Artificial Intelligence systems from initial proofs of concept or small-scale implementations to widespread, enterprise-level applications.

At a foundational level, it involves increasing computational power, data volume, and the ability of AI models to handle larger datasets and more complex tasks, ensuring efficient and reliable operation across the organization.

As we end 2024, there is a massive debate about whether AI can keep up with the current level of innovation or whether we have run out of ways to scale these models.

Countless publications have reported a massive slowdown in progress from these foundational models (like OpenAI's GPT models or Anthropic's Claude models), emphasizing how these AI labs might be in deep trouble.

Honestly, while this concern does sell many subscriptions for these publications, it's highly unfounded.

Sam Altman highlighted how "there is no wall" to point out something critical in the AI space right now...

To recap, there has been a lot of buzz in recent weeks about the slowing down of AI progress.

While that is attention-grabbing, I don’t think these concerns are founded, at least for now.

Why?

We still have many angles from which these AI models can be improved.

Indeed, outside the foundational architecture (transformer), these models have already been massively improved in the last two years with new architectures on top (e.g., RAG) and post-training techniques (like Chain of Thought Reasoning).

We’re only at the beginning, so we’ll see many improvements from a commercial standpoint.

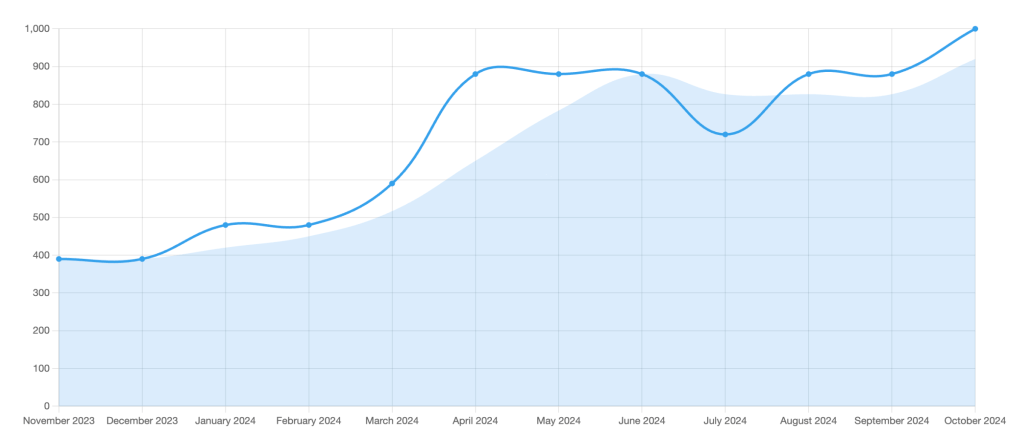

This is a reminder that, as of November 2024, according to SimilarWeb data, ChatGPT has become the 8th largest website in the world, passing sites like Yahoo and Reddit and moving toward Wikipedia!

Indeed, OpenAI’s CEO Sam Altman reassured observers that AI isn’t hitting a “performance wall,” countering concerns over diminishing returns in model advancements.

Of course, Sam Altman has a conflict of interest because he has a massive amount of money at stake with OpenAI. So why trust him?

Despite reports of only moderate gains in upcoming models, Altman and others remain optimistic. AI labs are exploring new techniques like synthetic data to push AI’s limits further.

In other words, having looked at this industry for years, I see four angles from which to look at the problem:

Pre-training

At a pre-training level, there are three primary levers:

Data,

Computing power,

And algorithms.

That's pretty much it.

I can go much deeper into this part of the issue here, but the main point is that we'll eventually figure out what the wall is for the current AI architecture (the Transformer).

When, by mixing and re-mixing data, computing, and algorithms, we figure out there is no further progress, then we'll know that a structural change (an architectural one) will be needed. Until that point, it's very hard to know.

Also, we still have many levers to pull on all three sides.

For data, synthetic data (generated by simulations), curated data (generated by humans), and hybrid data (a mixture of the two) all have room to progress.

For computing, we're only at the beginning of scaling chip infrastructure to see how far we can go.

For algorithms, we still need to explore all the possible ways to improve the underlying pre-training techniques.

Inference Architectures

On the inference side, once a model has been pre-trained, different architectures can be built on top of it.

Take the case of Retrieval-Augmented Generation (RAG):

Retrieval-augmented generation (RAG) is a technique that enhances the accuracy and reliability of generative AI models by referencing external knowledge sources.

It involves linking large language models (LLMs) to a specified set of documents, allowing the models to augment their responses with current and domain-specific information not included in their original training data.

This method improves the timeliness, context, and accuracy of AI outputs by incorporating real-time, verifiable facts from external resources.
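To make the idea concrete, here is a minimal sketch of the retrieve-then-augment pattern. It is a toy, not how any production RAG system is built: the documents are hypothetical, retrieval uses simple word-count similarity instead of learned embeddings, and the function names (`retrieve`, `build_prompt`) are my own. The final prompt is what would be handed to an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical document set; in practice this would be a company's own files.
DOCUMENTS = [
    "Our refund policy allows returns within 30 days of purchase.",
    "Support is available by email from Monday to Friday.",
    "The premium plan includes priority support and extra storage.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents, top_k=1):
    """Augment the question with retrieved context before it goes to an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
    )

print(build_prompt("What is the refund policy?", DOCUMENTS))
```

The point of the design is that the model's knowledge is constrained to a selected set of documents at question time, rather than relying only on what it absorbed during training.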

This is another angle that has quickly developed in the last two years to enable LLMs to become more specialized, accurate, and safe (as they are enabled selectively on a set of documents).

In short, we're at the stage where we have gone from generalist AIs, like ChatGPT, to specialized generalist AI systems, which, while still generalists, can be pretty effective by becoming verticalized (e.g., AI lawyer, AI accountant, AI analyst, and so forth).

RAG itself is an industry already worth billions...

Post-Training

A lot has been achieved in the last two years, even at the post-training level.

This is a reminder that Chain-of-Thought Prompting (CoT), the paper that spurred the current Agentic AI wave, only came out in early 2022.

Like the Transformer paper (Attention Is All You Need), which came out in 2017 and led to ChatGPT, CoT was also an effort of the Google Research and Brain Teams!

I'll touch more on chain-of-thought later in this research, but for now, it's worth remembering that a lot of it is about post-training techniques that enabled the rise of something like GPT-4o:

GPT-4o refers to a specific version of the OpenAI GPT model, a GPT-4 model variant known for its advanced capabilities in generating human-like text responses and handling complex tasks.

Performance Evaluation

As Sam Altman has highlighted, there are no walls yet on the scaling side. The question remains whether we're hitting a wall in measuring/evaluating the performance of the models (on the evaluation side) rather than their capabilities!

What does it mean?

This progress might move so quickly, thanks to further scaling and other post-training optimization techniques, that we're not hitting a wall from a scaling perspective but rather a wall from a performance evaluation perspective!

And if that's the case, it's a severe issue: if you can't assess the performance of a new model, releasing it becomes a concern.

I'll touch on this point further down here...

AI Benchmarking

AI Benchmarking is about evaluating and comparing the performance of different AI models or systems using standardized metrics and tests.

This practice helps organizations and researchers determine the most effective and efficient approaches, refine AI algorithms, and identify areas for improvement, thereby driving innovation and advancement in artificial intelligence.
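As a rough illustration of what "standardized metrics and tests" means in practice, here is a minimal sketch that scores two models on the same fixed test set using exact-match accuracy. Everything in it is an assumption for illustration: the four-question benchmark is made up, and `model_a` and `model_b` are stand-in functions, not real AI models.

```python
import operator

# Hypothetical benchmark: (question, expected answer) pairs shared by all models.
BENCHMARK = [("2 + 2", "4"), ("3 * 3", "9"), ("10 - 7", "3"), ("8 / 2", "4")]

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.floordiv}

def model_a(question):
    """Stand-in for a weak model: it always answers "4"."""
    return "4"

def model_b(question):
    """Stand-in for a stronger model: it actually computes the arithmetic."""
    left, op, right = question.split()
    return str(OPS[op](int(left), int(right)))

def exact_match_accuracy(model, benchmark):
    """Share of benchmark questions where the model's answer equals the expected one."""
    correct = sum(1 for question, expected in benchmark if model(question).strip() == expected)
    return correct / len(benchmark)

for name, model in [("model_a", model_a), ("model_b", model_b)]:
    print(name, exact_match_accuracy(model, BENCHMARK))
```

Note that once a model answers every question correctly, this benchmark has no headroom left: any further improvement in the model is invisible to the test.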

As I explained above, a significant problem to solve right now is not just on the scaling side of AI but instead on the benchmarking side to ensure we have solid benchmarks to evaluate the performance improvements of these AI models.

In short, we may be at a turning point where these AI models keep improving fast, but we don't have proper benchmarking to evaluate these improvements.

This phenomenon might be called an "evaluation gap" or a "moving target problem," as benchmarks must continuously evolve to keep pace with advancing AI capabilities.

That's the challenge of evaluating and improving models when the goals, benchmarks, or criteria used for assessment constantly evolve or become obsolete due to rapid progress.

In essence, as AI models become more capable, the standards by which we measure their performance must also advance, creating a "moving target."

That's where we are right now, I believe. AI models are advancing so rapidly that evaluation benchmarks often become outdated before they can effectively measure new capabilities.

This creates a gap where models excel on existing tests but lack comprehensive assessment for generalization, safety, or emergent behaviors, challenging our ability to track and guide progress reliably.

AI Benchmarking alone might turn into a massive industry in the coming decade!

AI Spending

One key takeaway from the end of 2024 is that existing Big Tech players could not keep up with AI demand!

That's an interesting issue, as it points to a complete lack of infrastructure to serve even the pent-up demand in the AI space.

Sure, in the short term, it is due to the massive buzz around AI. However, this paradigm is entirely different, calling for a whole new infrastructure.

There is much more to it, and I'll touch on it in the coming paragraphs. But for now, keep this short-term number in mind:

The Existing Cloud Infrastructure Is The Initial Backbone for AI, But It's Not Enough!

The major Big Tech players are spending $200 billion in 2024 alone to ramp up the infrastructure and meet increasing AI demand!

The Cloud Wars are on, as AI demand outpaces supply.

In the last quarter, Google Cloud won the race in terms of relative growth, surging 35% in Q3, outpacing AWS and Azure as AI demand drives growth.

Amazon retains profit leadership, while Microsoft invests to boost AI capacity.

As custom AI chips and high demand shape fierce competition, Oracle partners with rivals, expanding its database reach across major cloud platforms.

Still, a reminder that Amazon's AWS (this year reaching $100 billion in revenue) remains the market leader:

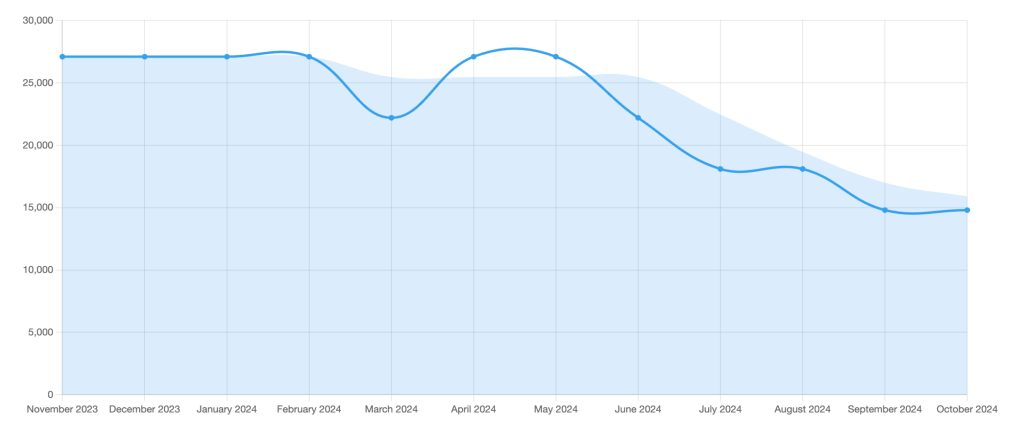

• Amazon Web Services (AWS): 31% market share.

• Microsoft Azure: 25% market share.

• Google Cloud: 11% market share.

These figures highlight AWS and Azure’s lead in the cloud market, with Google Cloud growing rapidly but still holding a smaller share.

In the meantime, other players like Oracle, who are relatively smaller, are also coming in aggressively!

Here is what Q3 of 2024 clearly showed:

Google Cloud’s Growth: Google Cloud led with 35% growth year-over-year in Q3, outpacing Amazon and Microsoft. This growth is seen as a key shift for Alphabet, diversifying its revenue beyond advertising.

AWS Remains Profitable: Amazon Web Services (AWS) maintains its leadership in revenue, growing 19% to $27.45 billion with a strong 38% operating margin. The company benefits from cost efficiencies and extended server life.

Microsoft and AI Demand: Microsoft reported 33% growth in Azure, fueled by AI services and investments in OpenAI. Due to high demand, capacity is limited, but AI infrastructure investment aims to expand availability by early 2025.

Supply Constraints: Both AWS and Microsoft are constrained by AI chip supply, with Amazon relying partly on its custom chips, like Trainium 2, and Google advancing its custom TPUs.

Oracle’s Position: Although smaller, Oracle saw 45% growth in cloud infrastructure and has partnered with Amazon, Microsoft, and Google to expand its database reach.

AI Competition and Innovation: Each cloud giant is developing proprietary AI chips and expanding AI capacity to meet demand, underscoring intensifying competition in the AI-driven cloud market.
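To put the AWS numbers above in perspective, here is a quick back-of-the-envelope calculation. It uses only the figures quoted in this section (19% growth to $27.45 billion, 38% operating margin); the derived values are my own arithmetic, not reported figures, and the function names are just for illustration.

```python
def prior_period_revenue(current_revenue, growth_rate):
    """Revenue one year earlier, implied by the current revenue and its growth rate."""
    return current_revenue / (1 + growth_rate)

def operating_income(revenue, operating_margin):
    """Operating income implied by revenue and operating margin."""
    return revenue * operating_margin

aws_revenue = 27.45  # billions of USD, Q3 2024, as quoted above
aws_growth = 0.19    # year-over-year growth, as quoted above
aws_margin = 0.38    # operating margin, as quoted above

print(round(prior_period_revenue(aws_revenue, aws_growth), 2))  # about 23.07
print(round(operating_income(aws_revenue, aws_margin), 2))      # about 10.43
```

In other words, the quoted figures imply that AWS added a bit over $4 billion in quarterly revenue in a year, while keeping more than $10 billion of the quarter as operating income.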

Let me show you why AI Data Centers will be critical...

AI data centers

An AI data center is a specialized facility designed to accommodate the intense computational demands of artificial intelligence (AI) workloads.

These data centers support high-density deployments, innovative cooling solutions, advanced networking infrastructure, and modern data center management tools to handle AI operations' significant power and storage requirements efficiently.

As Bloomberg reported, 2024 was the year of the “data center gold rush.” Big Tech players are collectively investing as much as $200 billion in this fiscal year alone to keep up with AI demand!

Indeed, AI’s explosive demand has ignited unprecedented capital spending, with Amazon, Microsoft, Meta, and Alphabet set to invest over $200 billion in 2024.

Racing to build data centers and secure high-end chips, these tech giants see AI as a “once-in-a-lifetime” opportunity that will reshape their businesses and future revenue potential.

And all Big Tech players with an existing cloud infrastructure are pretty clear about this opportunity:

Record AI Spending: Amazon, Microsoft, Meta, and Alphabet are set to exceed $200 billion in AI investments this year, aiming to secure scarce chips and build extensive data centers.

Long-Term Opportunity: Amazon CEO Andy Jassy described AI as a “once-in-a-lifetime” chance, driving Amazon’s projected $75 billion capex in 2024.

Capacity Challenges: Microsoft’s cloud growth hit supply bottlenecks, with data center constraints impacting near-term cloud revenue.

Meta’s AI Ambitions: Meta CEO Mark Zuckerberg committed to AI and AR investments despite $4.4 billion in operating losses in its Reality Labs.

Wall Street’s Mixed Reaction: Despite optimism for long-term AI returns, some tech stocks wavered due to high costs, while Amazon and Alphabet surged on strong cloud earnings.

Intensifying Competition: Companies are betting on AI to outpace traditional digital ad and software revenue, making AI-driven infrastructure a strategic necessity amidst escalating demand.

But why do you need an AI data center in the first place?

Well, while the current data center infrastructure was adequate to serve demand across the web, AI data centers are specialized facilities designed to meet the unique needs of artificial intelligence workloads, distinguishing them from traditional data centers in several key aspects:

Hardware Requirements: AI tasks like machine learning and deep learning require high-performance computing resources. Consequently, AI data centers are equipped with specialized hardware like Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) to handle intensive computations efficiently.

Power Density: The advanced hardware in AI data centers leads to significantly higher power consumption per rack than traditional data centers. This increased power density necessitates robust power delivery systems to ensure consistent and reliable operation.

Cooling Systems: The elevated power usage generates substantial heat, requiring advanced cooling solutions. AI data centers often implement liquid cooling systems, which are more effective than traditional air cooling methods in managing the thermal output of high-density equipment.

Network Infrastructure: AI workloads involve processing large datasets, demanding high-bandwidth, low-latency networking to facilitate rapid data transfer between storage and compute resources. This necessitates a more robust and efficient network infrastructure than that of traditional data centers.

Scalability and Flexibility: AI applications often require dynamic scaling to accommodate varying computational loads. AI data centers are designed with modular architectures that allow for flexible resource scaling, ensuring they can adapt to the evolving needs of AI workloads.

Adapting the existing data centers at Amazon AWS, Microsoft Azure, Google Cloud, and many other providers might require a trillion-dollar investment in the coming decade!

Indeed, AI demand further pushes Big Tech to ramp up its data center infrastructure, making energy demand massively unsustainable in the short term. So, what alternatives are tech players looking into?

In the short term, as these big tech players build up the long-term infrastructure, they are already exploring a few potential energy alternatives to power these AI Data Centers.

Big Tech is racing to power AI’s energy demands sustainably, and three major avenues have been identified:

Nuclear energy for stable power,

Liquid cooling for efficient data centers,

And quantum computing for future breakthroughs.

Will these be enough? Probably not. But this is where we are:

Nuclear Energy Investments

• Advantages: It provides consistent, large-scale power, which is critical for AI data centers that require stable, round-the-clock energy.

• Drawbacks: High initial costs, regulatory hurdles, and long-term environmental concerns associated with nuclear waste.

• Timeline: Major deals by Microsoft, Google, and Amazon are already in progress, with nuclear energy expected to support AI operations soon.

Liquid Cooling Technology

• Advantages: Increases energy efficiency by effectively reducing server temperatures, allowing data centers to handle higher power densities.

• Drawbacks: Initial installation costs are high, and maintaining water systems in data centers requires additional resources and planning.

• Timeline: Already being implemented, Schneider Electric’s recent acquisition of Motivair Corp to expand liquid cooling capabilities suggests broader adoption in the coming years.

Quantum Computing

• Advantages: Promises vastly increased processing efficiency, allowing complex AI computations with less power and potentially lowering the environmental footprint.

• Drawbacks: Quantum technology is still in its early stages, and practical, scalable applications for commercial use are likely years away.

• Timeline: According to Quantinuum CEO Raj Hazra, a commercial shift combining high-performance computing, AI, and quantum could emerge within three to five years.

These massive efforts to create a whole new infrastructure for AI might drive massive energy waste in the short term and impressive innovation in the energy sector to come up with alternatives to power up the pent-up AI demand.

And guess what? In the long term, this might also prompt an energy revolution that will provide cheap energy sources for everything else.

As OpenAI's co-founder, Sam Altman, highlighted in his latest piece entitled "The Intelligence Age:"

If we want to put AI into the hands of as many people as possible, we need to drive down the cost of compute and make it abundant (which requires lots of energy and chips). If we don’t build enough infrastructure, AI will be a very limited resource that wars get fought over and that becomes mostly a tool for rich people.

Before I discuss the key trends shaping the next 2-3 years in AI, I want to touch on two other significant trends: multimodal AI and Chain-of-Thought.

Multimodal AI

Multimodal AI refers to artificial intelligence systems that integrate and process multiple types of data input, including text, images, audio, and video.

This capability allows the system to generate more accurate and contextually aware outputs by combining diverse data modalities, making it more versatile and effective in various applications.

Multimodality started as a trend in 2023 and consolidated in 2024. In short, all Generative AI systems must combine multimodal elements to achieve a level of usefulness for the next phase of scale.

Chain-of-Thought Prompting

As explained, Chain-of-thought (CoT) Prompting is a technique used to enhance the reasoning capabilities of large language models (LLMs) by requiring them to break down complex problems into a series of logical, intermediate steps.

This approach mimics human reasoning by guiding the model through problems step-by-step, leading to more accurate and interpretable results.
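Here is a minimal sketch of the difference between a standard prompt and a chain-of-thought prompt. The worked example is hypothetical (it is mine, not taken from the paper), and the function names are illustrative. The resulting string is what you would send to an LLM; no model is called here.

```python
def standard_prompt(question):
    """A plain prompt: the model is asked for the answer directly."""
    return f"Q: {question}\nA:"

def chain_of_thought_prompt(question, worked_examples):
    """Prefix the question with worked examples that spell out intermediate steps.

    worked_examples is a list of (question, list_of_steps, final_answer) tuples.
    """
    parts = []
    for example_question, steps, answer in worked_examples:
        reasoning = " ".join(steps)
        parts.append(f"Q: {example_question}\nA: {reasoning} The answer is {answer}.")
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)

# Hypothetical worked example showing the step-by-step style we want imitated.
EXAMPLES = [
    (
        "A shop has 4 boxes with 3 pens each and sells 2 pens. How many pens are left?",
        ["4 boxes of 3 pens is 12 pens.", "12 - 2 = 10."],
        "10",
    ),
]

print(chain_of_thought_prompt(
    "A van carries 5 crates of 6 bottles and 4 bottles break. How many are intact?",
    EXAMPLES,
))
```

The only thing that changes is the prompt: by showing the intermediate steps in the example, the model is nudged to write out its own steps before committing to an answer.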

The combination of multimodality and chain of thought has also propelled us toward what's defined as Agentic AI.

Agentic AI

Agentic AI refers to artificial intelligence systems capable of autonomous action and decision-making.

These systems, often called AI agents, can pursue goals independently, make decisions, handle complex situations, and adapt to changing environments without direct human intervention.

They leverage advanced techniques such as reinforcement learning and evolutionary algorithms to optimize their behavior and achieve specific objectives set by their human creators.

Keep in mind that there is no single definition of Agentic AI.

Agentic AI in academic settings might be more about "agency" or the ability of these AI agents to make complex decisions independently.

In business, for the next couple of years, agentic AI will primarily concern specific business outcomes and tasks that these agents can achieve in a very constrained environment to ensure their accuracy, reliability, and security are a priority.

What made Agentic AI something else compared to the initial wave of AI?

For the five years since the launch of GPT-2 in 2019, the Gen AI paradigm has been based on prompting.

In short, the LLM completed any task based on a given instruction. The quality of the output highly depended upon the quality of the input (prompt).

However, in the last few weeks, we’ve finally seen the rise of Agentic AI, a new type of artificial intelligence that can solve complex problems independently using advanced reasoning and planning.

Unlike regular AI, which responds to single requests, agentic AI can handle multi-step tasks like improving supply chains, finding cybersecurity risks, or helping doctors with paperwork.

It works by gathering data, devising solutions, carrying out tasks, and learning from the results to improve over time.

What are the critical features of Agentic AI vs. Prompting?

• Autonomous Problem-Solving: Agentic AI uses sophisticated reasoning and iterative planning to solve complex, multi-step tasks independently.

• Four-Step Process: Perceive (gather data), Reason (generate solutions), Act (execute tasks via APIs), and Learn (continuously improve through feedback).

• Enhanced Productivity: Automates routine tasks, allowing professionals to focus on more complex challenges, improving efficiency.

• Data Integration: Uses techniques like Retrieval-Augmented Generation (RAG) to access a wide range of data for accurate outputs and continuous improvement.
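The four-step process above can be sketched as a simple loop. This is a toy under loud assumptions: the "agent" is a hypothetical stock-reordering bot with a hard-coded rule where a real system would use an LLM for the reasoning step, the `act` step mutates a dictionary instead of calling a real API, and the `learn` step only logs outcomes rather than improving anything.

```python
from dataclasses import dataclass, field

@dataclass
class ReorderAgent:
    """Hypothetical agent that keeps a product's stock at a target level."""
    target_stock: int
    memory: list = field(default_factory=list)  # outcomes recorded by learn()

    def perceive(self, environment):
        """Perceive: gather data from the environment."""
        return environment["stock"]

    def reason(self, stock):
        """Reason: decide what to do (a fixed rule stands in for an LLM here)."""
        shortfall = self.target_stock - stock
        if shortfall > 0:
            return {"action": "order", "quantity": shortfall}
        return {"action": "wait", "quantity": 0}

    def act(self, plan, environment):
        """Act: execute the plan (a dictionary update stands in for an API call)."""
        environment["stock"] += plan["quantity"]
        return environment["stock"]

    def learn(self, plan, result):
        """Learn: record feedback; a real agent would use it to adjust behavior."""
        self.memory.append((plan["action"], result))

    def step(self, environment):
        """Run one full perceive, reason, act, learn cycle."""
        stock = self.perceive(environment)
        plan = self.reason(stock)
        result = self.act(plan, environment)
        self.learn(plan, result)
        return plan

warehouse = {"stock": 3}
agent = ReorderAgent(target_stock=10)
print(agent.step(warehouse))  # first cycle orders the shortfall
print(agent.step(warehouse))  # second cycle finds nothing to do
```

The contrast with prompting is that nobody tells the agent what to do at each step: it is given a goal (the target stock) and works out the action from what it observes.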

When did this Agentic AI wave start?

It all started two years back.

Indeed, the "Chain-of-Thought Prompting" (CoT) paper, which spurred the current Agentic AI wave, was only published in early 2022 by researchers from Google's Research and Brain Teams. It has been pivotal in advancing the capabilities of large language models (LLMs).

This technique enhances LLMs' reasoning abilities by guiding them to generate intermediate steps that mirror human problem-solving processes. Its key contributions:

Enhanced Reasoning Capabilities: CoT prompting enables LLMs to tackle complex tasks by breaking them down into sequential steps, improving performance in areas like arithmetic, commonsense reasoning, and symbolic manipulation.

Emergent Abilities with Scale: The research demonstrated that as LLMs increase in size, their capacity for chain-of-thought reasoning naturally emerges, allowing them to handle more intricate problems effectively.

Influence on Agentic AI Development: The CoT paper has inspired the development of agentic AI systems capable of more autonomous and sophisticated decision-making by showcasing how LLMs can perform complex reasoning through structured prompting.

This progression mirrors the impact of the 2017 "Attention Is All You Need" paper, which introduced the transformer architecture and laid the groundwork for models like ChatGPT.

Both papers underscore the significant role of Google's research teams in propelling advancements in AI, particularly in enhancing the reasoning and comprehension abilities of language models.

And yet, guess what?

Most of these advances ended up pushing OpenAI forward from a commercial application standpoint. OpenAI's GPT-4o, released in May 2024, incorporates principles from CoT to improve its reasoning capabilities.

By structuring prompts to encourage step-by-step thinking, GPT-4o can more effectively handle complex tasks such as mathematical problem-solving and logical reasoning.

This approach allows the model to break down intricate problems into manageable steps, leading to more accurate and coherent responses.

The race has heated up so much that the mere rumors about OpenAI Orion, a next-generation AI model developed by OpenAI, became a massive hit!

OpenAI Orion is the rumored next-generation AI model developed by OpenAI, designed to significantly enhance reasoning, language processing, and multimodal capabilities.

It is rumored to be 100 times more powerful than GPT-4, with the ability to handle text, images, and videos seamlessly.

Initially intended for key partner companies and not for broad public release, Orion aims to revolutionize various industries by providing advanced problem-solving and natural language understanding capabilities.

Thus, it advances OpenAI's vision towards artificial general intelligence (AGI) and strategic collaborations with Microsoft Azure.

And OpenAI is not alone there!

After rumors a few weeks back, it seems that Google has actually, even if briefly, leaked an AI prototype, “Jarvis,” designed to complete computer tasks like booking flights or shopping.

Despite being available temporarily on the Chrome extension store, the tool didn’t fully work and was quickly removed. Google planned to unveil Jarvis in December, joining competitors like Anthropic and OpenAI in AI assistance.

What happened there? As reported by The Information:

Accidental Release: Google briefly publicized an internal AI prototype, codenamed “Jarvis,” designed to complete tasks on a person’s computer.

Capabilities: Jarvis, a “computer-using agent,” aims to assist with tasks like purchasing products or booking flights.

Access Issue: The prototype, available via the Chrome extension store, didn’t function fully due to permission restrictions.

Removal: Google removed the product by midafternoon; it was intended for a December release alongside a new language model.

Competition: Anthropic and OpenAI are also developing similar AI task-assistance products.

What can we expect there?

Agentic AI: Personal, Persona-Based, Company Agents

The Academic Definition focuses on AI Agents as systems that reason and act autonomously, originating from the concept of "agency."

I love the business definition that Bret Taylor, the CEO of Sierra, gave in episode 82 of the No Priors podcast, where he explained that, in his view, three main kinds of agents will emerge:

Personal Agents: Help individuals with tasks like managing calendars or triaging emails.

Persona-Based Agents: Specialized tools for specific jobs (e.g., coding or legal work).

Company Agents: Customer-facing AI that enables businesses to engage digitally with their users.

More precisely, here’s the breakdown of the three types of agents, along with potential business models for each:

Personal Agents

Agents assist individuals with tasks like managing calendars, triaging emails, scheduling vacations, and preparing for meetings.

State of Development: Early-stage; complex due to broad reasoning requirements and extensive systems integrations.

Challenges: High complexity in task diversity and integration with personal tools.

Potential Business Models:

Subscription-Based Services: Charge users a recurring fee for access to personal assistant functionalities (e.g., premium tiers for advanced features).

Freemium Models: Offer basic features for free, with paid upgrades for advanced integrations and additional automation.

B2B Partnerships: Collaborate with productivity tool providers (e.g., Google Workspace, Microsoft 365) to integrate and sell personalized solutions.

Licensing: License the technology to companies creating proprietary productivity tools or devices (e.g., smartwatches, phones).

Persona-Based Agents

Specialized agents tailored for specific professions or tasks, such as legal assistants, coding assistants, or medical advisors.

State of Development: Mature in certain niches with narrow but deep task scopes.

Examples: Harvey for legal functions and coding agents for software development.

Advantages: Focused engineering and benchmarks streamline development.

Potential Business Models:

Vertical SaaS (Software as a Service): Offer domain-specific AI tools as subscription-based services targeted at professionals (e.g., lawyers, developers).

Pay-Per-Use: Monetize by charging based on usage or the number of completed tasks.

Enterprise Licensing: Provide customized agents for large organizations in specific industries.

Marketplace Integration: Integrate with platforms like GitHub (for coding agents) or Clio (for legal agents) and earn through platform fees or partnerships.

Company Agents

Customer-facing agents represent companies, enabling tasks like product inquiries, commerce, and customer service.

State of Development: Ready for deployment with current conversational AI technology.

Vision: Essential for digital presence by 2025, akin to having a website in 1995.

Potential Business Models:

B2B SaaS: Offer branded AI agents as a service to companies, providing monthly or annual subscription plans based on features and scale.

Performance-Based Pricing: Charge companies based on metrics like customer satisfaction, retention rates, or reduced operational costs.

White-Label Solutions: Provide customizable AI agent templates that companies can brand as their own.

Integration Fees: Earn from integrating AI agents into companies’ existing CRM, e-commerce, or support systems.

Revenue Sharing: For commerce-related interactions, take a small percentage of sales the AI agent facilitates.

Sierra's CEO, Bret Taylor, also emphasized that we'll see these agents evolve at hardware and software levels.

What will be the next device to enable AI Agents?

The Smartphone will be the "Central Hub of AI" in the initial phase

While, over time, AI might enable a whole new hardware paradigm and form factor, it's worth remembering that the first step of integrating AI is happening within the existing smartphone ecosystem.

In short, the smartphone will remain the "Central Hub of AI" in the next few years until a new native form factor evolves.

In particular, over the next 3-5 years, the iPhone will remain a key platform for the initial development of AI.

Take the AI iPhone (trend data below):

In its latest iPhone models, specifically the iPhone 16, Apple integrated advanced artificial intelligence (AI) capabilities known as "Apple Intelligence" (trend data below):

Apple Intelligence is a suite of generative AI capabilities developed by Apple, integrated across its products including iPhone, Mac, and iPad.

Still at an embryonic stage, this system enhances features like Siri, writing, image creation, and personal assistant functionality.

It aims to simplify and accelerate everyday tasks while prioritizing user privacy through on-device processing and Private Cloud Compute.

In the meantime, the smartphone will be the first device to be completely revamped before we see the emergence of AI-native devices, such as those combining AI with AR.

For now, the AI revolution in smartphones is moving toward hyper-personalization, with each player giving it its own twist:

Apple champions privacy with on-device AI,

Samsung boosts performance through smart optimizations,

Google elevates photography with stunning enhancements,

Huawei adds practical tools for everyday ease.

Each brand brings unique AI-driven features, turning phones into powerful personal assistants.

Below is the breakdown of each smartphone player's AI strategy:

Apple iPhone: Apple focuses on blending privacy with advanced AI capabilities. With its Apple Intelligence platform, the iPhone offers tools like a language model for email and document management and creative features like Image Playground and Genmoji. Apple’s strong commitment to on-device processing minimizes data transmission, appealing to privacy-conscious users.

Samsung Galaxy: Samsung’s Galaxy S24 Ultra, featuring the Snapdragon 8 Gen 3 for Galaxy chipset, emphasizes high performance with AI-optimized cores. Its Scene Optimizer camera feature automatically adjusts settings for various scenes, while intelligent performance optimization enhances responsiveness and extends battery life, making it a robust option for power users.

Google Pixel: Known for its photography, the Pixel uses its Tensor chip to power features like Magic Eraser for object removal in photos, AI-enhanced zoom, and low-light photography. The Gemini chatbot enhances communication, providing real-time captions, transcription, and translation, positioning Pixel as the top choice for photo and communication aficionados.

Huawei Pura 70 Series: Huawei’s AI focuses on practical enhancements. With features like Image Expand for background filling, Sound Repair for call quality, and an upgraded Celia assistant for image recognition, Huawei offers real-world AI solutions for daily convenience.

Re-emergence of Smart Assistants to reduce screen time?

As I'll show you further down in this research, as 2024 comes to a close, Apple, Google, and Amazon are all "secretly" working on revamping their smart assistants.

The wave started a decade ago, when these big tech players tried to dominate the "voice assistant" market, and it ended up as a missed promise.

These assistants have not delivered on their promises. Take Siri, which turned out to be a long-term flop because of its limited usefulness.

Yet, will we see the renaissance of these devices via Generative AI?

For instance, smart speakers (e.g., Amazon Echo with Alexa, Apple HomePod with Siri, Google Home) and headphones may become central to daily workflows.

Conversational interfaces in these devices could enable seamless, screen-free engagement for tasks like scheduling, reminders, or information retrieval.

Beyond the Smartphone form factor

Yet, we might figure out new form factors in the coming decade. Indeed, while the smartphone will remain the primary computing device for most users, how we interact with it is evolving.

Conversational AI and multimodal interfaces will integrate seamlessly into everyday experiences, reducing our dependence on screens.

Evolution of Customer Experiences

As Sierra's CEO, Bret Taylor, also emphasized, we might see these interesting trends with AI agents:

From Menus to Conversations:

The shift from rigid menu-driven systems to free-form conversational agents represents a significant evolution in customer interaction. Users can directly articulate their needs in natural language instead of navigating through predefined paths (e.g., website categories or phone menus). AI will process and act on these requests instantly.

Agents as Digital Front Doors:

Just as websites became a company's digital front door in the 1990s, conversational AI agents will become the primary mode of engagement by 2025. These agents will handle customer service inquiries and eventually manage all interactions with businesses, such as product browsing, transactions, and post-sales support.

Hyper-Personalized Interactions:

AI agents will offer tailored experiences, adjusting their tone, content, and functionality based on user preferences and history. For instance, an AI agent for a luxury brand might adopt a more formal and polished tone, while one for a casual retailer might use friendly, conversational language.

Customer-Centric Ecosystems:

The real-time nature of conversational agents allows businesses to be more agile in responding to customer needs. For instance, if a retailer introduces a new product, an AI agent can instantly acquire the necessary knowledge and incorporate it into interactions—something that would take weeks to implement in a traditional call center.

Sovereign AI

With AI requiring massive investments, many countries leverage a sovereign AI strategy to quickly catch up in this hectic race.

Sovereign AI is a nation's capability to develop and utilize artificial intelligence (AI) technologies independently, relying on its own resources such as infrastructure, data, workforce, and business networks.

This approach promotes technological self-sufficiency, national security, and economic competitiveness by allowing countries to control and customize AI solutions tailored to their needs and regional characteristics.

The Denmark Model

Take the case of Denmark's “sovereign AI,” funded through an interesting model in which the success of drugs like Ozempic is being used to build a massive AI supercomputer to accelerate healthcare research.

Denmark’s new AI supercomputer, Gefion, funded by Novo Nordisk’s weight-loss drug success, is set to transform national innovation.

Powered by Nvidia’s cutting-edge GPUs, Gefion will accelerate healthcare, biotech, and quantum computing breakthroughs, positioning Denmark as a leader in “sovereign AI” to drive economic and scientific growth.

Denmark used the following model:

Unique Funding Model: Denmark’s new AI supercomputer, Gefion, was funded through profits from Novo Nordisk’s blockbuster weight-loss drugs, Ozempic and Wegovy. Thus, it is the first AI supercomputer powered by pharmaceutical success.

Powerful AI Infrastructure: Built with Nvidia’s top-tier GPUs, Gefion aims to support Danish businesses, researchers, and entrepreneurs in fields like healthcare, biotechnology, and quantum computing, overcoming typical barriers of high costs and limited access to computing power.

Public-Private Partnership: The $100 million investment came from a collaboration between the Novo Nordisk Foundation and Denmark’s Export and Investment Fund, signaling AI's strategic importance to Denmark’s national innovation.

Sovereign AI Vision: Nvidia CEO Jensen Huang advocates for “sovereign AI,” seeing Gefion as a model for nations using AI infrastructure to harness national data as a resource and boost economic growth.

Impact on Drug Discovery: Novo Nordisk anticipates significant gains from Gefion in drug discovery and protein design, leveraging advanced computational capabilities to accelerate medical and scientific breakthroughs.

The Japanese Model

Or take the case of NVIDIA and SoftBank, which are transforming Japan’s AI landscape with a powerful new supercomputer and the world’s first AI-driven 5G telecom network.

This innovative AI-RAN unlocks billions in revenue by turning telecom networks into smart AI hubs, supporting applications from autonomous vehicles to robotics, and creating a secure national AI marketplace.

They are working on:

AI Supercomputer: SoftBank is building Japan’s most powerful AI supercomputer using NVIDIA’s Blackwell platform. The project aims to enhance sovereign AI capabilities and support industries across Japan.

AI-RAN Breakthrough: SoftBank and NVIDIA launched the world’s first AI and 5G telecom network, AI-RAN. This network allows telecom operators to transform base stations into AI revenue-generating assets by monetizing unused network capacity.

AI Marketplace: SoftBank plans an AI marketplace using NVIDIA AI Enterprise, offering localized, secure AI computing to meet national demand.

Real-World Applications: AI-RAN enables applications like remote support for autonomous vehicles and robotics control, demonstrating carrier-grade 5G and AI performance.

Revenue Potential: NVIDIA and SoftBank project up to 219% ROI for AI-RAN servers and $5 in AI revenue per $1 capex invested in the AI-RAN infrastructure.
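To put those two projections in perspective, here is a back-of-the-envelope sketch. The $5-per-$1 ratio and the 219% figure are the "up to" projections quoted above; the ROI formula used here (net gain divided by cost) is the standard textbook definition and is my assumption about how to read the figure, not NVIDIA's or SoftBank's published methodology. The capex amount is hypothetical.

```python
def projected_ai_revenue(capex: float, revenue_per_capex_dollar: float = 5.0) -> float:
    """Upper-bound AI revenue implied by the '$5 per $1 of capex' projection."""
    return capex * revenue_per_capex_dollar


def net_gain_from_roi(cost: float, roi_percent: float = 219.0) -> float:
    """Net gain implied by an ROI figure, using ROI = net gain / cost."""
    return cost * roi_percent / 100.0


# Hypothetical operator investing $1M (illustrative only).
capex = 1_000_000.0
print("projected AI revenue (upper bound):", projected_ai_revenue(capex))
print("net gain at 219% ROI (upper bound):", net_gain_from_roi(capex))
```

Both numbers are ceilings under the stated projections, so a real deployment should be modeled with more conservative inputs.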

The UAE Model

Or take the UAE model, which focuses on:

"Regulatory Sandbox" for AI: According to OpenAI CEO Sam Altman, the UAE is positioned as a global testbed for AI technologies.

Microsoft’s $1.5 Billion Investment: Funding G42, a leading Emirati AI firm, showcasing significant U.S.-UAE collaboration.

Global AI Infrastructure Investment Partnership: Involves Microsoft, BlackRock, Mubadala, and others to drive AI-related growth.

Advanced Technology Focus: UAE and U.S. collaboration emphasizes AI as a key driver for economic innovation.

AI in Economic Realignment: AI is central to the UAE's shift from traditional sectors (oil and defense) to future-focused industries.

Strategic Partnerships in AI Development: Reinforces UAE’s commitment to aligning closely with U.S. expertise in AI and emerging technologies.

The UK Model

Conversely, other countries are trying to control AI development via regulation. For instance, the UK launched a new AI safety platform, aiming to lead globally in AI risk assessment.

Offering resources for responsible AI use, the initiative supports businesses in bias checks and impact assessments. With partnerships and a vision for growth, the UK seeks to become a hub for trusted AI assurance.

In short, we can learn from these as potential models of Sovereign AI:

Denmark: Unique in leveraging profits from pharmaceutical success (Ozempic and Wegovy) to fund AI infrastructure, reflecting a direct reinvestment strategy from industry gains.

Japan: Focuses on monetizing telecom infrastructure (AI-RAN) to transform network assets into revenue streams, showcasing innovation in leveraging existing sectors for AI funding.

UAE: Attracts foreign investment (Microsoft, BlackRock) and emphasizes partnerships to integrate global expertise, representing a model of international collaboration.

UK: Government-led funding emphasizing regulatory safety and partnerships, showcasing a cautious and risk-aware approach to AI development.

AI Robotics

AI Robotics is a field that combines artificial intelligence (AI) with robotics.

It enables robots to perform complex tasks autonomously by integrating AI algorithms for object recognition, navigation, and decision-making tasks.

This integration enhances robot capabilities, allowing them to mimic human-like intelligence and adapt to changing environments more effectively.

AI robotics is crucial for applications like autonomous vehicles, precision manufacturing, and advanced home automation systems.

This time, though, is quite different for a simple reason: We're also entering a general-purpose revolution in robotics!

Enter general-purpose robotics via world modeling

World modeling is a crucial stepping stone for the next step in the evolution of AI.

The next frontier of general-purpose robotics depends on the evolution of “world models” or AI-based environmental maps/representations, which will enable robots to predict interactions and navigate complex, dynamic settings effectively.

All major big tech players are massively investing in it.

For instance, NVIDIA just announced new advancements in world modeling that will transform how robots understand and interact with their surroundings.

Robots can now better anticipate and adapt to real-world scenarios by building detailed AI-powered representations of environments.

This breakthrough enables robots to handle tasks with greater awareness and precision, allowing smarter, more human-like automation across industries.

As a result, sectors like logistics, healthcare, and retail stand to benefit from robots that are more capable and more adaptable to diverse, complex environments.

Why does it matter?

Enhanced Environmental Understanding: Robots can build AI-powered representations of their surroundings, allowing them to predict how objects and environments will respond to their actions.

Adaptability: World modeling enables robots to navigate better and adapt to diverse, dynamic environments, making them suitable for complex, real-world applications.

Human-Like Precision: By “understanding” their environments, robots achieve more precise, natural movements, bringing them closer to human-like interactions.

Broad Industry Impact: This advancement holds transformative potential across logistics, healthcare, retail, and more, as robots can handle a wider range of tasks more accurately.

Scalable Automation: World modeling supports more intelligent, efficient automation, paving the way for robots that not only perform tasks but also learn and adjust in real time.

Another aspect is dexterity.

Why has dexterity become the “holy grail” of general-purpose robotics?

We humans take our dexterity for granted, yet, at this stage, it is among the hardest challenges in robotics. If solved, this problem can create the next trillion-dollar industry, as it would open up the space to general-purpose robotics.

Indeed, robot dexterity is challenging because it requires robots to handle diverse, delicate objects in unpredictable environments—something we humans do instinctively.

Achieving this demands sophisticated sensors, machine learning, and real-time adaptability to avoid damaging items or failing tasks. Unlike repetitive, controlled tasks, dexterity involves adjusting to unique shapes, textures, and weights in dynamic settings.

This complexity has made robot dexterity a “holy grail” in robotics, as it’s essential for automating tasks like sorting, packing, or even assisting in healthcare, where human-like precision and adaptability are critical.

Solving it could unlock new levels of automation across industries, reshaping labor and efficiency.

That’s why a company like Physical Intelligence got $400 million in funding led by Jeff Bezos to try to revolutionize robotics by enabling robots to handle objects with human-like precision.

Its breakthrough pi-zero software empowers robots to adapt and perform complex tasks autonomously, promising transformative impacts across logistics, healthcare, and beyond, while also raising questions about employment.

This shows impressive momentum in the field as:

Investment for Precision Robotics: Backed by Jeff Bezos and others, Physical Intelligence secured $400 million to advance robotic dexterity, aiming to give robots a human-like touch. This breakthrough could reshape logistics, retail, and other sectors by enabling robots to handle diverse objects.

Pi-zero Software: The startup’s new control software, pi-zero, uses machine learning to enable robots to perform complex tasks like folding laundry, bagging groceries, and even removing toast from a toaster. It allows robots to adjust in real time, enhancing their adaptability in unpredictable environments.

Broader Industry Impact: This innovation addresses key automation challenges as businesses seek solutions amid labor shortages, especially in warehousing and retail. The technology also holds potential for agriculture, healthcare, and hospitality, where robots could handle labor-intensive or support tasks, potentially reducing manual roles.

Industry Momentum in AI Robotics: Amazon, Walmart, and SoftBank are deploying intelligent robots to handle tasks in fulfillment, inventory, and customer service. These robots perform repetitive or labor-intensive duties, allowing human employees to focus on higher-level tasks.

Spatial intelligence is the next frontier

Spatial intelligence, through world modeling, is making impressive leaps.

Boston Dynamics’ latest Atlas robot showcases its autonomy in a recent demo video. It moves car parts with adaptive sensors and no teleoperation. Atlas performs real-time adjustments, targeting automotive factory work.

Boston Dynamics’ Atlas robot is impressive because it demonstrates true autonomy in complex tasks—picking and moving automotive parts without human guidance.

It adapts to environmental changes, like shifts in object positions or action failures, using advanced sensors and real-time adjustments.

This level of independence, especially in dynamic factory settings, sets a high bar for robotics, as most competitors still rely on pre-programmed or remote-controlled actions.

Atlas’s efficient, powerful movements save time, showcasing its potential to transform industrial automation with speed and adaptability.

But of course, a reminder that this is only a demo!

As I've shown you so far, general-purpose robotics will see incredible development in the coming decade.

However, keep in mind that there are still many limitations, and we don't yet know how far along these world models really are!

An interesting study from MIT and Harvard “stress-tested” LLMs on world modeling.

The researchers found that large language models (LLMs) lack a coherent understanding of the world, performing well only within set parameters.

Using new metrics, they found that AI models can navigate tasks but fail when conditions change, underscoring the need for adaptable, rule-based world comprehension models.

As per the study:

Research Findings: MIT and Harvard researchers found that large language models (LLMs) can perform tasks like giving driving directions with high accuracy yet lack a true understanding of the underlying world structure. Model performance dropped significantly when faced with changes, such as street closures.

New Metrics for World Models: The team developed two metrics—sequence distinction and sequence compression—to test whether AI models have coherent “world models.” These metrics helped evaluate how well models understand differences and similarities between states in a structured environment.

Testing Real-World Scenarios: By applying these metrics, researchers discovered that even high-performing AI models generated flawed internal maps with imaginary streets and incorrect orientations when navigating New York City.

Implications: The study suggests that current AI models may perform well in specific contexts but fail if the environment changes. For real-world AI applications, models need a more robust, rule-based understanding.

Future Directions: Researchers aim to test these metrics on more diverse problems, including partially known rule sets, to build AI with accurate, adaptable world models, which could be valuable for scientific and real-world tasks.
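To give an intuition for the two metrics, here is a toy sketch. It is a heavily simplified illustration of the idea, not the researchers' actual method: it only looks one move ahead, the four-intersection street map is invented, and the "model" is just a hypothetical function that returns the set of next moves it considers valid. Compression asks whether two routes that end at the same intersection are treated the same; distinction asks whether routes that end at different intersections are told apart.

```python
from itertools import combinations

# Toy ground truth: a tiny one-way street map (invented for illustration).
# TRANSITIONS[state][move] -> next state; missing moves are invalid.
TRANSITIONS = {
    "A": {"east": "B", "south": "C"},
    "B": {"south": "D"},
    "C": {"east": "D"},
    "D": {},
}
START = "A"


def true_state(prefix):
    """Follow a sequence of valid moves from START and return the final state."""
    state = START
    for move in prefix:
        state = TRANSITIONS[state][move]
    return state


def true_next_moves(prefix):
    """Set of moves that are really valid after following the prefix."""
    return frozenset(TRANSITIONS[true_state(prefix)])


def score(model_next_moves, prefixes):
    """Return (compression, distinction) as fractions of prefix pairs handled correctly."""
    same_ok = same_total = diff_ok = diff_total = 0
    for p, q in combinations(prefixes, 2):
        model_agrees = model_next_moves(p) == model_next_moves(q)
        if true_state(p) == true_state(q):
            # Same true state: the model should treat both routes identically.
            same_total += 1
            same_ok += model_agrees
        elif true_next_moves(p) != true_next_moves(q):
            # Different true states: the model should tell the routes apart.
            diff_total += 1
            diff_ok += not model_agrees
    compression = same_ok / same_total if same_total else 1.0
    distinction = diff_ok / diff_total if diff_total else 1.0
    return compression, distinction


def surface_model(prefix):
    """Hypothetical flawed model: it only looks at the last move, not the location."""
    if not prefix:
        return frozenset({"east", "south"})
    return frozenset({"south"}) if prefix[-1] == "east" else frozenset({"east"})


PREFIXES = [(), ("east",), ("south",), ("east", "south"), ("south", "east")]
print("ground truth :", score(true_next_moves, PREFIXES))
print("surface model:", score(surface_model, PREFIXES))
```

The flawed model can still propose plausible-looking moves on short routes, yet it scores poorly because it has no consistent notion of where it is, which mirrors the gap the study describes.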

So let's keep that in mind...

And to recap:

AI Robotics Integration: Combines AI with robotics for tasks like object recognition, navigation, and decision-making across industries such as logistics and manufacturing.

General-Purpose Robotics: Focused on robots handling diverse tasks with adaptability across various industries.

World Modeling: Enables robots to create AI-powered environmental maps for better prediction and navigation in dynamic settings.

NVIDIA Advances: Developing AI-powered world models to enhance robotic awareness and precision.

Boston Dynamics Atlas: Showcased autonomous factory work with real-time adaptability in moving car parts.

Dexterity in Robotics: A key challenge requiring robots to handle diverse objects in unpredictable environments.

Physical Intelligence (pi-zero): Backed by $400M in funding, its software aims to enable human-like robotic dexterity for tasks like packing and healthcare assistance.

MIT & Harvard Research: Found AI struggles with dynamic real-world changes, highlighting gaps in robust world comprehension.

Spatial Intelligence: Enhances robotic capabilities in tasks requiring precise environmental awareness and adaptation.

Broader Industry Impact: Applications in logistics, healthcare, retail, and agriculture, addressing labor shortages and improving efficiency.

In the meantime, as 2024 ends, we see an impressive explosion of "humanoids."

A humanoid robot is designed to resemble the human body in shape and function, typically featuring a torso, head, arms, and legs.

These robots are created to mimic human motion and interaction, allowing them to perform tasks that require a human-like form and motion, such as walking, talking, and interacting with environments.

As of 2024, the sector is already boasting a broad set of companies working on the problem!

This is where we are right now, with a list of the top players in the field of humanoid robots:

HD Atlas (Boston Dynamics)

NEO (1X, Norway)

GR-1 (Fourier, Singapore)

Figure 01 (Figure AI, USA)

Phoenix (Sanctuary AI, Canada)

Apollo (Apptronik, USA)

Digit (Agility, USA)

Atlas (Boston Dynamics, USA)

H1 (Unitree, China)

Optimus Gen 2 (Tesla, USA)

More precisely:

Atlas by Boston Dynamics: A highly dynamic, fully electric humanoid robot designed for real-world applications. Atlas features an advanced control system and state-of-the-art hardware, enabling it to perform complex movements and tasks with agility and precision.

Salvius: An open-source humanoid robot project focused on creating a versatile platform for research and development. Salvius is built with a versioned engineering specification to ensure each component meets a minimum standard of functionality before integration.

Digit by Agility Robotics: A bipedal humanoid robot with a unique leg design for dynamic movement. Digit has nimble limbs and a torso packed with sensors and computers, enabling it to navigate complex environments and perform tasks in warehouses and other settings.

Figure 02 by Figure AI: The second-generation humanoid robot developed by Figure AI, designed to set new standards in AI and robotics. Figure 02 combines human-like dexterity with cutting-edge AI to support various industries, including manufacturing, logistics, warehousing, and retail.

HRP-4: A humanoid robot developed as a successor to HRP-2 and HRP-3, focusing on a lighter and more capable design. HRP-4 aims to improve manipulation and navigation in human environments, making it suitable for various research and practical applications.

Optimus by Tesla: A humanoid robot designed by Tesla to perform unsafe, repetitive, or boring tasks for humans. Optimus intends to leverage Tesla's expertise in AI and robotics to create a versatile and capable robotic assistant.

H1 by Unitree Robotics: Unitree's first universal humanoid robot, H1, is a full-size bipedal robot capable of running. H1 represents a significant step forward in humanoid robotics, aiming to integrate into various applications with its advanced mobility and adaptability.

Roboy: An advanced humanoid robot developed at the Artificial Intelligence Laboratory of the University of Zurich. Roboy is designed to emulate human movements and interactions, with applications in research and development of soft robotics and human-robot interaction.

RH5: A series-parallel hybrid humanoid robot designed for high dynamic performance. RH5 can perform heavy-duty dynamic tasks with significant payloads, utilizing advanced control systems and trajectory optimization techniques.

NimbRo-OP2X: An affordable, adult-sized, 3D-printed open-source humanoid robot developed for research purposes. NimbRo-OP2X aims to lower the entry barrier for humanoid robot research, providing a flexible platform for various applications and studies.

Thus, the development of humanoid robots is progressing rapidly, with significant investments and technological advancements.

These robots have the potential to transform industries, with automating tasks, addressing labor shortages, and increasing efficiency as the initial use cases!

AI smart home

An AI (Artificial Intelligence) smart home refers to a residence equipped with internet-connected devices that use machine learning and artificial intelligence to automate and control various aspects of the house.

These devices can learn the habits and preferences of the inhabitants, providing personalized and efficient service.

Examples include voice-controlled systems that adjust lighting, temperature, and security settings based on user behavior and devices that optimize energy usage and offer proactive assistance through data analysis.

And as we reach the end of 2024, Apple might release an AI Smart Wall device.

It would be powered by Apple Intelligence, offering Siri-centric functionality and seamless smart home management, with sensors, including proximity sensors, that adjust displayed information based on user distance.

Why does it matter?

When Apple tries to enter a market niche via the launch of a new device, it finds a "beachhead" that would enable it to create a new scalable market.

And it's doing it in a segment dominated by Google with its Nest devices.

Autonomous Vehicles

Autonomous Vehicles are equipped with advanced technologies, including sensors, cameras, radar, and artificial intelligence, that enable them to operate with minimal or no human input.

These vehicles can navigate roads, traffic, and environments without needing a driver, using data from various sensors to make decisions and control the vehicle's actions.

They are designed to reduce traffic congestion, lower accident rates, and enhance mobility for various groups. However, fully autonomous vehicles are still in the testing phase and are not yet widely available.

We're at a tipping point there.

Waymo is one of the hidden gems from Google, now Alphabet.

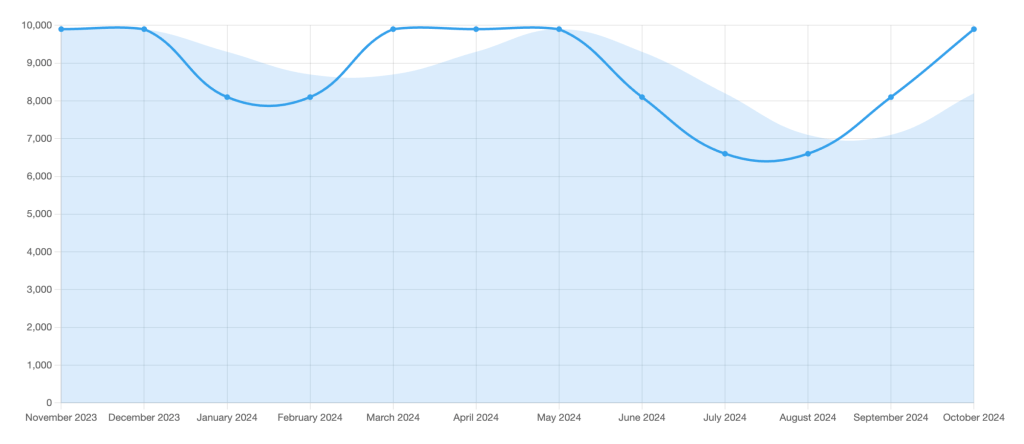

The self-driving company, born as part of Google’s other bets on the future, just raised $5.6 billion and hit a critical milestone of 150,000 paid autonomous trips a week!

Now, Waymo is moving toward end-to-end autonomous driving by leveraging Google’s Gemini infrastructure via its End-to-End Multimodal Model for Autonomous Driving (EMMA).

LLMs like Waymo’s Gemini-powered EMMA could be game-changers for self-driving cars by offering a holistic “world knowledge” base beyond standard driving data, allowing cars to understand and predict complex scenarios.

In short, LLMs can apply advanced reasoning and adapt to unexpected environments, which could make these systems more flexible and effective in real-world conditions.

This shift from modular systems to end-to-end models could reduce accumulated errors and improve decision-making, propelling autonomous driving closer to seamless, safe deployment at scale.

Of course, this is only at the embryonic stage, and we’ll know its real potential in a few years.

In the meantime, how does Waymo tackle the autonomous vehicle problem?

Introduction of EMMA: Waymo unveiled its “End-to-End Multimodal Model for Autonomous Driving” (EMMA), designed to help its robotaxis navigate by processing sensor data to predict future trajectories and make complex driving decisions.

Leveraging Google’s MLLM Gemini: Waymo’s model builds on Google’s multimodal large language model (MLLM) Gemini, marking a significant move to use advanced AI in real-world driving applications, potentially expanding the uses of MLLMs beyond chatbots and digital assistants.

End-to-End Model Benefits: Unlike traditional modular systems that separate tasks (e.g., perception, mapping, prediction), EMMA’s end-to-end model integrates these functions, which could reduce errors and improve adaptability to novel or unexpected driving environments.

Superior Reasoning: EMMA utilizes “chain-of-thought reasoning,” mimicking human-like step-by-step logical processing, enhancing decision-making capabilities, particularly in complex road situations like construction zones or animal crossings.

Limitations and Challenges: EMMA currently faces limitations with processing 3D inputs (like lidar and radar) and handling many image frames. Additionally, MLLMs like Gemini may struggle with reliability under high-stakes conditions, posing risks for real-world deployment.

Future Research and Caution: Waymo acknowledges EMMA’s challenges and stresses the need for continued research to mitigate these issues before wide-scale deployment.
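To see why "accumulated errors" matter in a modular stack, here is a minimal sketch. It assumes each stage fails independently and uses invented success rates, so it illustrates the compounding effect only; it says nothing about how reliable Waymo's modular system or EMMA actually is, and it does not show that an end-to-end model is more reliable.

```python
from math import prod


def pipeline_reliability(stage_success_rates):
    """Probability that every stage succeeds, assuming independent stage failures."""
    return prod(stage_success_rates)


# Hypothetical per-stage success rates for perception, mapping,
# prediction, and planning (illustrative numbers, not measurements).
modular_stages = [0.99, 0.99, 0.99, 0.99]
print("four chained stages:", pipeline_reliability(modular_stages))
print("single stage       :", pipeline_reliability([0.99]))
```

Even when each stage looks strong on its own, chaining several of them lowers the odds that the whole pipeline gets a scenario right, which is the motivation usually given for integrating the stages into one model.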

In addition, LLMs are fundamentally changing self-driving by adding reasoning capabilities on top of the driving stack, allowing better tuning of a vehicle's actions in the real world and making it possible to audit and correct mistakes.

Waymo's new Foundation Model combines advanced AI with real-world driving expertise, integrating large language and vision-language models to boost autonomous vehicle intelligence.

This innovation enables the Waymo Driver to interpret complex scenes, predict behaviors, and adapt in real time, setting new standards for safety and reliability in autonomous driving.

How?

Mission-Driven AI: Waymo aims to be the “world’s most trusted driver,” solving complex AI challenges to create a safe, reliable autonomous vehicle (AV) experience.

Advanced Technology Stack: The Waymo Driver uses a sophisticated sensor suite (lidar, radar, cameras) and real-time AI to interpret the dynamic environment and navigate complex scenarios.

Cutting-Edge AI Models: Waymo’s Foundation Model integrates driving data with Large Language and Vision-Language Models, enhancing scene interpretation, behavior prediction, and route planning.

Simulation and Rapid Iteration: Waymo’s high-powered infrastructure and closed-loop simulation enable fast iteration, testing realistic driving scenarios to refine model capabilities.

Safety at Scale: Serving hundreds of thousands of riders, Waymo’s safety-driven AI constantly improves with each mile driven, supported by rigorous evaluation methods.

Commitment to Future Innovation: Waymo sees immense potential ahead, encouraging AI talent to tackle groundbreaking autonomous driving, robotics, and embodied AI challenges.

Are we really at the stage where this can scale up? Maybe...

AI music

AI music refers to music compositions, productions, or performances created or aided by artificial intelligence algorithms.

These algorithms analyze vast musical datasets, learn patterns, and generate original pieces or emulate specific styles, transforming various aspects of music production from composition to performance.

AI music tools assist in tasks like mixing, mastering, and sound design while enhancing the accessibility and personalization of music for listeners.

News came out recently that YouTube started experimenting with an AI Music feature rolled out within Shorts:

AI Music Expansion: The "Dream Track" experiment now includes an AI remix option for select tracks, enabling customized 30-second soundtracks.

Live-Stream Reminders in Shorts: Automated reminders for scheduled live streams now appear in Shorts feeds 24 hours before the stream starts.

Shorts Conversion Updates: Videos under 3 minutes, uploaded after October 15th, will be classified as Shorts. The platform-wide conversion is expected to be completed by next month.
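The two scheduling rules above can be sketched as simple checks. This is only an illustration of the rules as stated here: the year 2024 is my assumption based on the article's timeframe, the function names are hypothetical, and any other eligibility conditions YouTube may apply are not modeled.

```python
from datetime import date, datetime, timedelta

SHORTS_MAX_DURATION = timedelta(minutes=3)
CUTOFF_DATE = date(2024, 10, 15)  # year assumed from the article's timeframe


def is_classified_as_short(duration: timedelta, uploaded_on: date) -> bool:
    """Under 3 minutes AND uploaded after the cutoff date, as described above."""
    return duration < SHORTS_MAX_DURATION and uploaded_on > CUTOFF_DATE


def reminder_time(stream_start: datetime) -> datetime:
    """Live-stream reminders appear in Shorts feeds 24 hours before the stream."""
    return stream_start - timedelta(hours=24)


print(is_classified_as_short(timedelta(minutes=2, seconds=30), date(2024, 11, 1)))
print(reminder_time(datetime(2024, 12, 1, 18, 0)))
```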

What potential does it have, and what implications for the music industry?

Increased Music Discovery: AI remixes of popular songs allow creators to reimagine tracks in different genres and moods, increasing exposure for the original artist. Tracks become interactive, encouraging listeners to engage creatively and potentially driving streaming and purchase metrics.

New Revenue Streams for Artists: Attribution ensures artists retain recognition and royalties for AI-generated variations of their work. It also opens opportunities for licensing AI-generated remixes for advertisements, content, and user-generated videos.

Creative Democratization: AI tools enable independent creators to access professional remixing capabilities, leveling the playing field with major labels. It could lead to an explosion of micro-genres and experimental remixes, pushing creative boundaries.

Potential Challenges: Copyright complexities: Determining ownership and royalties for AI-generated content might require new legal frameworks. There is also a risk of oversaturation as infinite remixes dilute the uniqueness of original tracks.

What's in it for YouTube?

Enhanced Engagement:

AI music remixes make Shorts more dynamic and appealing, leading to higher viewer retention and increased ad impressions.

Live-stream reminders within Shorts seamlessly connect creators’ broader content offerings, enhancing cross-platform engagement.

New Ad Opportunities:

Custom Soundtracks for Ads: Brands could commission AI-generated remixes tailored to their campaigns, aligning ads more closely with target audiences.

Interactive Ads: Brands might integrate AI remix features into their campaigns, allowing users to customize music tracks associated with the ad.

Improved Creator Monetization:

Creators leveraging AI music tools may draw larger audiences, increasing the effectiveness of mid-roll and pre-roll ads.

Shorts integration with live streams offers advertisers a two-pronged approach: targeting both short-form and live content audiences.

Broader Music Licensing Ecosystem:

AI remixes could streamline licensing processes for advertisers, as YouTube ensures proper attribution and royalty handling within its ecosystem.

AI video generation

AI video generation has become a staple of the current AI paradigm. Indeed, the ability to tokenize everything has also enabled impressive breakthroughs on the generative side, for both images and videos.

Tokenization in the context of AI video generation involves breaking down video data into smaller, structured components (tokens) that a model can process.

This is similar to how Large Language Models (LLMs) process text, but the tokens in video generation represent visual, temporal, and sometimes audio elements.

Thus, it's critical to understand that AI video generation is inherently a multimodal problem: it requires integrating and synchronizing text, audio, and video tokens to create coherent, high-quality outputs.
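To make the idea of video tokens more concrete, here is a minimal sketch in Python. It is only an illustration under simplifying assumptions, not how Meta, OpenAI, or any other vendor actually does it: the function name `tokenize_video`, the patch sizes, and the toy grayscale video are all hypothetical. It simply cuts a video into small blocks that span a few frames (time) and a few pixels (space), and treats each block as one token.

```python
from typing import List

# Assumption: a video is a nested list video[t][y][x] with one grayscale value per pixel.
Video = List[List[List[int]]]


def tokenize_video(video: Video, pt: int, ph: int, pw: int) -> List[List[int]]:
    """Split a video into non-overlapping spatiotemporal patches.

    Each patch spans pt frames, ph rows, and pw columns, and is flattened
    into one list of pixel values (one "token"). A production system would
    typically map each patch to a learned embedding or codebook index instead.
    """
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    if frames % pt or height % ph or width % pw:
        raise ValueError("video dimensions must be divisible by the patch size")

    tokens = []
    for t0 in range(0, frames, pt):          # step through time
        for y0 in range(0, height, ph):      # step through rows
            for x0 in range(0, width, pw):   # step through columns
                patch = [
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ]
                tokens.append(patch)
    return tokens


# A toy 4-frame, 4x4-pixel video where each pixel stores its own flat index.
toy_video = [
    [[t * 16 + y * 4 + x for x in range(4)] for y in range(4)]
    for t in range(4)
]
tokens = tokenize_video(toy_video, pt=2, ph=2, pw=2)
print(len(tokens), len(tokens[0]))  # number of tokens, values per token
```

Because every token covers more than one frame, it carries temporal as well as visual information, which is what sets video tokens apart from plain image patches. Text and audio would need their own token streams, aligned with these.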

AI Video Generation: The Hollywood Commercial Use Case

For instance, Meta recently unveiled a generative AI video generator capable of making actual movies, music included:

The prompt was: “A fluffy koala bear surfs. It has a grey and white coat and a round nose. The surfboard is yellow. The koala bear is holding onto the surfboard with its paws. The koala bear’s facial expression is focused. The sun is shining.” Credit of Gif: Meta

This is a perfect example of how Meta managed, via its new AI model, to combine multiple data types into a whole.

It shows how Meta managed to:

Create realistic videos from short text prompts, turning imaginative scenes into visual content (e.g., a koala surfing or penguins in Victorian outfits)

Edit existing videos, add backdrops, and modify outfits while preserving original content.

Generate videos based on images and integrate photos of people into AI-created movies.

On top of that, on the audio side:

It features a 13B-parameter audio generator that adds sound effects and soundtracks based on simple text inputs (e.g., "rustling leaves").

Audio generation is currently limited to 45 seconds, but the model can sync sounds with visuals.

The interesting thing is that Meta is targeting Hollywood and creators, blending professional and casual use cases.

That is why Meta collaborated with filmmakers and video producers during development.

Yet, while AI video generation is progressing fast, it's worth remembering that Meta's tool is not yet available for public use due to high costs and long generation times.

In addition, a critical issue with all these AI image and video generators is the limited transparency about their training data sources, which raises controversies.

In Meta's case, the model might well have been trained on user-generated content and photos from Meta platforms (e.g., Facebook and Meta Ray-Ban smart glasses).

Meta is not alone in progressing quickly on AI video generation for creators.

Indeed, it competes with other AI video tools like RunwayML’s Gen 3 and OpenAI’s Sora, but it offers additional capabilities like video editing and integrated audio.

All these players are targeting creatives as primary users for these upcoming platforms.

AI Video Generation: The Productivity Commercial Use Case

Another critical angle on AI video generation comes from Google: Google Vids is now available to Workspace Labs and Gemini Alpha users, with general availability expected by the end of the year.

Key features include:

AI-Assisted Storyboarding: Utilizes Google's Gemini technology to generate editable outlines with suggested scenes, stock media, and background music based on user prompts and files.

In-Product Recording Studio: This allows users to record themselves, their screens, or audio with a built-in teleprompter to assist in confidently delivering messages.

Extensive Content Library: Provides access to millions of high-quality, royalty-free media assets, including images and music, to enrich video content.

Customization Options: Offers a variety of adaptable templates, animations, transitions, and photo effects to personalize and enhance videos.

Seamless Collaboration: Enables easy sharing and collaborative editing within the Google Workspace environment, similar to Docs, Sheets, and Slides.

The productivity use case has massive potential as well!

Key Highlights of AI Video Generation

Technological Basis

Tokenization: The process of breaking down video into smaller components (tokens) such as visual, temporal, and audio elements, enabling AI to process multimodal data.

Multimodal Integration: Synchronization of text, audio, and video tokens is essential for creating coherent, high-quality outputs.

Commercial Use Cases

Hollywood and Creative Industry

Meta’s Gen AI Video Generator:

Generates realistic videos from text prompts (e.g., a surfing koala).

Enables video editing, adding backdrops, and modifying elements while maintaining original content.

Integrates audio generation (13B parameter model), syncing sounds and soundtracks with visuals.

Collaborates with filmmakers and video producers, targeting professional and casual creators.

Limitations:

High costs and long generation times.

Potential controversies over the use of training data from user-generated content.

Competitors:

RunwayML’s Gen 3 and OpenAI’s Sora are also advancing in this space, targeting creators but with varying features and focus areas.

Google Vids (Productivity Use Case):

AI-Assisted Storyboarding: Generates editable outlines, scenes, and music based on user prompts.

In-Product Recording Studio: Features teleprompter-assisted recording for confident delivery.

Extensive Content Library: Access to millions of royalty-free media assets.

Customization: Adaptable templates, animations, and effects for personalization.

Collaboration: Seamless sharing and editing within Google Workspace.

Limitations and Challenges

Access Restrictions: Many tools, like Meta’s video generator, are not yet publicly available due to cost and processing constraints.

Ethical Concerns: Limited transparency regarding training data sources, raising potential legal and ethical issues.

Industry Implications

Target Audience: Primarily creators, filmmakers, and businesses seeking high-quality, customized content.

Future Potential: Rapidly advancing tools may democratize video creation, impacting industries like entertainment, marketing, and education.

AI Advertising

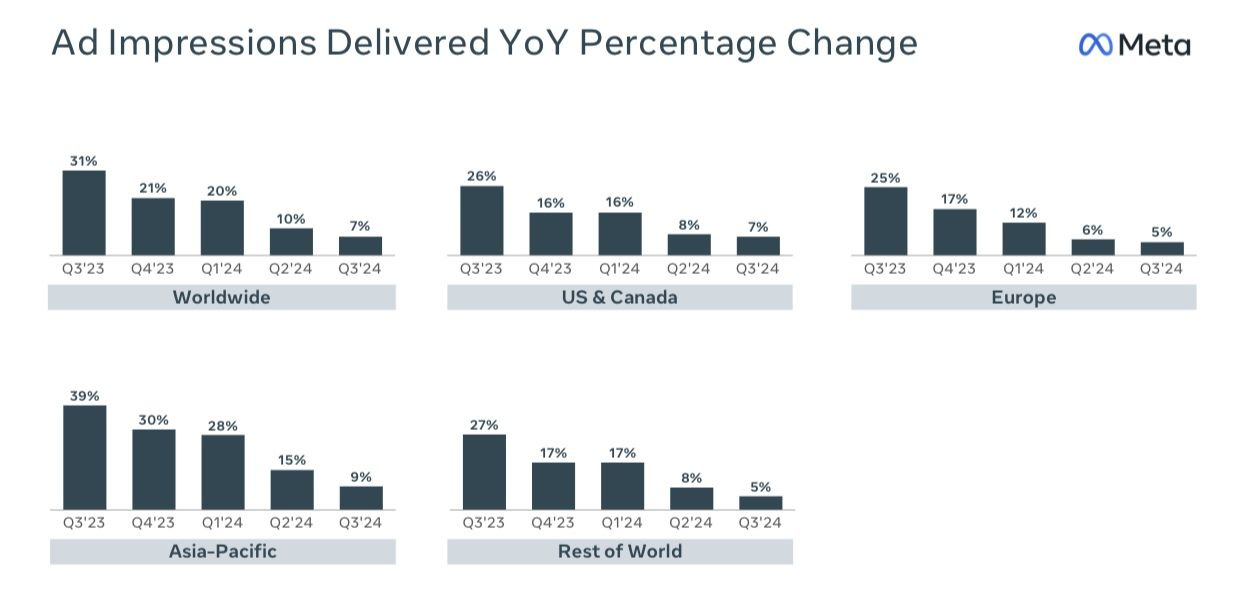

As we close 2024, another key takeaway emerged from the financials of top big tech players in the advertising space, like Google, Meta, and TikTok: AI attached to their advertising platforms can create a massive revenue boost in the short term, as low-hanging fruit for these companies!

Alphabet's AI Ads Potential

According to its Q3 2024 financial report, Alphabet will further ramp up the integration of ads within its AI-powered search features in 2025.

Indeed, according to the latest earnings report, Alphabet’s AI advancements are reshaping Search, integrating ads within new AI-powered summaries for enhanced monetization.

Increased capital spending in 2025 highlights Alphabet’s commitment to AI as it diversifies beyond traditional ad revenue.

How is Alphabet (Google) integrating AI into its advertising platforms?

AI Investments Drive Growth: Alphabet’s AI investments have boosted its Search and Cloud businesses, with Cloud revenue rising 35%, the fastest in eight quarters.

Increased Ad Revenue: YouTube ad sales were strong, partly due to U.S. election spending, with Alphabet’s overall ad revenue reaching $65.85 billion.

Higher Capital Expenditures Planned: CFO Anat Ashkenazi announced that capital spending will increase in 2025, reflecting Alphabet’s commitment to AI and cloud expansion.

Cloud as a Revenue Diversifier: Cloud is increasingly offsetting growth slowdowns in Alphabet’s ad business, helping to diversify revenue sources amid rising competition from Amazon and TikTok.

New AI-Driven Ad Features: Google is integrating ads into AI-powered Search summaries, enhancing user experience and monetization by summarizing content with generative AI.

In addition, Google is also integrating AI into its analytics platform.

For instance, Google Looker’s new GenAI-powered agents transform data analytics with proactive insights, automated analysis, and trusted outputs.

Leveraging LookML for reliable, organization-wide data consistency and Google’s Gemini model, Looker’s agentic AI aims to empower all users—beyond specialists—to access and act on valuable insights seamlessly, redefining business intelligence.

How is Google integrating AI into Looker’s architecture?

Agentic AI for Proactive Analysis: Looker’s GenAI-powered agents can independently perform complex tasks, such as suggesting follow-up questions, identifying data anomalies, and recommending metrics.

Semantic Layer for Trusted Data: Looker’s agents leverage LookML, a semantic layer, to ensure AI outputs are consistent and trustworthy across the organization, setting it apart from competitors.

Conversational Analytics: Looker’s flagship feature enables users to ask questions about their data with confidence, thanks to AI responses grounded in a reliable data foundation.

Integration with Google Gemini: Looker’s GenAI functionality is built on Google’s Gemini model, enhancing capabilities like large context windows, allowing deeper insights and seamless integration.

Future Vision for BI: Looker aims to make BI tools more accessible and insightful for all employees, not just specialists, by enhancing AI-driven analysis and focusing on reliability and sophisticated reasoning.

What about Meta?

Meta AI Ads Strategy

How has integrating AI into the Meta Ads platform affected its financials and the digital advertising ecosystem?

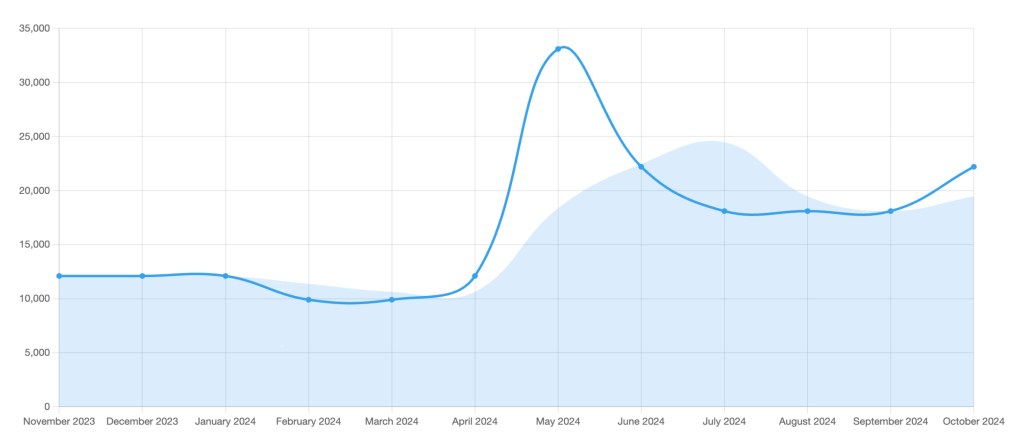

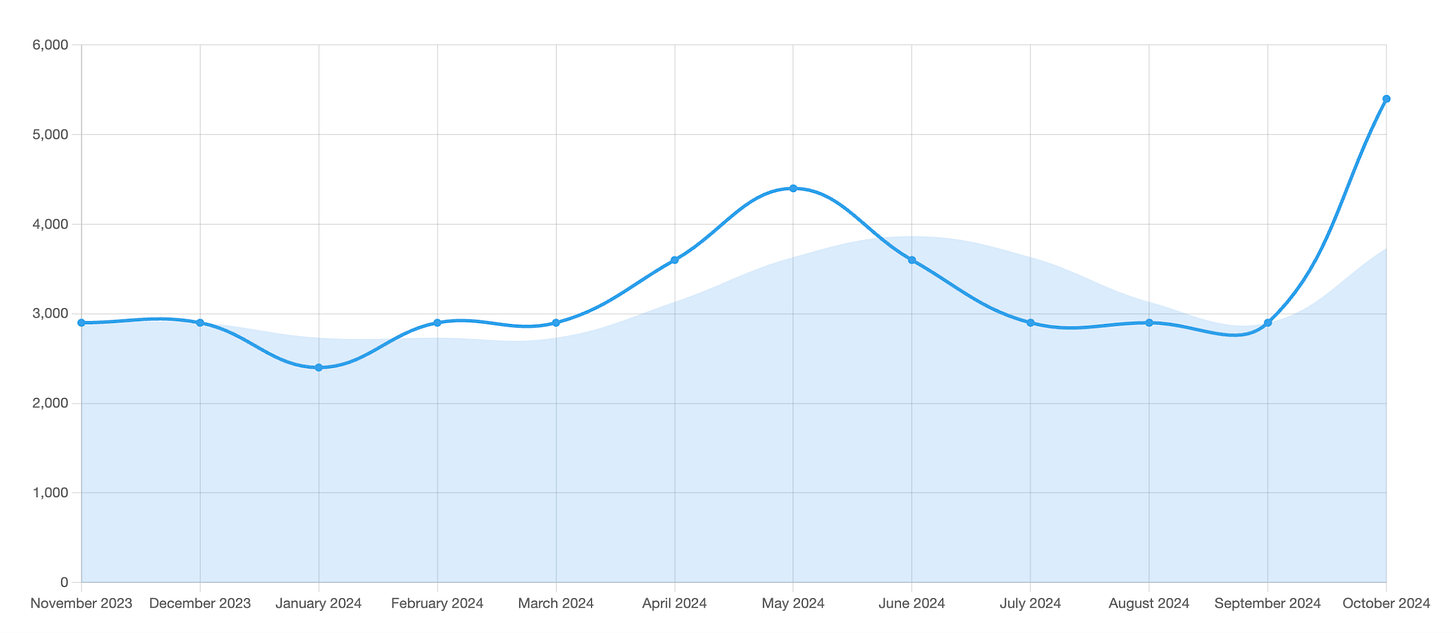

Meta’s Q3 2024 saw ad revenue surge 19% to $39.89 billion, driven by a 7% rise in ad impressions and an 11% increase in ad prices.

CEO Mark Zuckerberg attributes this growth to AI advancements, which improved ad targeting and relevance, driving advertisers to spend more due to increasing returns.

In short, one key trend to watch carefully next year has emerged:

Key Trend: Increased ad revenue driven by AI-powered enhancements in targeting and pricing.

Ad Revenue Growth: Ad revenue grew by 19% year-over-year, reaching $39.89 billion in Q3 2024.

Ad Impressions Growth: The number of ad impressions rose by 7%, pointing to higher engagement across Meta’s platforms.

Ad Pricing Increase Driven by Better Targeting: The average price per ad increased by 11%, reflecting better monetization and targeting.

AI’s Role: CEO Mark Zuckerberg highlighted that AI advancements have enhanced ad relevance and delivery, which contributed to the increase in ad revenue.
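As a quick sanity check on the numbers above, the two growth drivers can be combined in a few lines of Python. This is a back-of-the-envelope sketch, assuming ad revenue is roughly impressions multiplied by the average price per ad, and using the rounded percentages reported above. The function name `combined_growth` is just illustrative.

```python
def combined_growth(impression_growth: float, price_growth: float) -> float:
    """Revenue growth implied by growth in ad volume and in price per ad.

    Assumes revenue = impressions x average price, so the two growth
    rates compound rather than simply add up.
    """
    return (1 + impression_growth) * (1 + price_growth) - 1


# Meta's reported Q3 2024 drivers: +7% ad impressions, +11% price per ad.
meta_q3 = combined_growth(0.07, 0.11)
print(f"{meta_q3:.1%}")  # prints 18.8%
```

The result, about 18.8%, is consistent with the 19% ad revenue growth reported, and it shows that price per ad contributed more to the increase than ad volume did.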

In short, both Google and Meta could add a few hundred billion dollars to their market caps in the next 2-3 years simply by integrating AI as an additional layer within their ad platforms.

That is why these companies are betting on AI within their advertising platforms.

And they are not alone...

TikTok AI Ads Strategy

TikTok has also launched a set of tools to enable AI within its advertising ecosystem.