AI Business Models Book

“This is not a race against the machines. If we race against them, we lose. This is a race with the machines. You’ll be paid in the future based on how well you work with robots. Ninety percent of your coworkers will be unseen machines.”

This is what Kevin Kelly said in The Inevitable, published in 2016. These words seem spot-on for understanding the current AI revolution!

This is all discussed in the AI Business Models book. To get access, subscribe to the premium paid plan!

How did we get here?

The technological paradigm that brought us here rests on a few key concepts, which enabled AI to move from very narrow to much more generalized.

And it all starts with unsupervised learning.

Indeed, ChatGPT was built on OpenAI's GPT-3 line of models (GPT stands for Generative Pre-trained Transformer), with an essential layer on top (the approach introduced with InstructGPT) that used a human-in-the-loop process to smooth some of the critical drawbacks of a large language model (hallucination, factual errors, and bias).

The starting point is GPT-3, a large language model developed by OpenAI that uses the Transformer architecture and is the precursor of ChatGPT.

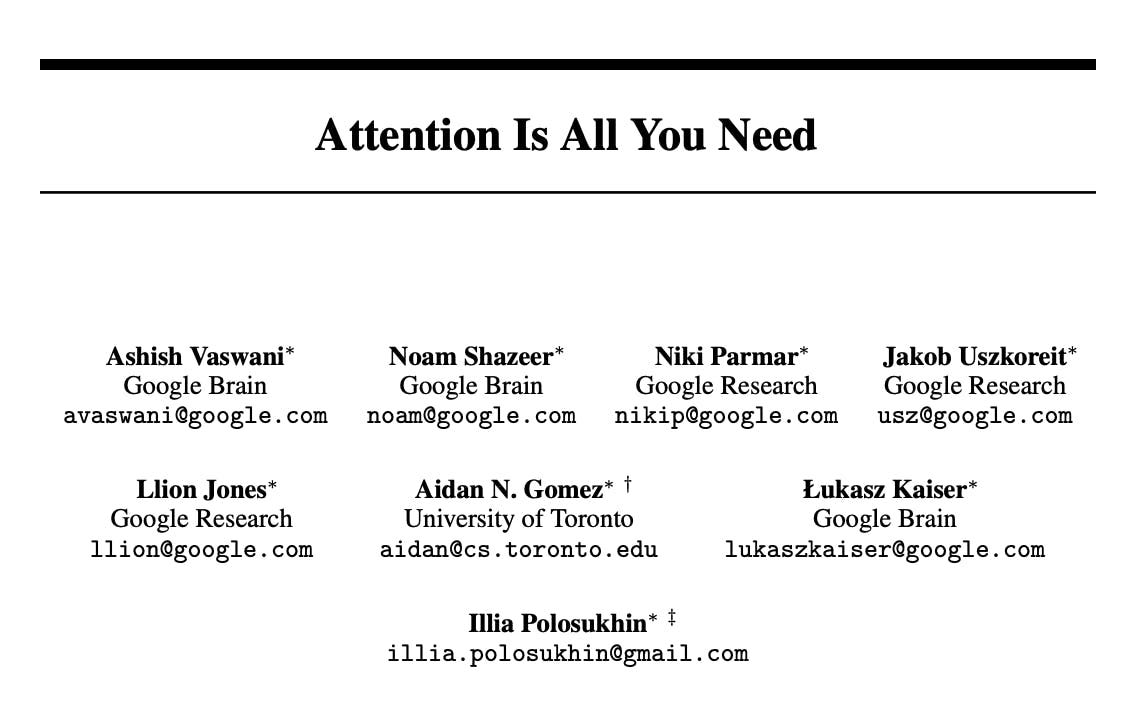

As you'll see in the Business Architecture of AI, the turning point for the GPT models was the Transformer architecture (a neural network designed specifically for processing sequential data, such as text).

Thus, a good chunk of what made ChatGPT incredibly effective is a kind of architecture called "Transformer," which was developed by researchers at Google Brain and Google Research.

The key thing to understand is that information on the web is moving away from a crawl, index, and rank model toward a pre-train, fine-tune, prompt, and in-context learning model!

As I have explained over and over again in this newsletter, this could in turn make traditional paradigms (like search) obsolete within a few years.

In short, we're moving from search/discovery to generative.
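To make the "prompt and in-context learn" step more concrete, here is a minimal sketch of how a task can be described to a model purely through examples placed in the prompt. The function name, the `Input:`/`Output:` layout, and the sample reviews are illustrative assumptions, not a format prescribed by any specific model or API.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a prompt that demonstrates a task through in-context examples.

    Hypothetical helper: the Input/Output layout is one common convention,
    not a requirement of any particular model.
    """
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final example is left open so the model completes it.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("Great battery life.", "positive"),
        ("Stopped working after a week.", "negative"),
    ],
    "The screen is gorgeous.",
)
print(prompt)
```

Nothing in the model's weights changes here: the examples only live in the prompt, which is what distinguishes in-context learning from fine-tuning.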

But what does the AI ecosystem look like?

What does the ecosystem look like?

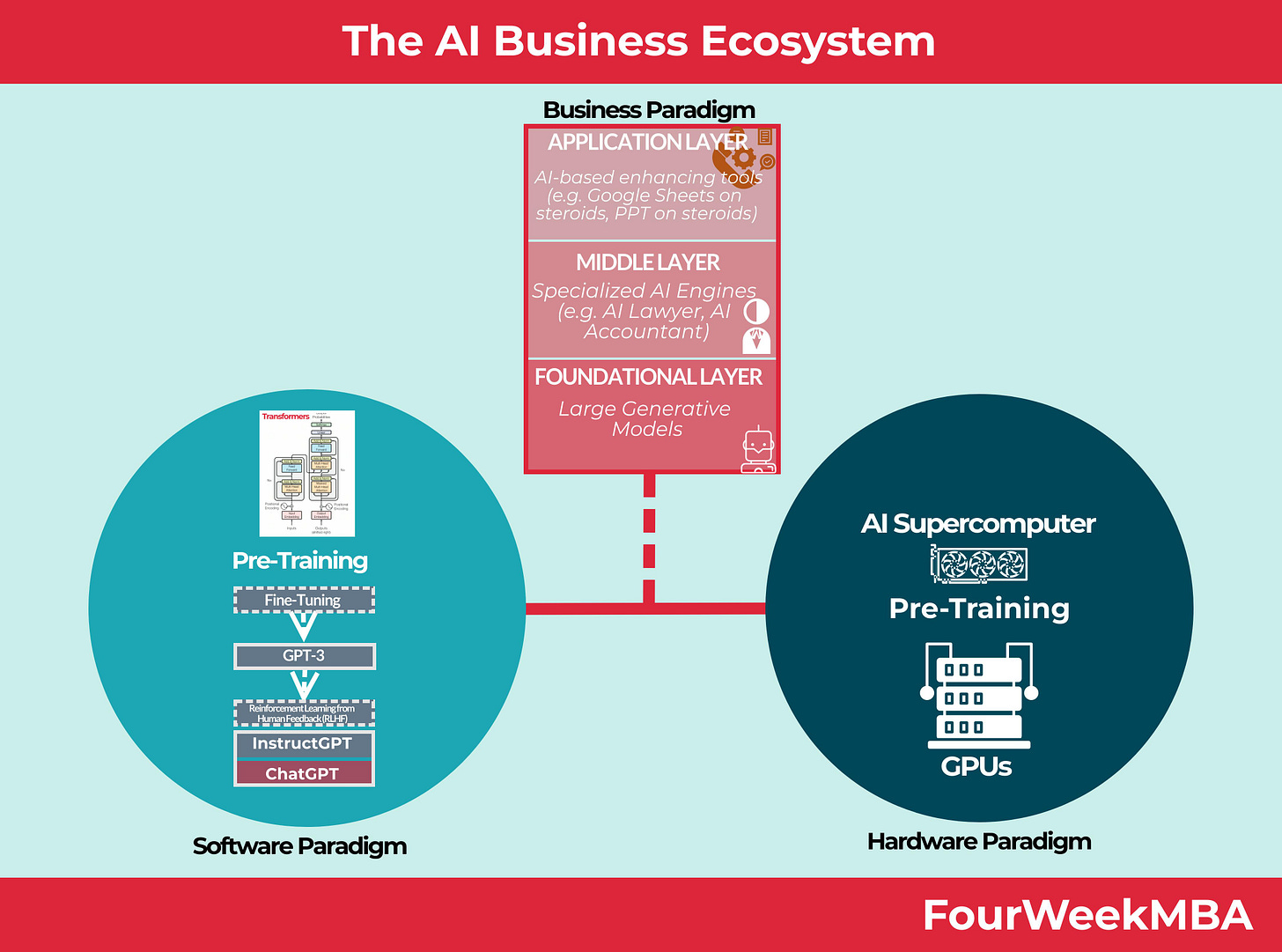

Software: we moved from narrow and constrained to general and open-ended (the most powerful analogy at the consumer level is from search to conversational interfaces).

Hardware: we moved from CPUs to GPUs, powering up the current AI revolution.

Business/Consumer: the software industry is getting 10x better as we speak, simply by integrating OpenAI's API endpoints into existing software applications. Code is getting way cheaper, and barriers to entering the already competitive software industry are getting much, much lower. At the consumer level, first millions, and now hundreds of millions of consumers across the world are getting used to a different way to consume content online, which can be summarized as the move from indexed/static/non-personalized content to generative/dynamic/hyper-personalized experiences.

Where are we now? And what's next?

In late December 2022, a month after ChatGPT had come out, I explained the AI ecosystem via the three layers of AI theory.

This is an effective mental model to understand the development of the AI industry in the coming 5-10 years.

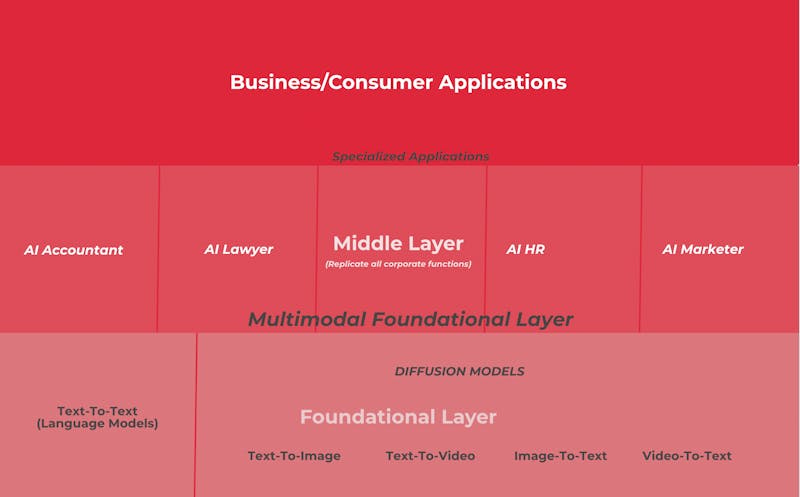

The foundational layer

That might comprise general-purpose engines like GPT-3, DALL-E, Stable Diffusion, etc.

This layer might have the following key features:

General Purpose: it will be built to provide generalized solutions that can be adapted to many specific needs.

This layer might be mostly a B2B/Enterprise layer, powering many businesses.

Just like AWS, which in the 2010s powered the applications of Web 2.0 (Netflix, Slack, Uber, and many others).

The AI foundational layer (still based on centralized cloud infrastructures) might power up the next wave of consumer applications.

A commercial Cambrian explosion is happening as we speak.

Multimodal: these general-purpose engines will be multimodal.

They might be able to handle any interaction, be it text-to-text, text-to-image, text-to-video, and vice versa.

Thus, it might move in two directions.

On the one hand, the UX might be primarily driven by natural language instructions.

On the other hand, the AI built into the plethora of tools on the web will be able to read, classify, and learn patterns from all available formats.

This two-way system might drive the next evolution of foundational models into general-purpose engines able to do many things.

Natural Language Interface: the primary interface for those general-purpose engines might be natural language.

Today, this is expressed as a prompt (or a natural language instruction).

Prompting might remain a key feature of the foundational layer. It might, however, disappear in the app layer, where those AI engines might primarily work as push-based discovery engines (the AI will serve what it thinks is relevant to users).

Real-time: these engines might adapt in real-time, with the ability to read patterns as we navigate the real world.

A middle layer

That might comprise vertical engines (imagine your AI Lawyer, AI Accountant, AI HR Assistant, or AI Marketer).

This middle layer might be built on the foundational layer and can become great at particular tasks, possibly in combination with other "middle layer" engines.

This middle layer might:

Replicate corporate functions: thus, a first step in this direction might be an AI that can replicate each relevant corporate function from accounting to HR, marketing, and sales. This middle layer will enhance a company, making it possible to run departments that combine humans and machines.

Data moats: differentiation might be built on top of data moats. This means that by continuously fine-tuning foundational layer engines to adapt them to middle layer functions, these specialized AIs will become relevant for specific tasks.

AI engines: these middle-layer players might also be able to add other engines on top of existing foundational layers by creating specific data pipelines to train the models for specific tasks. The ability to adapt those models makes them more and more relevant to the specialized functions.

And the app layer

That might see the rise of many smaller and more specialized applications built on top of the middle layer.

These will evolve based on the following:

Network effects: scaling up the user base will be critical to building network effects.

Feedback loops: users' feedback loops might become critical to reinforce network effects.
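The way the three layers stack on top of each other can be sketched in a few lines. This is only an illustration under loud assumptions: `foundational_engine` is a stub rather than a real model, and the middle layer specializes it with a role prefix, whereas the text describes fine-tuning on proprietary data as the real source of specialization. All names are hypothetical.

```python
from typing import Callable

# An "engine" here is simply anything that maps a text request to a text reply.
Engine = Callable[[str], str]


def foundational_engine(prompt: str) -> str:
    """Stand-in for a general-purpose model (stub, no real model is called)."""
    return f"[model output for: {prompt}]"


def make_vertical_engine(base: Engine, role: str) -> Engine:
    """Middle layer: wrap the base engine for one corporate function.

    A role prefix is used as a placeholder for fine-tuning on domain data.
    """
    def vertical(task: str) -> str:
        return base(f"You are an AI {role}. {task}")
    return vertical


def contract_review_app(document: str, lawyer: Engine) -> str:
    """App layer: a narrow product built on a middle-layer engine."""
    return lawyer(f"List the risks in this contract: {document}")


ai_lawyer = make_vertical_engine(foundational_engine, "Lawyer")
result = contract_review_app("NDA draft", ai_lawyer)
print(result)
```

The app never talks to the foundational engine directly; it only knows the vertical engine it was given, which mirrors the idea of apps being built on top of the middle layer.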

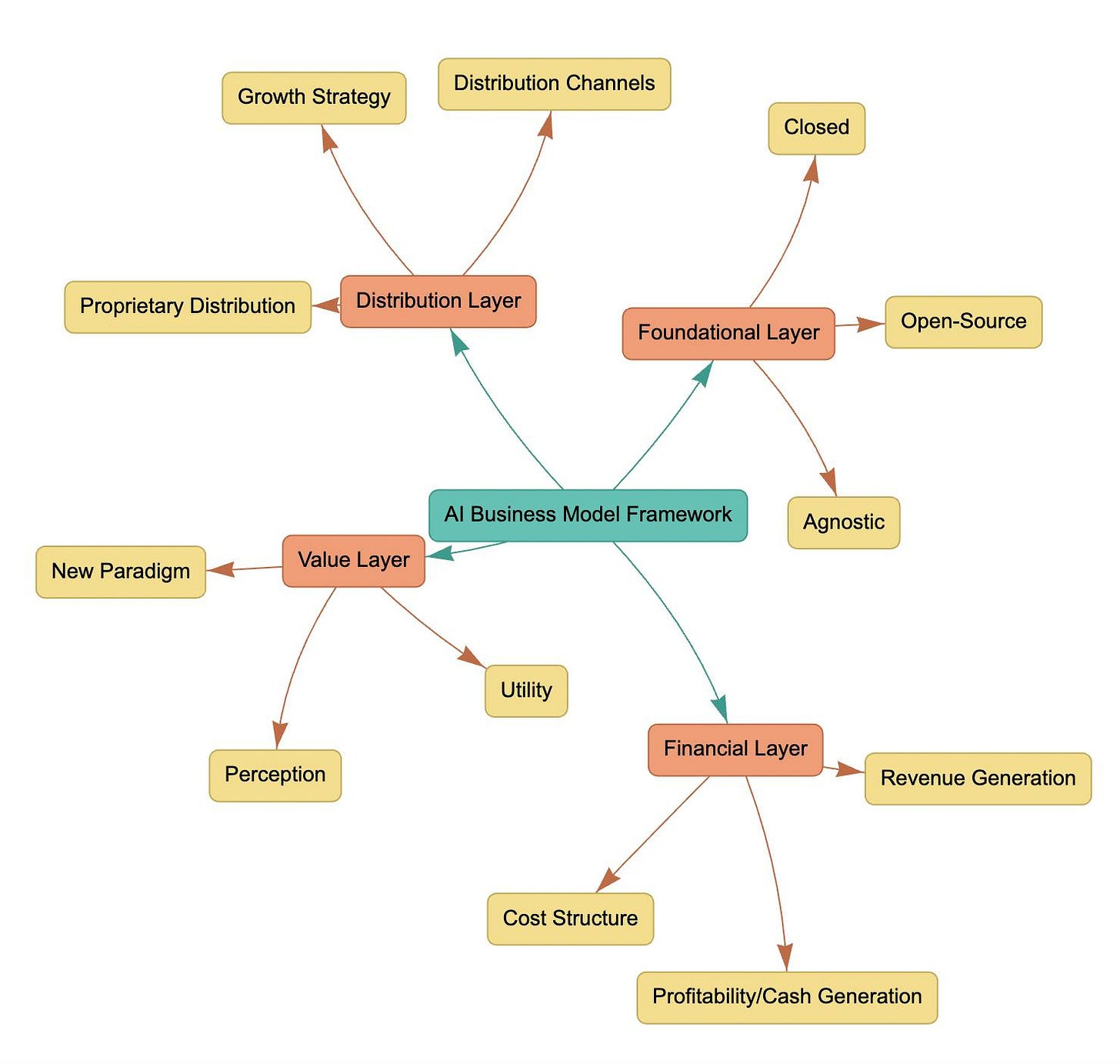

What makes up an AI business model?

I put together a framework, or mental model, to think about AI business models in a straightforward way, with a four-layered approach.

In it, AI becomes the connector between value and distribution, enhancing both.


Foundational Layer

What's the underlying technological paradigm of the business?

Open-Source: Utilizing a set of open-source generative AI models to enhance products.

Closed Source/Proprietary: Using closed-source generative AI models to enhance products.

Agnostic: Combining closed-source, open-source, or both generative AI models to enhance products via AI.

Value Layer

How does the underlying AI tech stack enhance value for the user/customer?

Perception: Changing the perception of the product through the underlying AI layer.

Utility: Significantly improving the product through the underlying AI layer.

New Paradigm: Transforming the current value paradigm through the underlying technological layer.

Distribution Layer

What key channels is the business leveraging, and how is the company building distribution into the product?

Growth Strategy: Combining technology and value to make products appealing to customers.

Distribution Channels: Leveraging various distribution channels to reach customers.

Proprietary Distribution: Utilizing proprietary distribution channels for product delivery.

Financial Layer

Can the company sustain its cost structure and generate enough profits and cash flows to sustain continuous innovation?

Revenue Generation: Generating revenue through AI-enhanced products.

Cost Structure: Assessing the cost structure of the AI business model.

Profitability: Evaluating profitability and cash flow of the AI business model.

Cash generation: Assessing the ability of the AI business to generate cash flow to sustain its continuous development.

AI Business Models Framework: Real World Case Studies

DeepMind (Acquired by Google)

Foundational Layer: Closed Source/Proprietary - DeepMind's algorithms are proprietary and highly specialized.

Value Layer: New Paradigm - At the forefront of AI research, especially in deep learning.

Distribution Layer: Proprietary Distribution - Google uses DeepMind's technology for various applications. DeepMind has since been reorganized within Google to compete in the generative AI race.

Financial Layer: Revenue Generation - DeepMind monetizes through collaborations and integrations within Google's services.

OpenAI

Foundational Layer: Open-Source - Initially, OpenAI provided various AI models openly. It later closed its algorithms as it became a for-profit organization, launching API access and tools like ChatGPT and DALL-E.

Value Layer: Utility - They offer powerful AI solutions that serve multiple industries.

Distribution Layer: Growth Strategy - API integration, consumer-facing business (ChatGPT), enterprise services (ChatGPT Enterprise), partnerships (Microsoft), developers' community.

Financial Layer: Freemium Model, Enterprise Model, API (Pay-as-you-go).

Tesla

Foundational Layer: Closed Source/Proprietary - Tesla's Autopilot system is proprietary.

Value Layer: Utility - Improving car safety and driving experience.

Distribution Layer: Proprietary Distribution - Tesla cars are the primary distribution channel.

Financial Layer: Profitability - Autopilot adds significant value to Tesla's cars, enhancing profitability.

ChatGPT (by OpenAI)

Foundational Layer: Open-Source - Built upon the GPT architecture, which was initially open.

Value Layer: Perception - Changing the way people interact with machines.

Distribution Layer: Growth Strategy - By offering a conversational AI.

Financial Layer: Revenue Generation - Subscription models for enhanced versions.

Neuralink

Foundational Layer: Closed Source/Proprietary - Their brain-machine interface technology is proprietary.

Value Layer: New Paradigm - Aiming to revolutionize human-computer interaction.

Distribution Layer: Proprietary Distribution - Directly through their medical devices.

Financial Layer: Revenue Generation - Through the potential sale and use of their devices.

NVIDIA

Foundational Layer: Closed Source/Proprietary - Their hardware and some software are proprietary.

Value Layer: Utility - Providing powerful GPUs essential for AI computations.

Distribution Layer: Proprietary Distribution - Direct sales and partnerships.

Financial Layer: Profitability - Sales of GPUs and AI-related hardware drive their profits.

Baidu

Foundational Layer: Closed Source/Proprietary - Their deep learning platform is proprietary.

Value Layer: New Paradigm - Leading AI research in China.

Distribution Layer: Proprietary Distribution - Integration within their services.

Financial Layer: Profitability - They drive revenue through AI-enhanced services.
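One way to work with these case studies is to encode each company as a record with one field per layer, then group companies by any layer to compare them. The sketch below is illustrative only: the `CaseStudy` class and `group_by` helper are hypothetical names, and the values are copied from a subset of the case studies above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseStudy:
    """One company described along the four layers of the framework."""
    company: str
    foundational: str
    value: str
    distribution: str
    financial: str


# Values taken from the case studies in the text (subset for brevity).
CASE_STUDIES = [
    CaseStudy("DeepMind", "Closed Source/Proprietary", "New Paradigm",
              "Proprietary Distribution", "Revenue Generation"),
    CaseStudy("Tesla", "Closed Source/Proprietary", "Utility",
              "Proprietary Distribution", "Profitability"),
    CaseStudy("ChatGPT", "Open-Source", "Perception",
              "Growth Strategy", "Revenue Generation"),
    CaseStudy("NVIDIA", "Closed Source/Proprietary", "Utility",
              "Proprietary Distribution", "Profitability"),
]


def group_by(layer, studies):
    """Group company names by their classification on the given layer."""
    groups = defaultdict(list)
    for study in studies:
        groups[getattr(study, layer)].append(study.company)
    return dict(groups)


by_value = group_by("value", CASE_STUDIES)
print(by_value)
```

Grouping by `"value"` puts Tesla and NVIDIA together under Utility, while grouping by `"foundational"` separates ChatGPT from the proprietary players, which makes it easy to see where companies cluster on each layer.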

Key Takeaways

Technological Paradigm Shift: The evolution of AI from narrow to generalized capabilities, with unsupervised learning being a fundamental starting point.

Transformer Architecture: Developed by Google researchers, this architecture, which is at the core of the GPT models, revolutionized text processing and paved the way for more effective AI systems like ChatGPT.

Shift in Information Processing: Moving away from traditional search-based models to pre-train, fine-tune, prompt, and in-context learning approaches, rendering old paradigms like search obsolete.

AI Ecosystem Evolution: Transitioning from narrow and constrained software to general and open-ended interfaces, powered by advancements in hardware (from CPUs to GPUs).

Consumer Experience Transformation: Shift from indexed/static/non-personalized content to generative/dynamic/hyper-personalized experiences, impacting millions of consumers worldwide.

Three Layers of AI Theory: A conceptual framework comprising foundational, middle, and app layers to understand the development trajectory of AI.

Foundational Layer: General-purpose engines like GPT-3, with features such as being multimodal, driven by natural language, and adapting in real-time.

Middle Layer: Composed of specialized vertical engines replicating corporate functions and building differentiation on data moats.

App Layer: Rise of specialized applications built on top of the middle layer, focusing on scaling up user base and utilizing feedback loops to create network effects.

What makes up an AI business model?

Foundational Layer: Utilizes open-source, closed-source, or a combination of generative AI models to enhance products.

Value Layer: Changes product perception, significantly improves utility, and introduces a new value paradigm through AI.

Distribution Layer: Combines technology and value, leverages various distribution channels, and utilizes proprietary distribution channels.

Financial Layer: Generates revenue, assesses cost structure, and measures profitability and cash flow.

Ciao!

With ♥️ Gennaro, FourWeekMBA

You can request access to the book if you subscribed to the premium paid newsletter!