Microsoft's Frontier AI Dilemma

This analysis examines how Microsoft is navigating this dilemma through a strategic pivot from “OpenAI exclusivity as competitive moat” to “infrastructure for all AI players,” a transformation that could determine whether the company captures the full value of the AI revolution or merely participates in it.

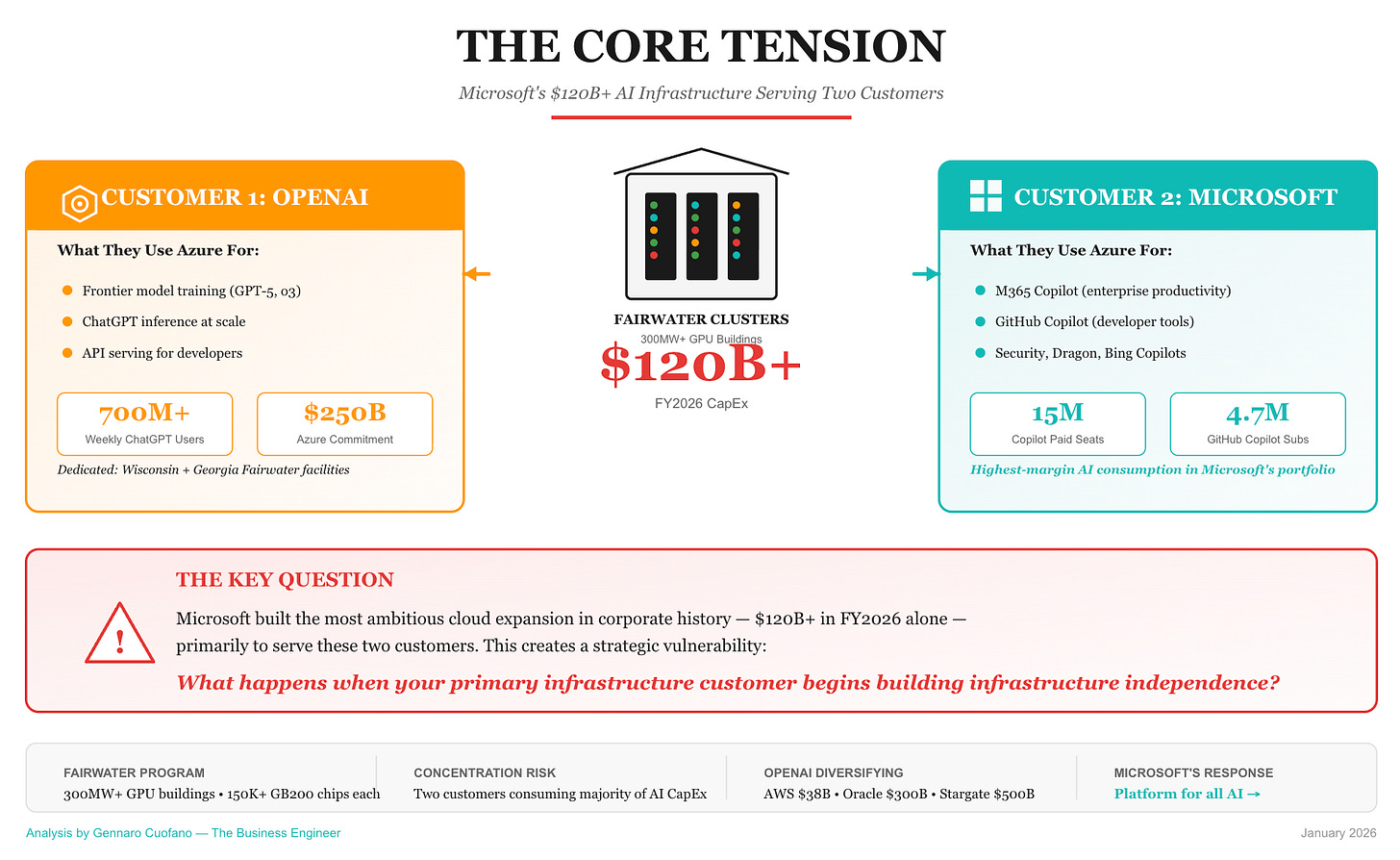

The Core Tension

Microsoft’s AI infrastructure story contains an uncomfortable truth: the company has built the most ambitious cloud expansion in corporate history—with FY2026 CapEx exceeding $120 billion—primarily to serve two customers:

OpenAI — Training frontier models and running inference for ChatGPT’s 700+ million weekly users

Microsoft itself — Powering M365 Copilot (15M seats), GitHub Copilot (4.7M subscribers), and the broader Copilot ecosystem

The “Fairwater” datacenter program illustrates this concentration. Microsoft simultaneously built two of the largest datacenters ever constructed, each featuring 300MW+ GPU buildings capable of housing 150,000+ NVIDIA GB200 chips. One, in Wisconsin, serves OpenAI exclusively; its sister facility in Georgia does the same.

This creates a strategic vulnerability that Microsoft’s leadership has clearly recognized: what happens when your primary infrastructure customer begins pursuing infrastructure independence?