NVIDIA's Cognitive AI Revolution

In a single session at CES 2025, NVIDIA introduced a set of groundbreaking innovations, all following a single trajectory.

Jensen Huang also announced loudly what many of us in the industry have been thinking:

The ChatGPT moment for general robotics is just around the corner!

Let’s bring some order to the chaos, though.

While it is easy to get confused by everything NVIDIA announced, the company is following a single innovation thread, which makes it quite clear where NVIDIA thinks we’re going next.

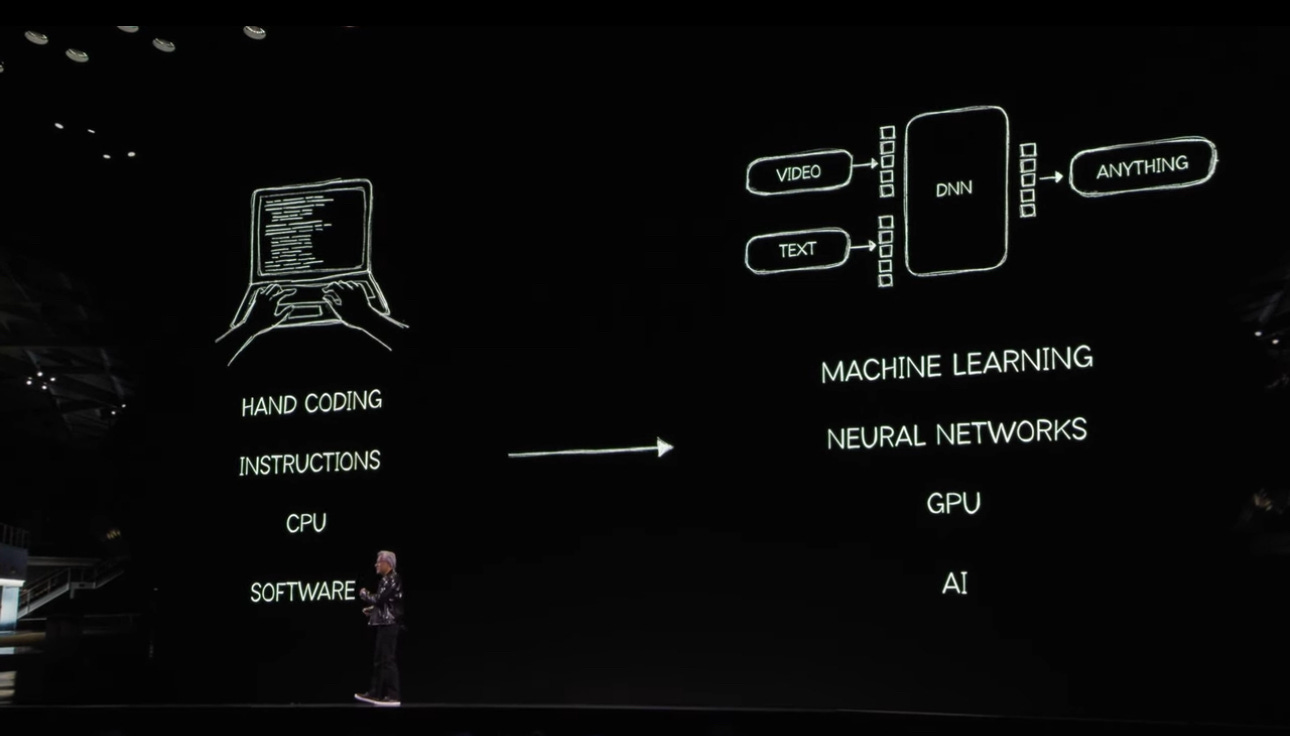

Jensen Huang highlighted the path that took us to where we are today, from his company’s perspective.

AI as a computing revolution

As I’ve explained in The Web²: The AI Supercycle, Jensen Huang has made clear that what we’re living through is a computing revolution that will first enable AI to be embedded anywhere, then create something completely new.

As I’ve explained, this journey might well take anywhere between 30 and 50 years.

Yet, it’s also worth remembering that it took us nearly 30 years to get to where we are.

A 30-year journey

Jensen Huang reminded the audience what it took for NVIDIA to realize part of its vision, and it was a nearly 30-year journey:

Introduction of the first GPU in 1999.

CUDA's invention in 2006 enabled extensive AI and computational advancements.

The arrival of Transformers in 2018, which revolutionized AI and computing.

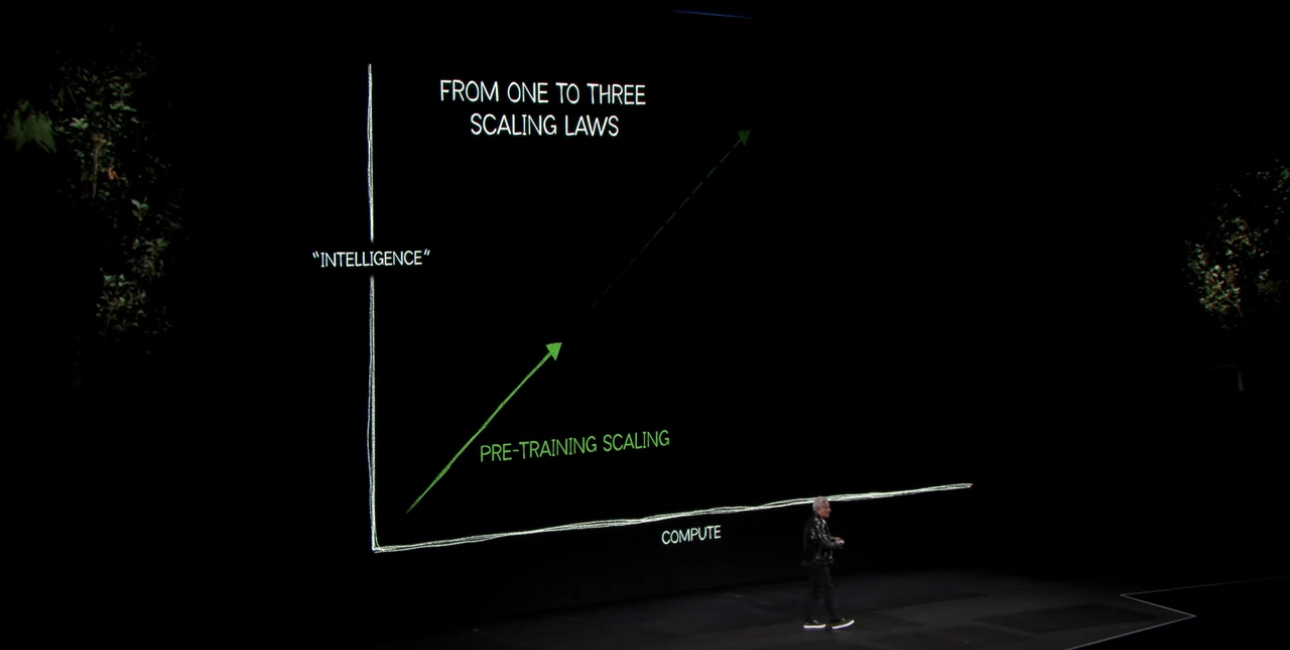

As the transformer arrived, it became clear that we were witnessing a “scaling phenomenon” driven mainly by scaling laws.

As Jensen Huang highlighted, the interesting point is that these scaling laws initially played out on the pre-training side and then moved to other parts of the AI ecosystem.

Scaling laws are enabling the whole cognitive revolution

I’ve explained this in detail in The Web²: The AI Supercycle:

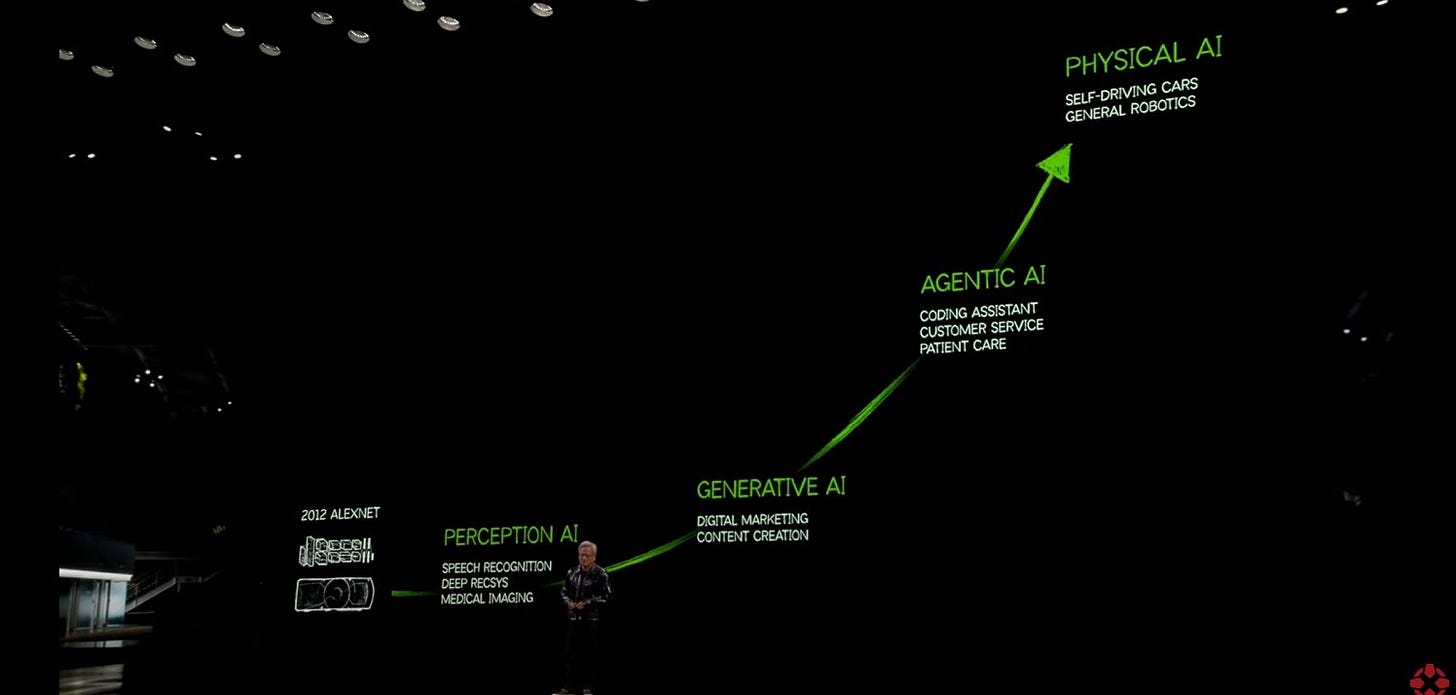

In short, as Jensen Huang also highlighted, we have lived through three phases of AI technological development in the last few years.

From 2018 to 2023, progress was chiefly about pre-training, which rested on three things:

More data.

More compute.

Better algorithms.

This paradigm told us that the more we scaled compute, the more intelligence we would get.

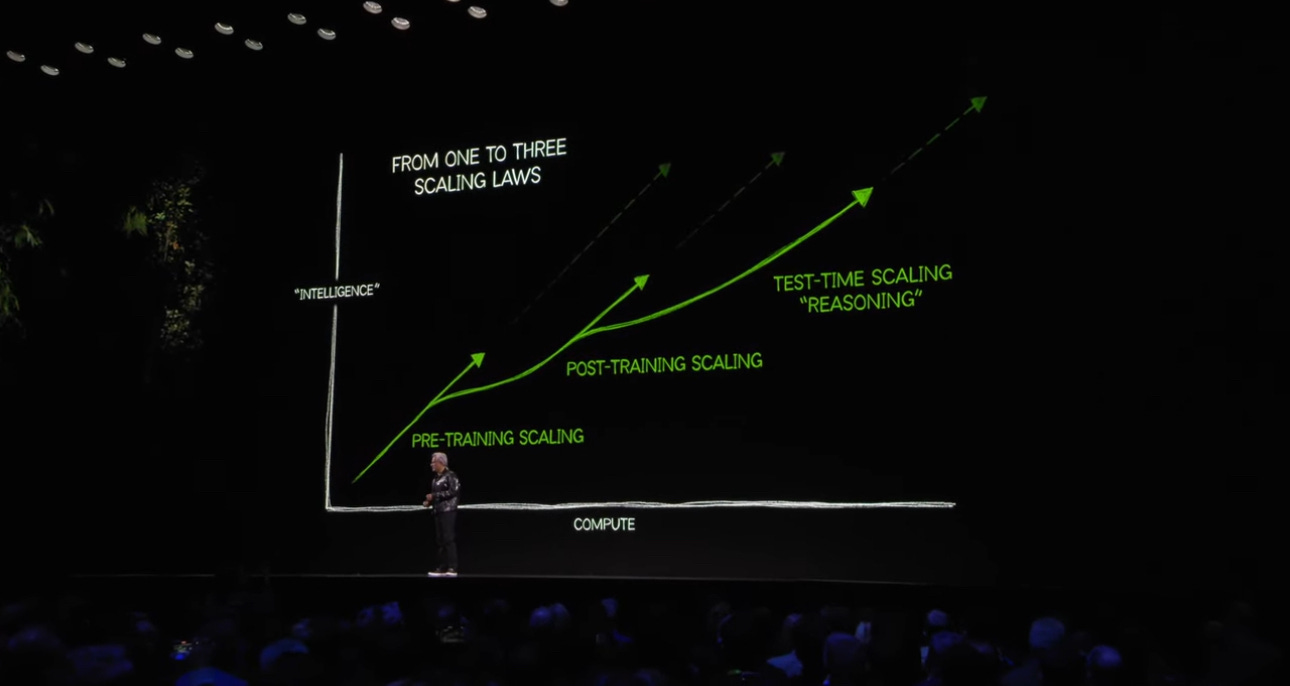

As we went into 2024, the pre-training scaling paradigm was complemented by two other technical scaling paradigms:

Post-Training Scaling.

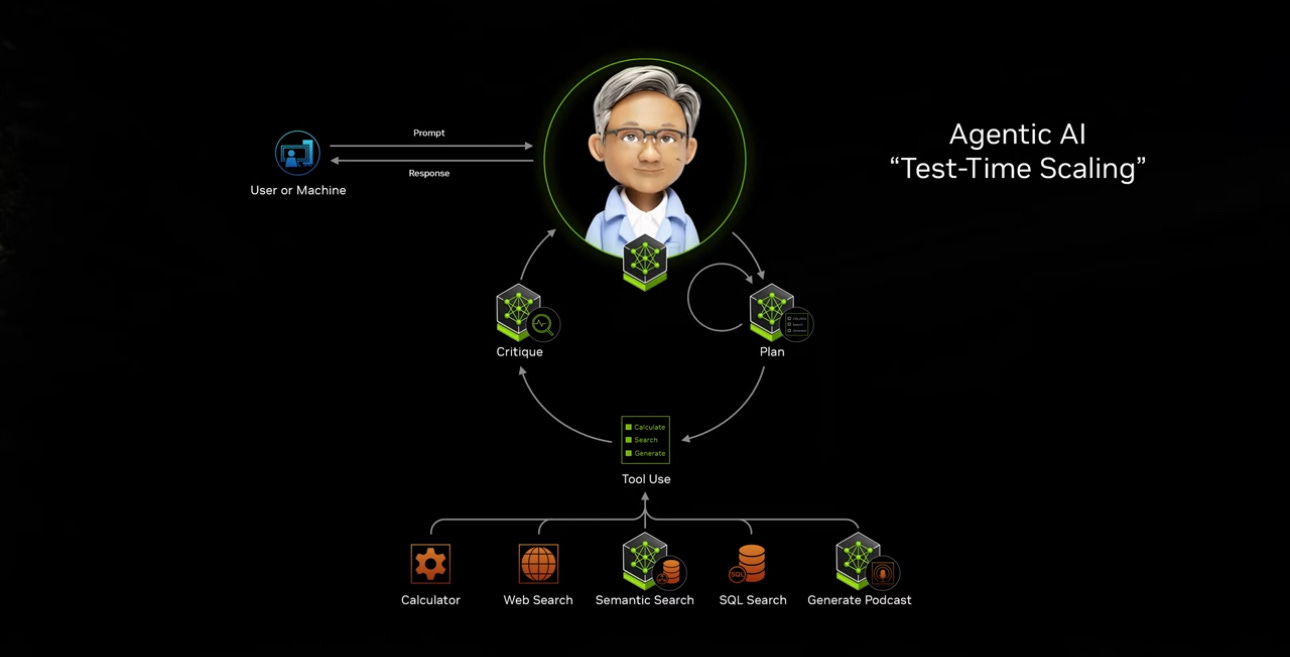

And Test-Time Scaling (primarily via “Reasoning”).

Thus, we can summarize the scaling laws as per these three dimensions:

Training Scaling Law: More data and computation yield better AI models.

Post-training Scaling Law: Techniques like reinforcement learning enhance AI capabilities.

Test-time Scaling Law: More computation during AI inference yields more accurate results.

We’re at the point where the third scaling law (test-time scaling) might deliver the biggest gains in the coming years.

And yet, these will keep working in parallel, and we might figure out some new scaling laws in the next few years.
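To make the test-time scaling idea concrete, here is a minimal sketch of one simple way to spend more compute at inference: best-of-N sampling. It is a toy under stated assumptions, not how NVIDIA or any specific model implements reasoning. The `generate_candidate` function is a hypothetical stand-in for a model call, and the "quality" score it returns is just a random number.

```python
import random


def generate_candidate(rng: random.Random) -> float:
    """Hypothetical stand-in for sampling one model answer.

    Returns a made-up quality score in [0, 1); a real system would
    generate an answer and score it with a verifier or reward model.
    """
    return rng.random()


def best_of_n(n: int, seed: int = 0) -> float:
    """Sample n candidates and keep the best-scoring one.

    More samples means more inference compute. With a fixed seed the
    first candidates are shared across runs, so the best score can
    only stay the same or improve as n grows.
    """
    rng = random.Random(seed)
    return max(generate_candidate(rng) for _ in range(n))


if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        print(f"n={n:>2}  best quality={best_of_n(n):.3f}")
```

The point of the sketch is the trade-off: the model itself is unchanged, and the only thing that grows is the compute spent per query.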

What’s coming next?

Jensen Huang made clear that we’ve already seen two of the three core phases that will enable the next steps of AI development.

Indeed, while we’ve been going through the Generative AI phase, and we’re just now scratching the surface of Agentic AI, Jensen Huang highlighted the next critical phase: Physical AIs.

Let’s see how NVIDIA is tackling all of them.

The Web² as the upcoming phase

In the Web² framework, I’ve highlighted how the first phase for AI will be its integration into web-native industries.

That’s what NVIDIA is betting on in its Generative AI offering.

The company offers the infrastructure to integrate AI into digital marketing and content development.

This is only the first step in integrating AI into the consumer, B2B, and enterprise applications of the industries that already exist on the Web.

For that, NVIDIA is upping its game in AI GPU infrastructure with its Blackwell architecture:

The launch of the RTX Blackwell family is meant to lift the whole tech stack, potentially bringing significant gains in energy efficiency and performance.

This enables a single massive commercial use case: digital workers.

Digital Workers and Agentic AI for full Web² integration

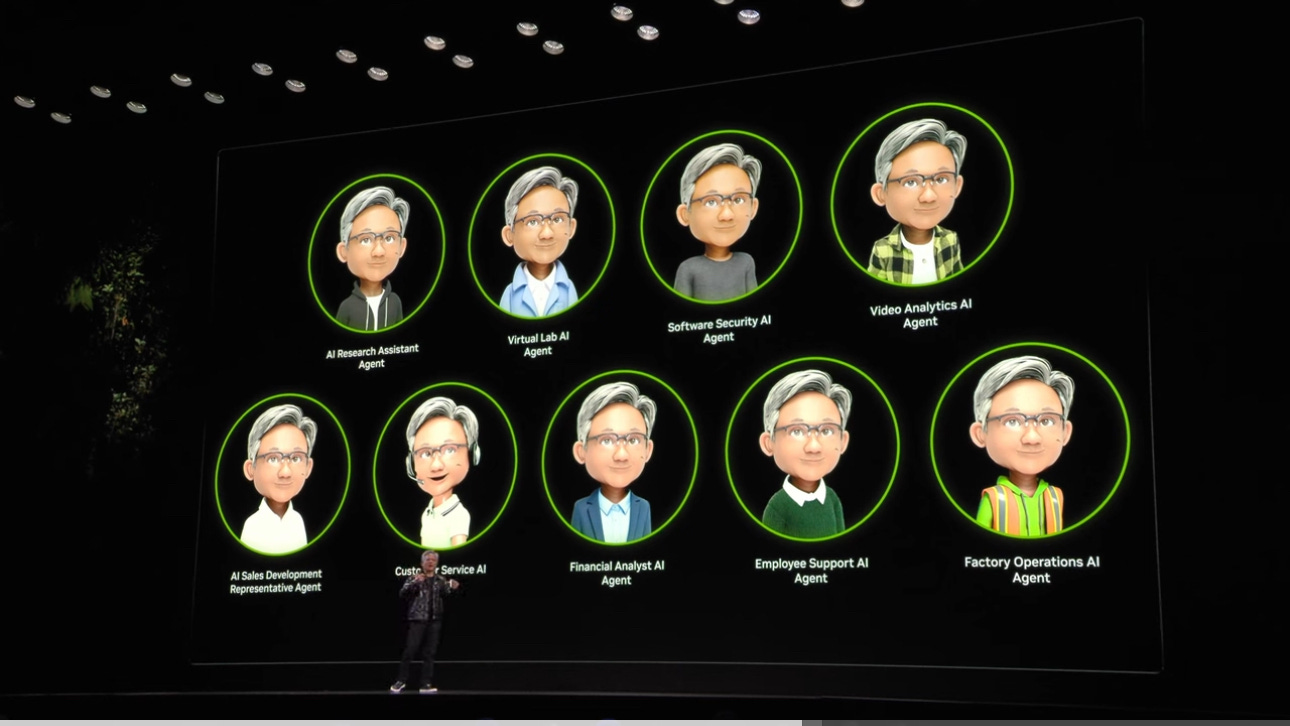

Digital workers are AI-powered agents designed to function as virtual employees, performing specific tasks, collaborating with humans, and supporting organizational processes.

Unlike traditional automation tools, digital workers combine the flexibility of AI with domain-specific capabilities, making them adaptable to a wide range of applications.

What are the core features that make them up?

Autonomous Task Execution:

Digital workers operate independently to complete repetitive or knowledge-intensive tasks, reducing human intervention.

Examples include data entry, report generation, customer support, and real-time monitoring.

Domain-Specific Expertise: These agents are trained or fine-tuned to understand specific industries, processes, or organizational needs, enabling them to provide highly relevant outputs.

Integration with Human Workflows:

Digital workers collaborate with human colleagues by augmenting decision-making, handling routine tasks, or managing workloads.

They seamlessly integrate with existing IT ecosystems and software tools.

Continuous Learning: They improve through post-training feedback mechanisms, reinforcement learning, and adaptive updates, ensuring alignment with evolving business goals.

Scalability: Unlike human employees, digital workers can be replicated or scaled effortlessly to handle surges in demand or new tasks.

How is NVIDIA advancing the digital worker ecosystem?

NVIDIA NeMo:

A framework for onboarding and training AI agents, ensuring they align with an organization's processes, language, and objectives.

Digital workers are "onboarded" like human employees, learning company-specific workflows and terminology.

NVIDIA NIM:

A suite of pre-built AI microservices that integrate capabilities like speech recognition, language understanding, and computer vision into digital workers.

AI Blueprint Models:

Open-source blueprints provide developers with a foundation to create custom digital workers tailored to specific business needs.

This will create three core trends:

Workforce Augmentation: Digital workers are not replacing humans but augmenting them, taking over mundane tasks and enabling humans to focus on creative and strategic work.

AI-Driven HR: IT departments will evolve to manage digital agents as part of the workforce, much like HR departments manage human employees.

Trillion-Dollar Opportunity: With over a billion knowledge workers globally, adopting digital workers represents one of AI's most significant growth opportunities.

The whole ecosystem is developing around an entire tech stack made of dozens of AI-native startups, each operating in a different part of the stack.

Physical AI and beyond Web²

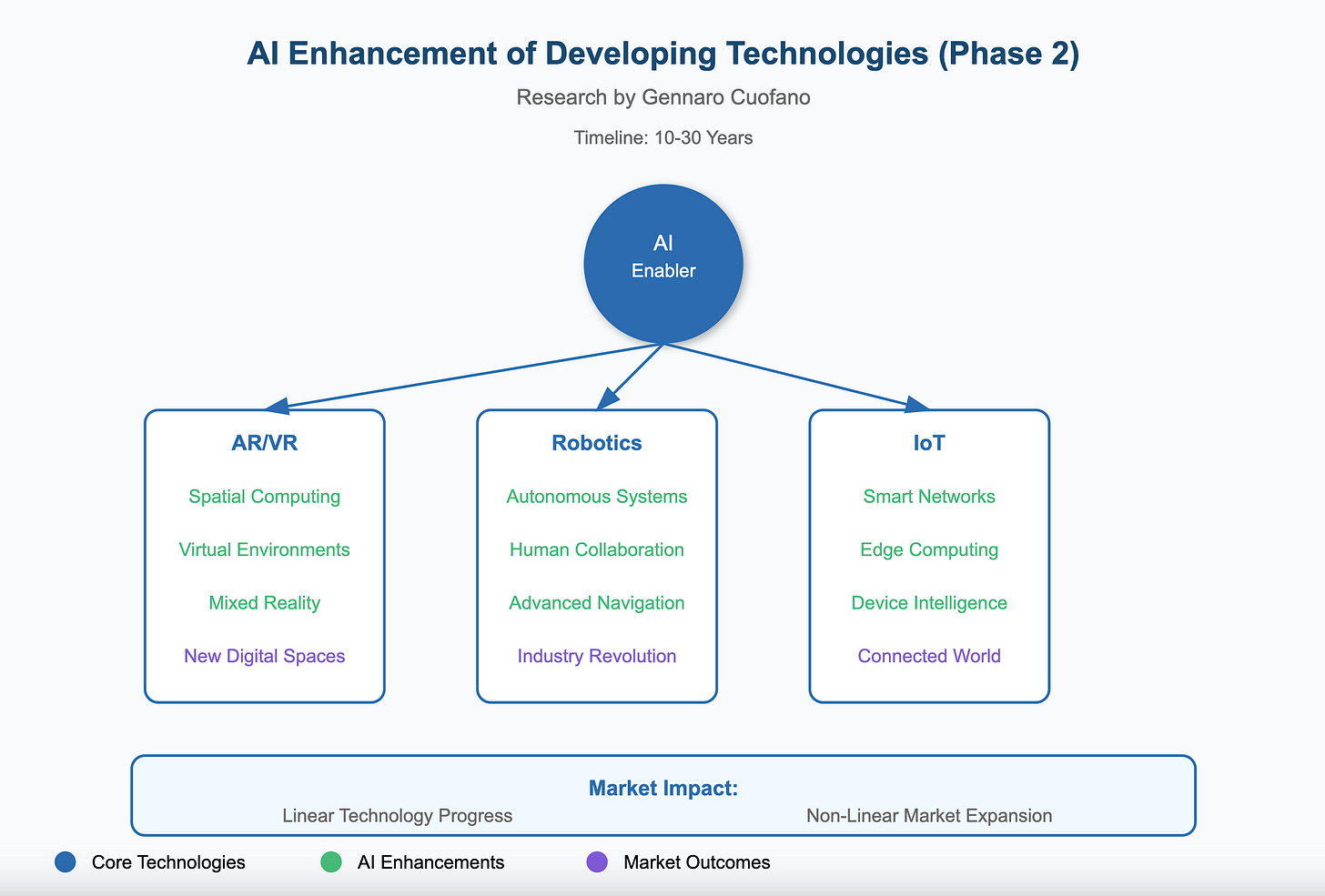

Physical AI represents the convergence of artificial intelligence with the physical world, enabling machines to perceive, understand, and interact with their surroundings in a way that mirrors human intuition.

Unlike traditional AI models, which primarily process data in text, image, or numerical formats, Physical AI focuses on integrating knowledge of physical laws, dynamics, and spatial relationships.

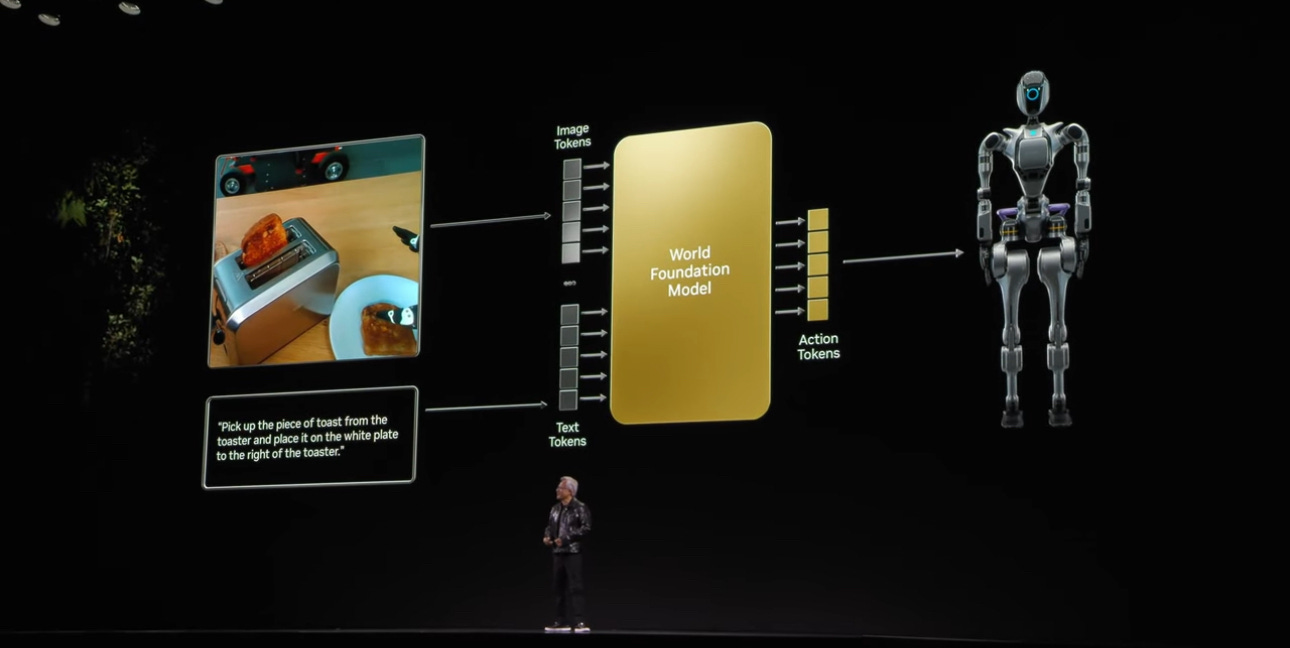

The foundation of Physical AI is World Models that simulate and understand the physical dynamics of objects and environments.

These models are designed to:

Recognize gravity, friction, and inertia.

Understand geometric and spatial relationships.

Incorporate cause-and-effect reasoning.

Handle object permanence (e.g., knowing an object remains present even when out of sight).

To get there, NVIDIA highlighted that we’ll need the following shifts:

Action Tokens: Instead of generating text-based outputs like language models, Physical AI produces "action tokens"—instructions that result in physical actions, such as moving an object or navigating a space.

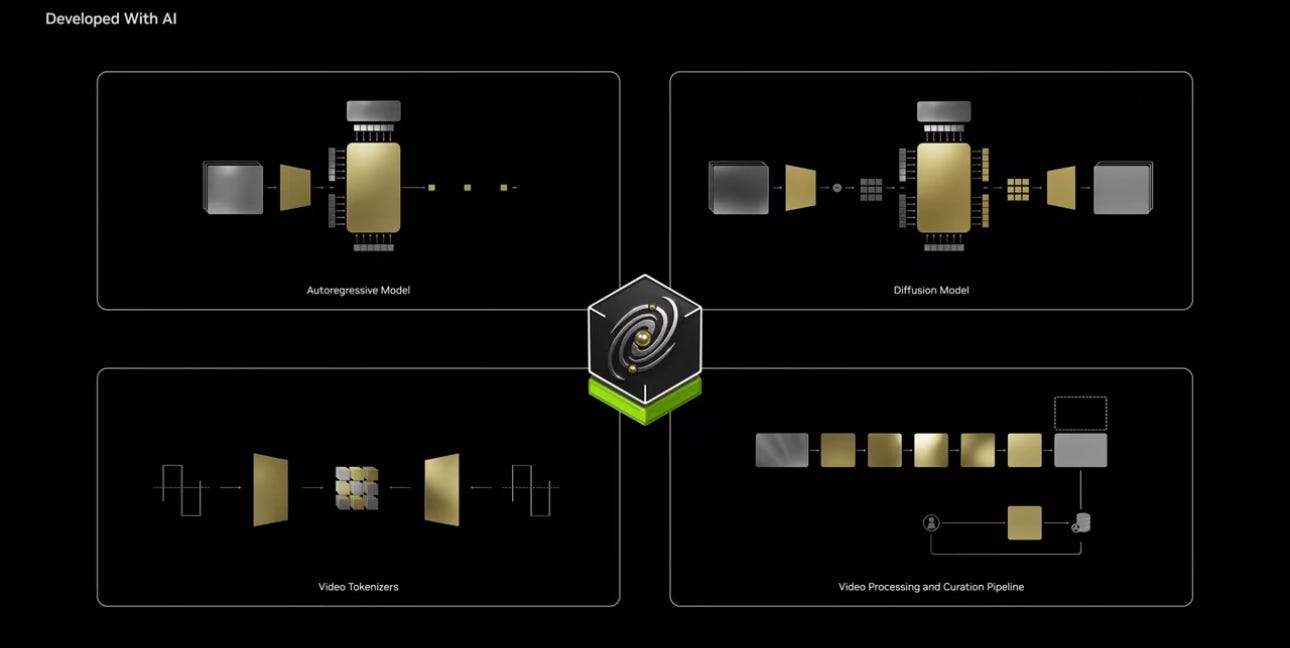

Foundation Models for the Physical World: Platforms like NVIDIA Cosmos aim to provide a framework for developing world models. These models are capable of:

Ingesting multimodal inputs like text, images, and videos.

Generating virtual world states as video outputs.

Supporting applications in autonomous vehicles, robotics, and real-world simulations.

Simulation and Real-world Data: Physical AI development requires high-fidelity simulations and extensive real-world data. Simulated environments allow for scalable, low-cost training, while real-world data ensures practical applicability.
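To illustrate the "action tokens" idea mentioned above, here is a minimal sketch of one common way to turn continuous robot commands into discrete tokens: uniform binning. This is an illustrative assumption, not a description of how NVIDIA Cosmos or any NVIDIA model encodes actions. The bin count, the normalized range, and the function names are all hypothetical.

```python
from typing import List

NUM_BINS = 256          # hypothetical token vocabulary size per action dimension
LOW, HIGH = -1.0, 1.0   # assumed normalized range of each action value


def encode_action(action: List[float]) -> List[int]:
    """Map each continuous action value to a discrete token id in [0, NUM_BINS - 1]."""
    tokens = []
    for value in action:
        clipped = min(max(value, LOW), HIGH)           # keep value inside the range
        fraction = (clipped - LOW) / (HIGH - LOW)      # rescale to [0, 1]
        tokens.append(min(int(fraction * NUM_BINS), NUM_BINS - 1))
    return tokens


def decode_action(tokens: List[int]) -> List[float]:
    """Map token ids back to the center of their bin (an approximation of the input)."""
    width = (HIGH - LOW) / NUM_BINS
    return [LOW + (token + 0.5) * width for token in tokens]


if __name__ == "__main__":
    command = [0.25, -0.8, 0.0]   # e.g. a made-up 3-value motion command
    tokens = encode_action(command)
    print(tokens, decode_action(tokens))
```

Once actions are tokens, a model can emit them one at a time the same way a language model emits words, and a controller decodes them back into motion, at the cost of a small rounding error per value.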

What applications will this primarily enable?

Autonomous Robots: Robots equipped with physical AI can understand their environments and perform tasks such as object manipulation, navigation, and complex assembly.

Autonomous Vehicles (AVs): Physical AI enables vehicles to predict and respond to road conditions, simulate scenarios, and improve safety in real-world operations.

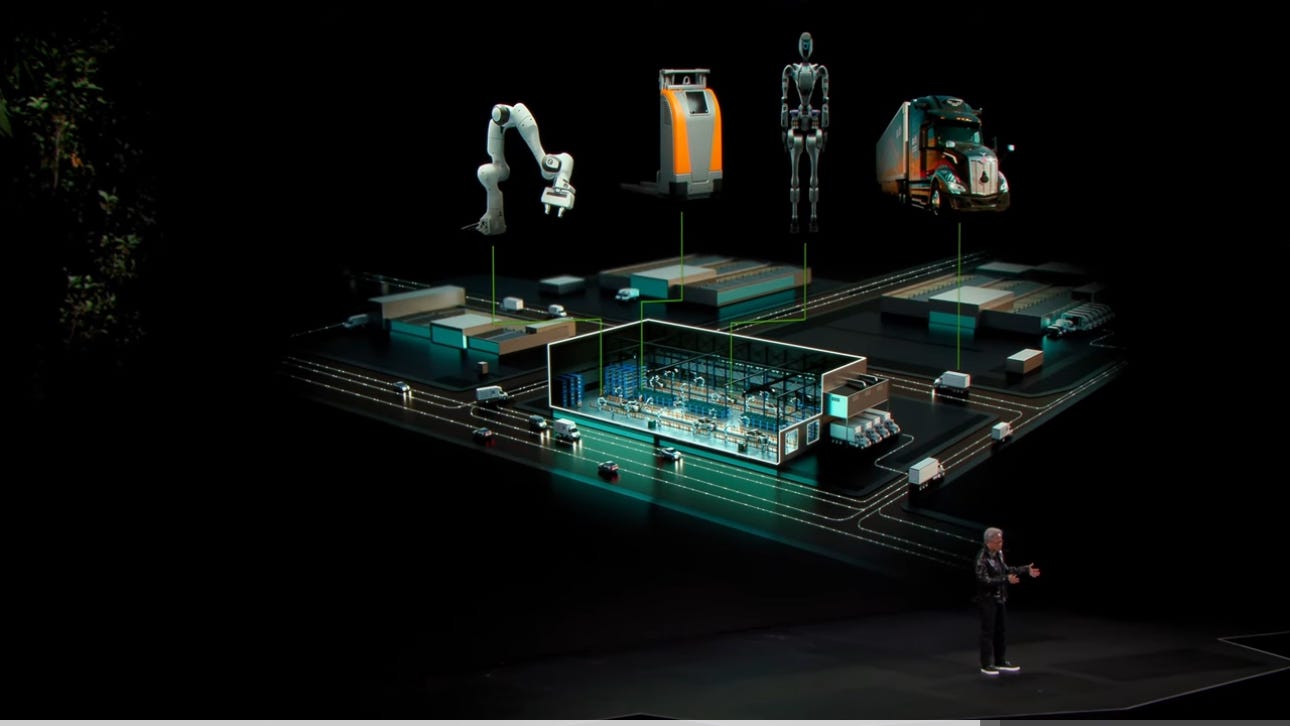

Industrial Automation: In manufacturing and logistics, Physical AI helps optimize workflows, manage dynamic environments, and reduce human intervention.

Industrial digitalization

The first integration here will be the wholesale redefinition of manufacturing processes via AI:

Industrial digitalization transforms traditional industrial processes and operations through advanced technologies like artificial intelligence (AI), the Internet of Things (IoT), robotics, and digital twins.

It enables industries to improve efficiency, reduce costs, enhance safety, and unlock new productivity levels by integrating smart systems into their workflows.

Autonomous driving

Autonomous driving is one of the most advanced applications of AI, combining machine learning, computer vision, and robotics to enable vehicles to navigate and operate without human intervention.

The goal is to create safer, more efficient, and more convenient transportation systems.

How is NVIDIA contributing to the ecosystem development there?

NVIDIA DRIVE Platform:

An end-to-end platform for developing, testing, and deploying AVs.

Combines GPUs, deep learning, and simulation to accelerate AV development.

AI-Powered Perception: NVIDIA's AI frameworks power real-time object detection, scene understanding, and environmental mapping.

Omniverse for Simulation: Provides a virtual testing environment to simulate millions of miles of driving scenarios, reducing the need for physical road tests.

DRIVE Hyperion: A reference architecture with all the sensors and software needed to build AVs, simplifying the development process for automakers.

Collaboration with Industry Leaders: NVIDIA partners with companies like Toyota, Mercedes-Benz, and Waymo to integrate its technology into their autonomous vehicle systems.

What major commercial applications will we see there?

Passenger Vehicles:

Self-driving cars and ride-hailing services like Waymo and Cruise.

Enhanced safety features and convenience for personal transportation.

Commercial Transport:

Autonomous trucks for long-haul freight and logistics.

Reduces costs, increases efficiency, and addresses driver shortages.

Public Transit:

Autonomous shuttles and buses for urban transportation.

Improves accessibility and reduces traffic congestion.

Robotics in Last-Mile Delivery: Self-driving vehicles for package delivery and logistics in urban environments.

Humanoids and the New Economic Paradigm

As Jensen Huang has highlighted:

This will likely be the first multitrillion-dollar robotics industry.

I’ve explained how, in the technology expansion cycle, humanoids will be a critical building block for expanding tech and enabling a whole new paradigm:

Humanoids are robots designed to resemble and mimic human physical and behavioral traits, enabling them to perform tasks in human environments with minimal adaptation.

Combining advancements in robotics, AI, and human-like interfaces, humanoids aim to work alongside humans in industries ranging from healthcare and manufacturing to personal assistance.

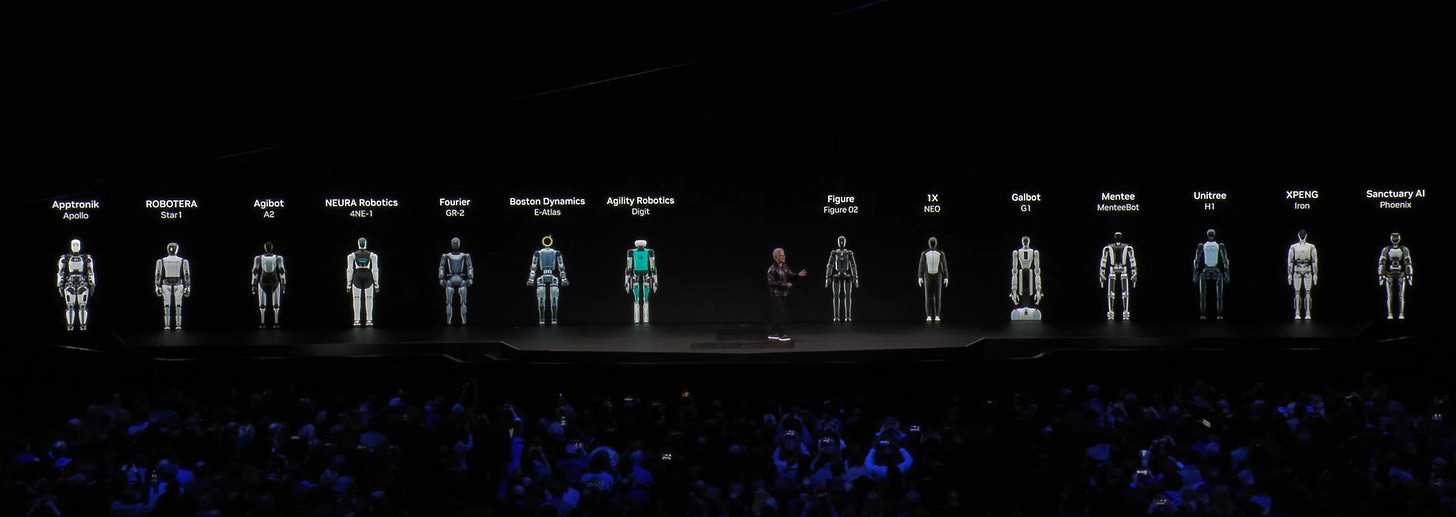

Interestingly, in just two years, we’ve seen a whole humanoid ecosystem emerge!

Jensen Huang emphasized:

The ChatGPT moment for general robotics is just around the corner!

What role will NVIDIA play in Humanoid Development?

Omniverse for Simulation:

NVIDIA’s Omniverse platform allows developers to create and simulate humanoid robots in virtual environments before physical deployment.

It provides high-fidelity physics simulations for training and testing humanoid movements and interactions.

Physical AI Models: NVIDIA Cosmos offers foundational models for Physical AI, enabling humanoids to understand gravity, friction, and spatial relationships.

AI Frameworks: Platforms like NVIDIA NeMo and NVIDIA NIM equip humanoids with natural language understanding, decision-making, and multimodal AI capabilities.

Hardware Advancements: NVIDIA GPUs power the real-time perception and decision-making capabilities required for humanoid robots.

Collaborations: Partnerships with robotics companies accelerate the development of humanoid robots for various industries.

I’ll dedicate a whole issue to it.

But for now, let’s recap the whole thing.

Recap: In This Issue!

NVIDIA's announcements at CES 2025 showcase a clear vision of the future, driven by scaling laws and breakthroughs in AI.

From autonomous vehicles to humanoids and digital workers, NVIDIA is at the forefront of enabling the AI-powered transformation of industries and daily life.

General Vision and Strategy

The ChatGPT Moment for Robotics: Jensen Huang emphasized that the breakthrough moment for general robotics is imminent, signaling a paradigm shift in robotics driven by AI.

Unified Innovation Thread: NVIDIA's announcements follow a coherent strategy to integrate AI into every aspect of computing, industry, and daily life.

30-Year Journey: From the introduction of the GPU in 1999 to CUDA in 2006 and Transformers in 2018, NVIDIA has been building the foundation for the AI revolution.

AI as a Computing Revolution

Scaling Laws:

Training Scaling Law: More data and computation yield better AI models.

Post-Training Scaling Law: Reinforcement learning enhances AI capabilities.

Test-Time Scaling Law: Improved reasoning during AI inference drives next-level results.

Generative AI to Physical AI: NVIDIA is transitioning from Generative AI and Agentic AI to Physical AI, enabling machines to perceive and act in the real world.

Key Innovations and Platforms

RTX Blackwell Architecture:

Launch of RTX Blackwell family, enhancing energy efficiency and performance across the AI tech stack.

NVIDIA Cosmos:

A platform for developing world models to support Physical AI applications like robotics and autonomous vehicles.

NVIDIA DRIVE:

End-to-end solutions for autonomous driving, including DRIVE Hyperion and Omniverse simulation for safer, faster development.

NVIDIA Omniverse:

Simulation and collaboration platform for digital twins, robotics, and humanoid development.

Core Areas of Application

Generative AI and Digital Workers:

Digital Workers: AI-powered virtual employees that operate autonomously, integrate with workflows, and scale effortlessly.

NVIDIA NeMo and NIM: Tools for training and deploying digital workers, creating an AI-driven workforce.

Industrial Digitalization:

Transforming manufacturing and logistics with digital twins, predictive maintenance, and energy optimization.

AI integration into Industrial IoT (IIoT) for smarter, more connected factories.

Autonomous Driving:

Passenger vehicles, commercial transport, and public transit enhanced by NVIDIA's AI-powered DRIVE platform.

Virtual testing with Omniverse reduces road testing costs and accelerates deployment.

Humanoids:

Robots resembling humans to operate in human environments, addressing tasks in healthcare, manufacturing, and personal assistance.

NVIDIA’s role includes simulation with Omniverse, Physical AI models with Cosmos, and AI frameworks like NeMo.

Emerging Trends

Web² Framework: AI's integration into digital marketing, enterprise applications, and consumer tech as the next phase of the web.

Agentic AI: Systems capable of reasoning, planning, and acting autonomously in complex scenarios.

Physical AI: Robots and systems that interact with the real world using action tokens and world models.

Economic and Industry Implications

Trillion-Dollar Opportunity:

Digital workers and autonomous systems represent a massive economic potential.

Humanoids are set to drive the first multitrillion-dollar robotics industry.

Workforce Augmentation:

Digital workers complement human roles, enabling creative and strategic focus.


With ♥️ Gennaro, FourWeekMBA