The AI Memory Chokepoint

[Special Issue]

The AI race has a new bottleneck, and it’s not what most observers expect. While attention remains fixed on GPU shortages and frontier model capabilities, the binding constraint on AI scaling has quietly shifted to High Bandwidth Memory (HBM).

HBM is the interface layer where compute meets data, and its physical limits on bandwidth and capacity now set the ceiling on how far AI capability can scale.
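One common way to make the memory ceiling concrete is the roofline model: attainable throughput is the lesser of peak compute and memory bandwidth multiplied by arithmetic intensity (FLOPs performed per byte moved). The sketch below is illustrative only. The peak-compute and bandwidth figures are hypothetical round numbers, not the specifications of any real accelerator, and the function names are invented for this example.

```python
def attainable_flops(peak_flops: float, mem_bandwidth: float, intensity: float) -> float:
    """Roofline bound: the lower of the compute roof and the memory roof.

    peak_flops     -- peak compute in FLOP/s
    mem_bandwidth  -- memory bandwidth in bytes/s
    intensity      -- arithmetic intensity in FLOPs per byte moved
    """
    return min(peak_flops, mem_bandwidth * intensity)


def is_memory_bound(peak_flops: float, mem_bandwidth: float, intensity: float) -> bool:
    """True when the memory roof sits below the compute roof."""
    return mem_bandwidth * intensity < peak_flops


# Hypothetical accelerator (assumed values, for illustration only).
PEAK_FLOPS = 1.0e15  # 1 PFLOP/s
MEM_BW = 3.0e12      # 3 TB/s

# Intensity at which the two roofs meet; below it, memory is the limit.
ridge_point = PEAK_FLOPS / MEM_BW

if __name__ == "__main__":
    print(f"ridge point: {ridge_point:.0f} FLOPs/byte")
    for label, intensity in [("low intensity", 2.0), ("high intensity", 500.0)]:
        bound = attainable_flops(PEAK_FLOPS, MEM_BW, intensity)
        kind = "memory-bound" if is_memory_bound(PEAK_FLOPS, MEM_BW, intensity) else "compute-bound"
        print(f"{label}: {bound:.2e} FLOP/s ({kind})")
```

Under these assumed numbers, any workload below the ridge point leaves compute idle while it waits on memory, which is the sense in which bandwidth rather than raw FLOPs can set the ceiling.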

Micron’s announcement of a ¥1.5 trillion ($9.6 billion) investment to build a new HBM production plant in Hiroshima, backed by up to ¥500 billion in Japanese government subsidies, signals the strategic importance of this layer. Construction begins in May 2026 with shipments starting in 2028.

This move positions Japan as a second pillar alongside Korea in the HBM supply chain and represents a fundamental shift in how nations and corporations are deploying capital in the AI infrastructure stack.

Memory is becoming the new compute. Demand for HBM is growing faster than demand for accelerators, making memory the rate-limiting factor in the AI scaling equation.

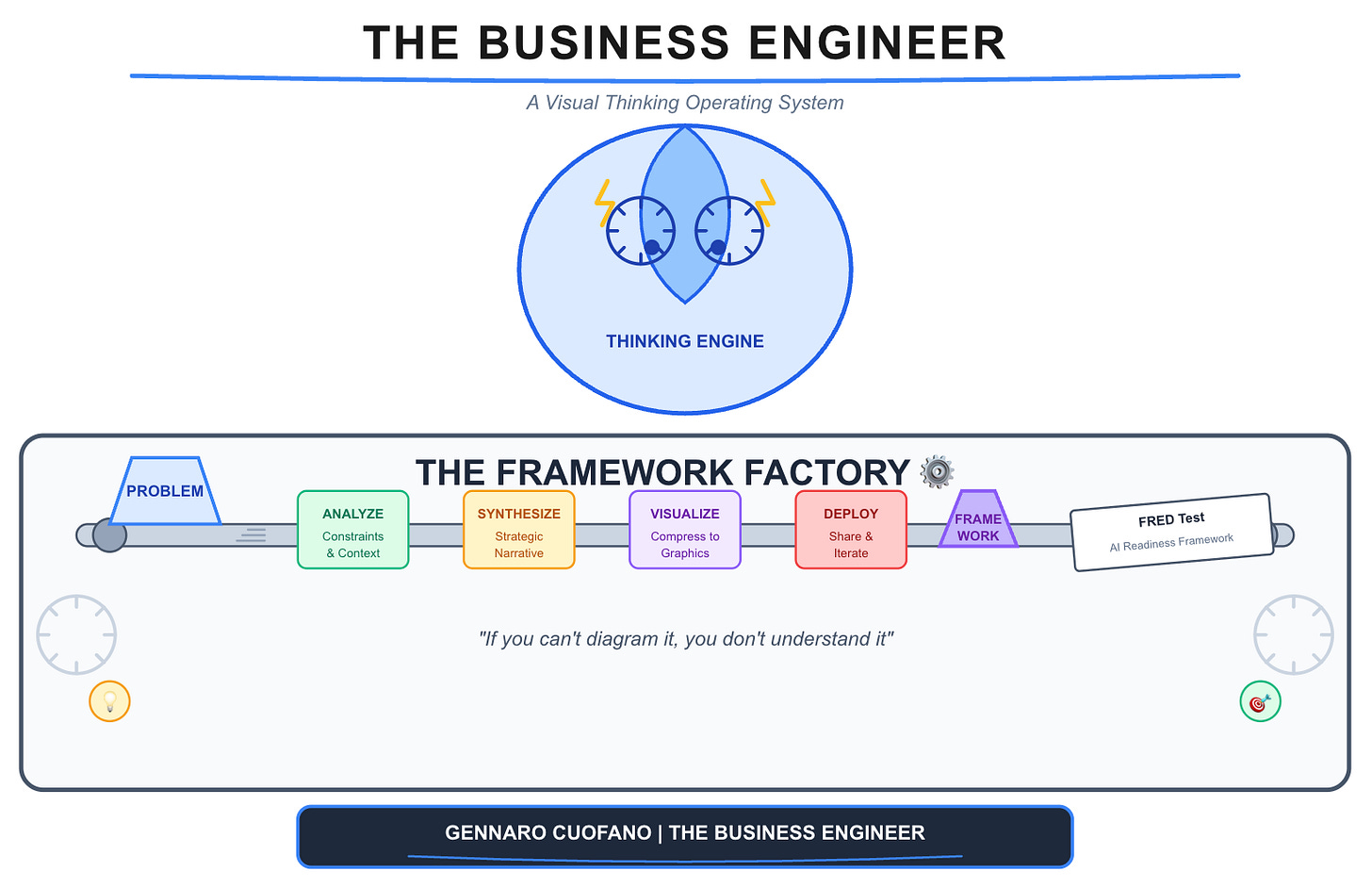
