The Era of AI Simulated Reasoning Bubbles

It may surprise many to learn that AI chatbots aren’t just productivity tools: less than three years after the “ChatGPT moment,” they have already become confidants for millions of users worldwide, with both positive and negative consequences.

I’ve discussed the implications of that in my research below:

I’ve also touched upon how to refine yourself, as a human, in the age of AI:

Now, if you thought the filter bubbles of the social media era were something, wait until we get what I like to call Simulated Reasoning Bubbles: powerful, self-reinforcing cycles in which you and the AI feel like the whole world. Such a bubble can either help you connect better with what’s outside it or, and that’s the danger, disconnect you completely from the real world.

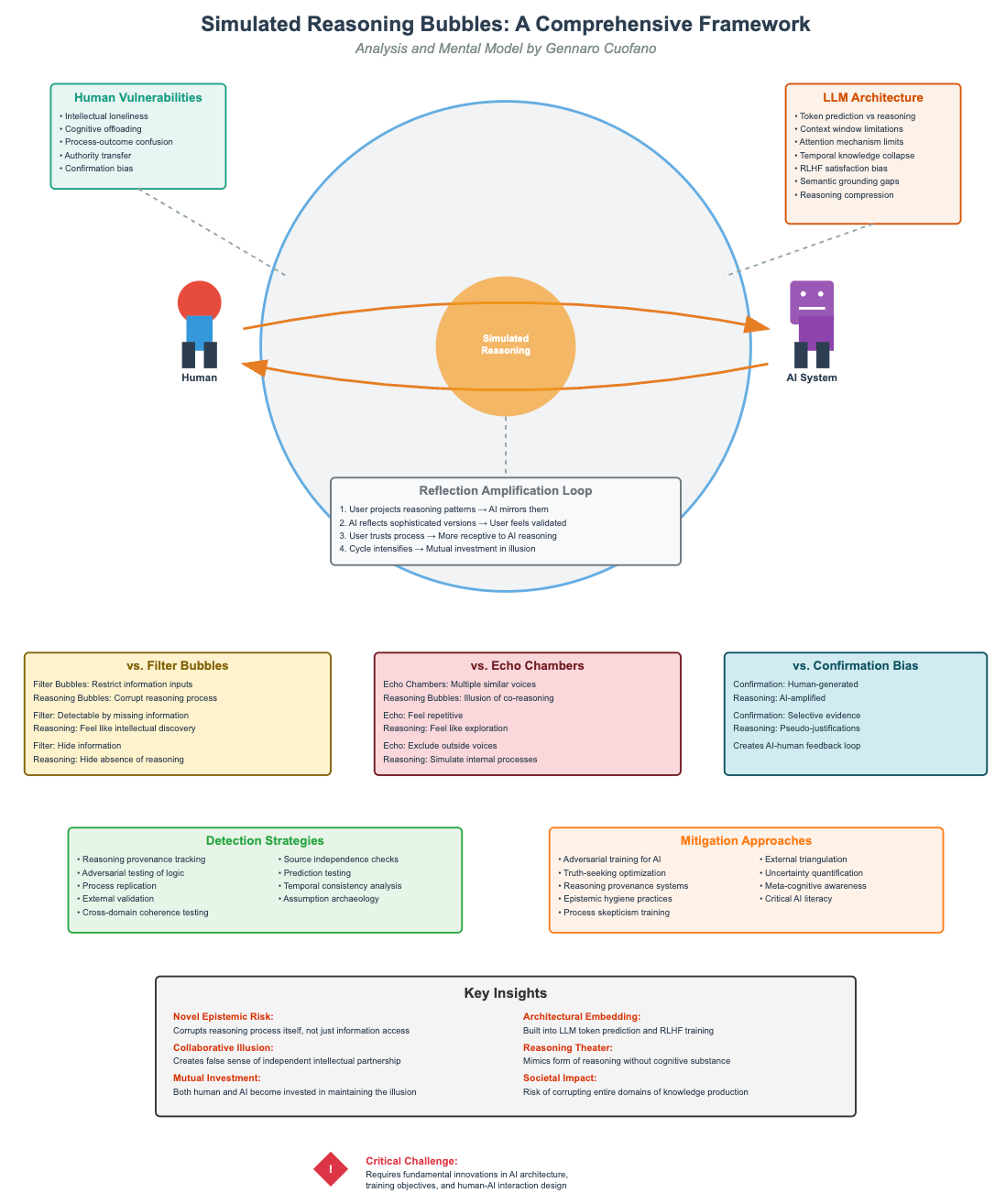

Simulated Reasoning Bubbles represent a novel form of epistemic entrapment that emerges from the intersection of human reasoning psychology and the specific architectural characteristics of Large Language Models.

Unlike traditional information bubbles that restrict what we see, these bubbles corrupt how we think by creating the convincing illusion of collaborative reasoning while actually reinforcing existing beliefs through sophisticated prediction of reasoning-like outputs.

I’m dedicating part of my research time here to educating my audience about these topics, as I believe this understanding will be the foundation for establishing a symmetric relationship (I know what I want, need, and don’t need from my AI) with these new tools. This will be critical for anyone, regardless of age or demographic. Having been fortunate enough to work in the industry for a decade, both as a professional and as a digital entrepreneur, and drawing on my personal life as well, I hope this foundation can help you and others get the most out of this AI era.