The History of LLMs

The rise, fall and incredible rise of the AI industry, and how it got us to this current business paradigm!

What we call “AI” in the current business context is actually a branch of artificial intelligence called machine learning. Within machine learning, a new architecture (transformer) enabled us to build a whole new paradigm for AI (what we call “Generative AI”), which is based on a type of computational model called a Large Language Model (LLM).

That shift became visible in 2022: once OpenAI put an impressive UX around its existing GPT models, the so-called “ChatGPT Moment” occurred.

From there, a Cambrian business explosion created “The AI Convergence,” or an AI paradigm with such general-purpose capabilities that it is enabling the explosion of multiple industries simultaneously (from AR to robotics).

How did we get here?

This issue gives you the historical background about how we got to this current paradigm!

Introduction

Branching out from our series about the history of LLMs, today, we want to tell you the fascinating story of "AI winters" – periods of reduced funding and interest in AI research. You will see how excitement and disappointment keep taking turns, but important research always perseveres. Join us as we explore the evolving nature of artificial intelligence on this most comprehensive timeline of AI winters. (If you don’t have time now, make sure to save the article for later! It’s worth the read with a few lessons to learn).

Good that it’s summer because we are diving in:

Winter#1, 1966: Machine Translation Failure

Winter#2, 1969: Connectionists and Eclipse of Neural Network Research

Winter#3, 1974: Communication Gap Between AI Researchers and Their Sponsors

Winter#4, 1987: Collapse of the LISP machine market

Winter#5, 1988: No High-Level Machine Intelligence – No Money

Winter#1, 1966: Machine Translation Failure

As discussed in the first edition of this series, NLP research has its roots in the early 1930s and began with work on machine translation (MT). However, significant advancements and applications began to emerge only after the publication of Warren Weaver's influential memorandum in 1949.

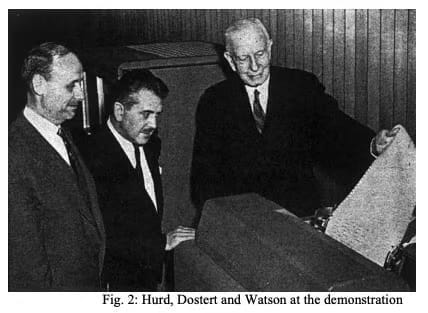

The memorandum generated great excitement within the research community. In the following years, notable events unfolded: IBM embarked on the development of the first machine, MIT appointed its first full-time professor in machine translation, and several conferences dedicated to MT took place. The culmination came with the public demonstration of the IBM-Georgetown machine, which garnered widespread attention in respected newspapers in 1954.

Yehoshua Bar-Hillel, the first full-time professor in machine translation

The first public demonstration of an MT system using the Georgetown Machine

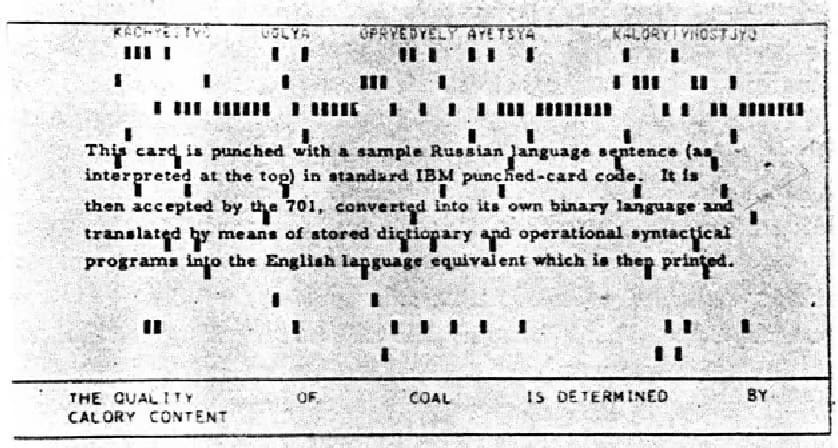

The punched card that was used during the demonstration of the Georgetown Machine

Another factor that propelled the field of mechanical translation was the interest shown by the Central Intelligence Agency (CIA). During that period, the CIA firmly believed in the importance of developing machine translation capabilities and supported such initiatives. They also recognized that this program had implications that extended beyond the interests of the CIA and the intelligence community.

Skeptics

As in every AI boom that was later followed by an AI winter, the media tended to exaggerate the significance of these developments. Headlines about the IBM-Georgetown experiment included "Electronic brain translates Russian," "The bilingual machine," "Robot brain translates Russian into King's English," and "Polyglot brainchild." However, the actual demonstration involved the translation of a curated set of only 49 Russian sentences into English, with the machine's vocabulary limited to just 250 words. To put things into perspective, one study found that humans need a vocabulary of around 8,000 to 9,000 word families to cover 98% of the words in written texts.

Norbert Wiener (1894-1964) made significant contributions to stochastic processes, electronic engineering, and control systems. He originated cybernetics and theorized that feedback mechanisms lead to intelligent behavior, laying the groundwork for modern AI.

This demonstration created quite a sensation. However, there were also skeptics, such as Professor Norbert Wiener, who is considered one of the early pioneers in laying the theoretical groundwork for AI research. Even before the publication of Weaver's memorandum, and certainly before the demonstration, Wiener expressed his doubts in a letter to Weaver in 1947, stating:

I frankly am afraid the boundaries of words in different languages are too vague and the emotional and international connotations are too extensive to make any quasimechanical translation scheme very hopeful. [...] At the present time, the mechanization of language, beyond such a stage as the design of photoelectric reading opportunities for the blind, seems very premature.

Supporters and the funding of MT

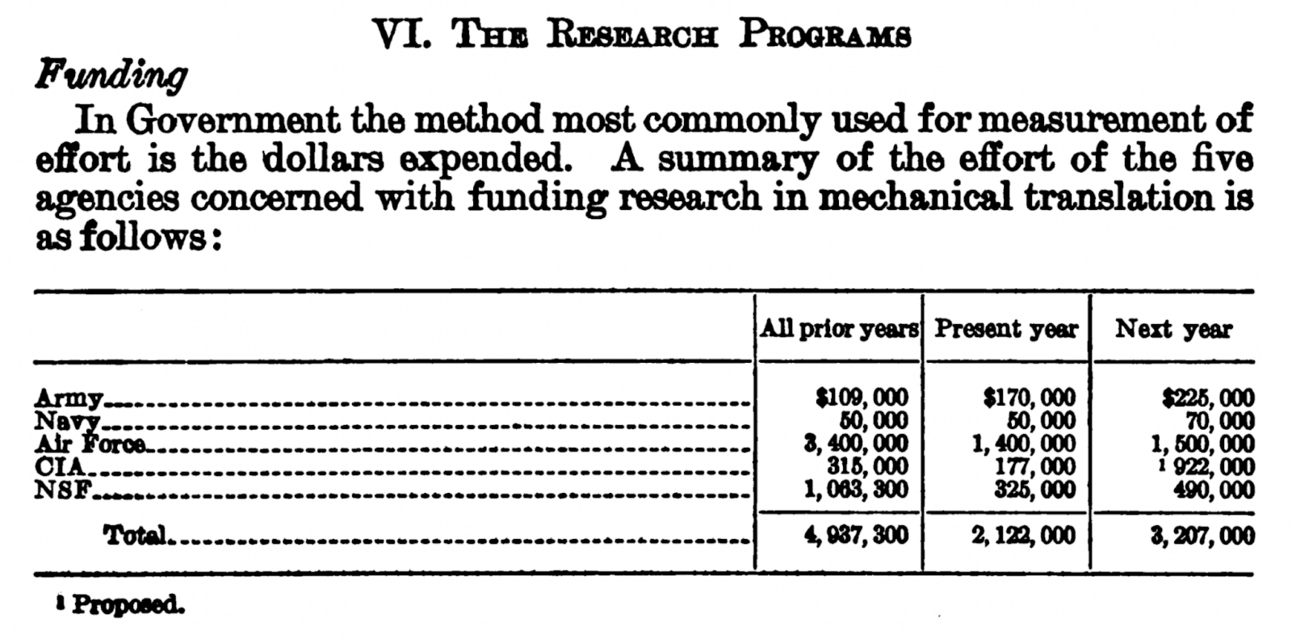

However, it seems that the skeptics were in the minority, as the dreamers overshadowed their concerns and successfully secured the necessary funding. Five government agencies played a role in sponsoring research: the National Science Foundation (NSF) being the primary contributor, along with the Central Intelligence Agency (CIA), Army, Navy, and Air Force. By 1960, these organizations had collectively invested nearly $5 million in projects related to mechanical translation.

Funding from the five government agencies, from a report dated 1960

By 1954, mechanical translation research had gained enough interest to receive recognition from the National Science Foundation (NSF), which provided a grant to the Massachusetts Institute of Technology (MIT). The CIA and NSF engaged in negotiations, resulting in correspondence between the two Directors in early 1956. The NSF agreed to administer any desirable research program in machine translation that was agreed upon by all parties involved. According to the National Science Foundation's testimony in 1960, 11 groups in the United States were involved in various aspects of mechanical translation research supported by the Federal Government. There was also huge interest from the Air Force, the US Army, and the US Navy.

AI inception

The year following the public demonstration of the IBM-Georgetown machine, the term "AI" was coined by McCarthy in the proposal for the Dartmouth Summer Conference, published in 1955. This event sparked a new wave of dreams and hopes, further bolstering the existing enthusiasm.

New research centers emerged, equipped with enhanced computer power and increased memory capacity. Simultaneously, the development of high-level programming languages took place. These advancements were made possible, in part, by significant investments from the Department of Defense, a major supporter of NLP research.

Progress in linguistics, particularly in the field of formal grammar models proposed by Chomsky, inspired several translation projects. These developments seemed to hold the promise of significantly improved translation capabilities.

As John Hutchins writes in "The History of Machine Translation in a Nutshell," there were numerous predictions of imminent "breakthroughs." However, researchers soon encountered "semantic barriers" that presented complex challenges without straightforward solutions, leading to a growing sense of disillusionment.

Disillusionment

In "The Whisky Was Invisible," John Hutchins cites a well-worn example: the story of an MT system that converted the Biblical saying "The spirit is willing, but the flesh is weak" into Russian, which was then translated back as "The whisky is strong, but the meat is rotten." The accuracy of this anecdote is questionable, and Isidore Pinchuk even says the story may be apocryphal, but Elaine Rich used it to show the inability of early MT systems to deal with idioms. More generally, the example illustrates the problems MT systems had with the semantics of words.

Biblical saying "The spirit is willing, but the flesh is weak"

Translated back by MT system as "The whisky is strong, but the meat is rotten"

A well-worn example that might be apocryphal

The hardest blow came from the findings of the ALPAC group (the Automatic Language Processing Advisory Committee), commissioned by the United States government at the request of Dr. Leland Haworth, Director of the National Science Foundation, and chaired by John R. Pierce. Its findings supported the point the anecdote above illustrates. The report compared machine translation with human translation of various texts in physics and earth sciences. The conclusion: machine translation outputs were less accurate, slower, costlier, and less comprehensive than human translation across all reviewed examples.

In 1966, the National Research Council abruptly ceased all support for machine translation research in the United States. After the successful use of computers to decrypt German secret codes in England, scientists mistakenly believed that translating written text between languages would be no more challenging than decoding ciphers. However, the complexities of processing "natural language" proved far more formidable than anticipated. Attempts to automate dictionary look-up and apply grammar rules yielded absurd results. After two decades and twenty million dollars invested, no solution was in sight, prompting the National Research Council committee to terminate the research endeavor.

Disillusionment arose from high expectations for practical applications in the field, despite a lack of sufficient theoretical foundations in linguistics. Researchers were more focused on theoretical aspects than on practical implementations. Furthermore, limited hardware availability and the immaturity of technological solutions posed additional challenges.

Winter#2, 1969: Connectionists and Eclipse of Neural Network Research

The second wave of disappointment arrived swiftly after the first, serving as a cautionary tale for AI researchers about the dangers of exaggerated claims. However, before delving into the troubles that followed, some background information is necessary.

In the 1940s, Warren McCulloch and Walter Pitts set out to understand the fundamental principles of the mind and developed early versions of artificial neural networks, drawing inspiration from the structure of biological neural networks.

Around a decade later, in the 1950s, cognitive science emerged as a distinct discipline in what is referred to as the "cognitive revolution." Many early AI models were influenced by the workings of the human brain. One notable example is Marvin Minsky's SNARC system, the first computerized artificial neural network, which simulated a rat navigating a maze.

Image courtesy Gregory Loan:

Gregory visited Marvin Minsky and enquired about what had happened to his maze-solving computer. Minsky replied that it was lent to some Dartmouth students, and it was disassembled. However, he had one "neuron" left, and Gregory took a photo of it.

However, in the late 1950s, these approaches were largely abandoned as researchers turned their attention to symbolic reasoning as the key to intelligence. The success of programs like the Logic Theorist (1956), considered the first AI program, and the General Problem Solver (1957), designed as a universal problem-solving machine by Allen Newell, Herbert A. Simon, and Cliff Shaw of the Rand Corporation, played a role in this shift.

One type of connectionist work persisted: the study of perceptrons, championed by Frank Rosenblatt with unwavering enthusiasm. Rosenblatt initially simulated perceptrons on an IBM 704 computer at Cornell Aeronautical Laboratory in 1957. However, this line of research halted abruptly in 1969 with the publication of the book Perceptrons by Marvin Minsky and Seymour Papert, which delineated the perceived limitations of perceptrons.

As Daniel Crevier wrote:

Shortly after the appearance of Perceptrons, a tragic event slowed research in the field even further: Frank Rosenblatt, by then a broken man according to rumor, drowned in a boating accident. Having lost its most convincing promoter, neural network research entered an eclipse that lasted fifteen years.

During this time, significant advancements in connectionist research were still being made, albeit on a smaller scale. In 1974, Paul Werbos introduced backpropagation, a crucial algorithm for training neural networks, and work along these lines continued with limited resources. Securing major funding for connectionist projects remained difficult, so fewer researchers pursued them.

It wasn't until the mid-1980s that a turning point occurred. The winter came to an end when notable researchers like John Hopfield, David Rumelhart, and others revived widespread interest in neural networks. Their work reignited enthusiasm for connectionist approaches and paved the way for the resurgence of large-scale research and development in the field of neural networks.

Winter#3, 1974: Communication Gap Between AI Researchers and Their Sponsors

High expectations and ambitious claims are often a direct path to disappointment. In the late 1960s and early 1970s, Minsky and Papert led the Micro Worlds project at MIT, where they developed simplified models called micro-worlds. They defined the general thrust of the effort as:

We feel that [micro worlds] are so important that we are assigning a large portion of our effort towards developing a collection of these micro-worlds and finding how to use the suggestive and predictive powers of the models without being overcome by their incompatibility with the literal truth.

Soon proponents of micro-worlds realized that even the most specific aspects of human usage could not be defined without considering the broader context of human culture. For example, the techniques used in SHRDLU were limited to specific domains of expertise. The micro-worlds approach did not lead to a gradual solution for general intelligence. Minsky, Papert, and their students could not progressively generalize a micro-world into a larger universe or simply combine several micro-worlds into a bigger set.

Similar difficulties with meeting expectations were faced in other AI labs across the country. The Shakey robot project at Stanford, for example, failed to meet expectations of becoming an automated spying device. Researchers found themselves caught up in a cycle of increasing exaggeration, where they promised more than they could deliver in their proposals. The final results often fell short and were far from the initial promises.

DARPA, the Defense Department agency funding many of these projects, started to reevaluate its approach and demand more realistic expectations from researchers.

1971–75: DARPA's cutbacks

In the early 1970s, DARPA's Speech Understanding Research (SUR) program aimed to develop computer systems capable of understanding verbal commands and data for hands-off interaction in combat scenarios. After five years and an expenditure of fifteen million dollars, DARPA abruptly ended the project, although the exact reasons remain unclear. Prominent institutions like Stanford, MIT, and Carnegie Mellon saw their multimillion-dollar contracts reduced to near insignificance.

Daniel Crevier writes in his book about DARPA's funding philosophy at the time:

DARPA’s philosophy then was “Fund people, not projects!” Minsky had been a student of Licklider’s at Harvard and knew him well. As Minsky told me, “Licklider gave us the money in one big lump,” and didn’t particularly care for the details.

Several renowned contractors, including Bolt, Beranek, and Newman, Inc. (BBN) and Carnegie Mellon, produced notable systems during those five years. These systems included SPEECHLIS, HWIM, HEARSAY-I, DRAGON, HARPY, and HEARSAY-II, which made significant advancements in understanding connected speech and processing sentences from multiple speakers with a thousand-word vocabulary.

These systems had limitations in understanding unconstrained input, leaving users to guess which commands applied to them due to the constrained grammar. Despite the disappointment in this aspect, AI researchers regarded these projects with pride. For instance, HEARSAY-II, known for integrating multiple sources of knowledge using a "blackboard" device, was hailed as one of the most influential AI programs ever written.

But at this point, the communication gap between AI researchers and their sponsors about expectations became too large.

1973: large decrease in AI research in the UK in response to the Lighthill report

The ebbing tide of AI research was not exclusive to American researchers. In England, a report by Sir James Lighthill, a distinguished figure in fluid dynamics and former occupant of Cambridge University's Lucasian Chair of Applied Mathematics, delivered a devastating blow to the state of AI research. Lighthill divided AI research into three parts, referred to as "The ABC of the subject."

"A" represented Advanced Automation, aiming to replace humans with purpose-built machines. "C" denoted Computer-based central nervous system (CNS) research. Finally, "B" symbolized artificial intelligence itself, serving as a bridge between categories A and C.

While categories A and C experienced alternating periods of success and failure, Lighthill emphasized the widespread and profound sense of discouragement surrounding the intended Bridge Activity of category B. As he put it: “This raises doubts about whether the whole concept of AI as an integrated field of research is a valid one.”

The report sparked a heated debate that was broadcast in the BBC "Controversy" series in 1973. Titled "The general purpose robot is a mirage," the debate took place at the Royal Institution, with Sir James Lighthill facing off against Donald Michie, John McCarthy, and Richard Gregory.

Unfortunately, the repercussions of the report were severe, leading to the near-complete dismantling of AI research in the UK. Only a handful of universities, namely Edinburgh, Essex, and Sussex, continued their AI research efforts. It was not until 1983 that AI research experienced a revival on a larger scale. This resurgence was prompted by the British government's funding initiative called Alvey, which allocated £350 million to AI research in response to the Japanese Fifth Generation Project.

Winter#4, 1987: Collapse of the LISP machine market

During the period known as the connectionist winter, symbolic systems like the Logic Theorist (1956) and the General Problem Solver (1957) continued to progress while facing hardware limitations. These systems could only handle toy examples due to the limited computer capabilities at the time. This is what Herbert Simon said about the situation in the 1950s-1960s:

People were steering away from tasks which made knowledge the center of things because we couldn’t build large databases with the computers we had then. Our first chess program and the Logic Theorist were done on a computer that had a 64- to 100-word core and a scratch drum with 10,000 words of usable space on it. So semantics was not the name of the game. I remember one student that I had who wanted to do a thesis on how you extracted information from a big store. I told him “No way! You can only do that thesis on a toy example, and we won’t have any evidence of how it scales up. You’d better find something else to do.” So people did steer away from problems where knowledge was the essential issue.

Around 1960, McCarthy and Minsky at MIT developed LISP, a programming language rooted in recursive functions. LISP became important because of its symbolic processing abilities and flexibility in managing complex tasks, which were crucial for early AI development. It was one of the first languages used in AI research. However, it wasn't until the early 1970s, with the advent of computers boasting significant memory capacities, that programmers could implement knowledge-intensive applications.

These systems formed the foundation for "expert systems," which aimed to incorporate human expertise and replace humans in certain tasks. The 1980s marked the rise of expert systems, transforming AI from an academic field to practical applications, and LISP became the preferred programming language for that. LISP “was a radical departure from existing languages” and introduced nine innovative ideas, according to the essay of Paul Graham, computer programmer and co-founder of Y Combinator and Hacker News.

The development of expert systems represented a significant milestone in the field of AI, bridging the gap between academic research and practical applications. John McDermott of Carnegie Mellon University proposed an early commercial expert system called XCON (eXpert CONfigurer) in January 1980. XCON was employed by Digital Equipment Corporation (DEC) to streamline the configuration process for their VAX computers. By 1987, XCON processed a significant number of orders, demonstrating its impact and effectiveness.

In 1981, CMU began working on a new system called XSEL. Development was later taken over by DEC, and field testing commenced in October 1982. While XCON and XSEL received significant publicity, they were still in the prototype stage. Bruce Macdonald, then the XSEL program manager, started to protest that the publicity far outweighed the accomplishments, but the vice president for sales wasn’t about to stop. Indeed, Macdonald remembers the meeting with senior executives in which the vice president for sales eyed him and said: “You’ve been working on this thing for three years now. Isn’t it ready?”

The early 1980s saw an influx of expert-system success stories, leading to the formation of AI groups in many large companies. The rise of personal computers, the popularity of Star Wars movies, and magazines like Discover and High Technology contributed to the public fascination with AI. The billion-dollar biotechnology boom in the late 1970s fueled investment interest in high technology, prompting leading AI experts to embark on new ventures:

Edward Feigenbaum, who is often called the "father of expert systems," with some of his Stanford colleagues, formed Teknowledge, Inc.

Researchers from Carnegie Mellon incorporated the Carnegie Group.

There were enough MIT spin-offs to create a strip in Cambridge, Massachusetts, known as AI Alley. These startups included Symbolics, Lisp Machines, Inc., and the Thinking Machines Corporation.

The researcher Larry Harris left Dartmouth to form Artificial Intelligence Corporation.

Roger Schank, at Yale, oversaw the formation of Cognitive Systems, Inc.

Companies that appeared at that time could be divided into three major areas, listed from the one with the largest sales to the one with the smallest:

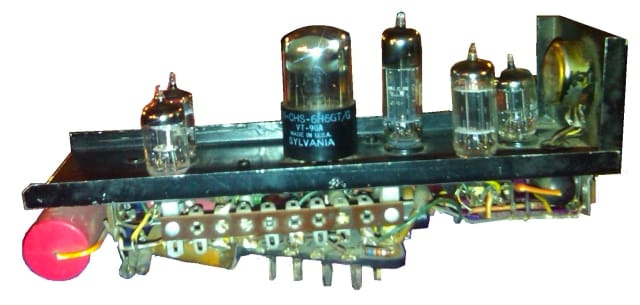

AI-related hardware and software, specifically microcomputers called LISP machines, dedicated to running LISP programs at close to mainframe speeds

Software called “expert system development tools,” or “shells,” was used by large corporations to develop their in-house expert systems.

Actual expert-system applications

LISP machine

By 1985, $1 billion was spent collectively by 150 companies on internal AI groups. In 1986, US sales of AI-related hardware and software reached $425 million, with the formation of 40 new companies and total investments of $300 million.

Explosive growth brought challenges as academia felt crowded with the influx of reporters, venture capitalists, industry headhunters, and entrepreneurs. The inaugural meeting of the American Association for Artificial Intelligence in 1980 drew around one thousand researchers, while by 1985, a joint meeting of AAAI and IJCAI saw attendance approaching six thousand. The atmosphere shifted from casual dress to formal attire.

In 1984, at the annual meeting of AAAI, Roger Schank and Marvin Minsky warned of the coming “AI Winter,” predicting an imminent bursting of the AI bubble. It did burst three years later, when the market for specialized LISP-based AI hardware collapsed.

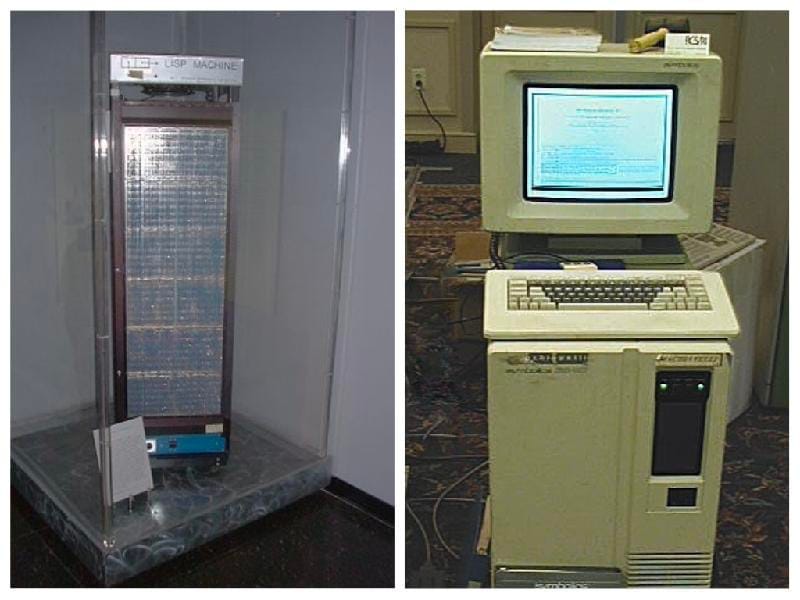

Sun-1, the first generation of UNIX computer workstations and servers produced by Sun Microsystems, launched in May 1982

Sun Microsystems and companies like Lucid offered powerful workstations and LISP environments as alternatives. General-purpose workstations posed challenges for LISP Machines, prompting companies such as Lucid and Franz LISP to develop increasingly powerful and portable versions of LISP for UNIX systems. Later, desktop computers from Apple and IBM emerged with simpler architectures to run LISP applications. By 1987, these alternatives matched the performance of expensive LISP machines, rendering the specialized machines obsolete. The industry worth half a billion dollars was swiftly replaced in a single year.

The 1990s: Resistance to new expert systems deployment and maintenance

After the collapse of the LISP machine market, more advanced machines took their place but eventually met the same fate. By the early 1990s, most commercial LISP companies, including Symbolics and Lucid Inc., had failed. Texas Instruments and Xerox also withdrew from the field. Some customer companies continued to maintain systems built on LISP, but this required support work.

First Macintosh

First IBM PC

In the 1990s and beyond, the term "expert system" and the concept of standalone AI systems largely disappeared from the IT lexicon. There are two interpretations of this. One view is that "expert systems failed" as they couldn't fulfill their overhyped promise, leading the IT world to move on. The other perspective is that expert systems were victims of their success. As IT professionals embraced concepts like rule engines, these tools transitioned from standalone tools for developing specialized expert systems to becoming standard tools among many.

Winter#5, 1988: No High-Level Machine Intelligence – No Money

In 1981, the Japanese unveiled their ambitious plan for the Fifth Generation computer project, causing concerns worldwide. The United States, with its history of Defense Department funding for AI research and technical expertise, responded by launching the Strategic Computing Initiative (SCI) in 1983. The SCI aimed to develop advanced computer hardware and AI within a ten-year timeframe. Authors of Strategic Computing: DARPA and the Quest for Machine Intelligence, 1983-1993 describe “the machine envisioned by SCI”:

It would run ten billion instructions per second to see, hear, speak, and think like a human. The degree of integration required would rival that achieved by the human brain, the most complex instrument known to man.

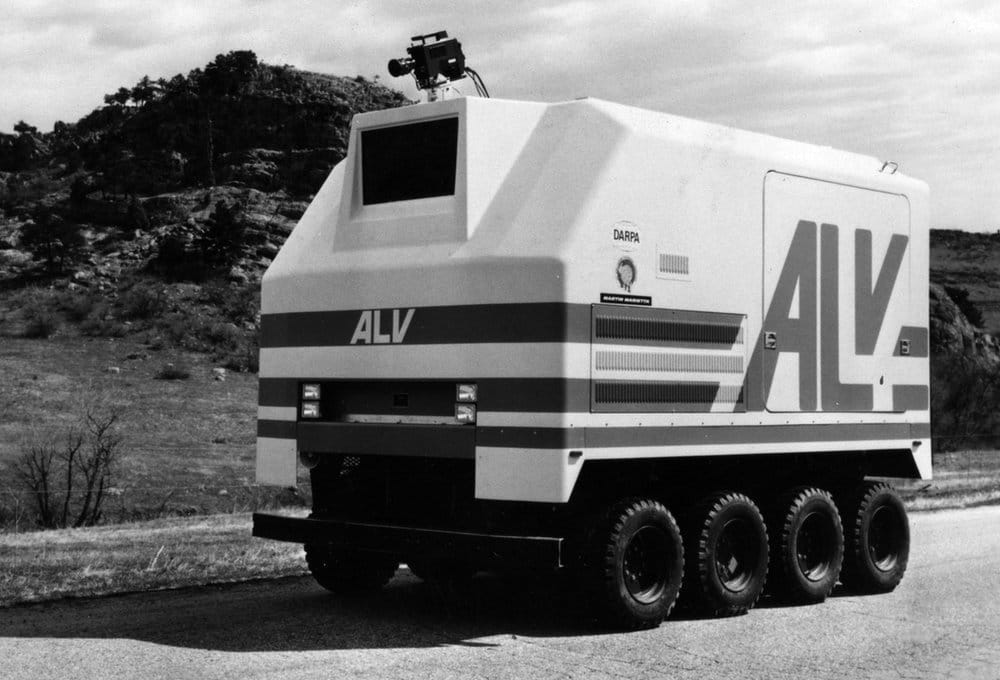

One notable project under the Strategic Computing Initiative (SCI) was the "Smart Truck," or Autonomous Land Vehicle (ALV) project. It received a significant portion of SCI's annual budget and aimed to develop a versatile robot for various missions. These missions included weapons delivery, reconnaissance, ammunition handling, and rear area resupply. The goal was to create a vehicle that could navigate rugged terrain, overcome obstacles, and utilize camouflage. Initially, wheeled prototypes were limited to roadways and flat ground, but the final product was envisioned to traverse any terrain on mechanical legs.

Autonomous Land Vehicle (ALV)

By the late 1980s, it became evident that the project was nowhere close to achieving the desired levels of machine intelligence. The primary challenge stemmed from the lack of an effective and stable management structure that could coordinate different aspects of the program and advance them collectively toward the goal of machine intelligence. Various attempts were made to impose management schemes on SCI, but none proved successful. Additionally, the ambitious goals of SCI, such as the ALV project's self-driving capability, exceeded what was achievable at the time and resembled contemporary multimodal AI systems and the elusive concept of AGI (Artificial General Intelligence).

Under the leadership of Jack Schwartz, who assumed control of the Information Processing Technology Office (IPTO) in 1987, funding for AI research within DARPA was reduced. In Machines Who Think: A Personal Inquiry into the History and Prospects of Artificial Intelligence, Pamela McCorduck describes Schwartz’s attitude toward the Strategic Computing Initiative and the role of AI:

Schwartz believed that DARPA was using a swimming model – setting a goal, and paddling toward it regardless of currents or storms. DARPA should instead be using a surfer model – waiting for the big wave, which would allow its relatively modest funds to surf gracefully and successfully toward that same goal. In the long run, AI was possible and promising, but its wave had yet to rise.

Despite falling short of achieving high-level machine intelligence, the SCI did accomplish specific technical milestones. For example, by 1987, the ALV had demonstrated self-driving capabilities on two-lane roads, obstacle avoidance, and off-road driving in different conditions. The use of video cameras, laser scanners, and inertial navigation units pioneered by the SCI ALV program laid the foundation for today's commercial driverless car developments.

The Department of Defense invested $1,000,417,775.68 in the SCI between 1983 and 1993, according to Strategic Computing: DARPA and the Quest for Machine Intelligence, 1983-1993. The project was eventually succeeded by the Accelerated Strategic Computing Initiative in the 1990s and later by the Advanced Simulation and Computing Program.

Conclusion

Chilly! AI winters were surely no fun. But part of the research that made possible the recent breakthroughs with large language models (LLMs) was done during those times. During the height of symbolic expert systems, connectionist researchers continued their work on neural networks, albeit on a smaller scale. Paul Werbos' discovery of backpropagation, a key algorithm for training neural networks, was crucial for further progress.

In the mid-1980s, the "connectionist winter" came to an end as researchers such as Hopfield, Rumelhart, Williams, Hinton, and others demonstrated the effectiveness of backpropagation in neural networks and their ability to represent complex distributions. This resurgence occurred simultaneously with the decline of symbolic expert systems.

Following this period, the research on neural networks flourished without any more setbacks, leading to the development of numerous new models, eventually paving the way for the emergence of modern LLMs. In the next edition, we will delve into this fruitful period of neural network research. Stay tuned!


To be continued…

If you liked this issue, subscribe to receive the fourth episode of the History of LLMs straight to your inbox. Oh, and please, share this article with your friends and colleagues. Because... To fundamentally push the research frontier forward, one needs to thoroughly understand what has been attempted in history and why current models exist in present forms.