The Next AI Scaling Phase

Many think the era of scaling AI is over. In my opinion, that’s wrong. I have followed the industry for quite some time, and I have also had the chance to restructure my whole media business from generative AI to what we can now call contextual AI. From that vantage point, I believe we will not only keep scaling, but the process of scaling AI now looks far more linear than it did just a few years ago.

However, instead of just scaling data, parameters, and compute, we’ll need something else on top.

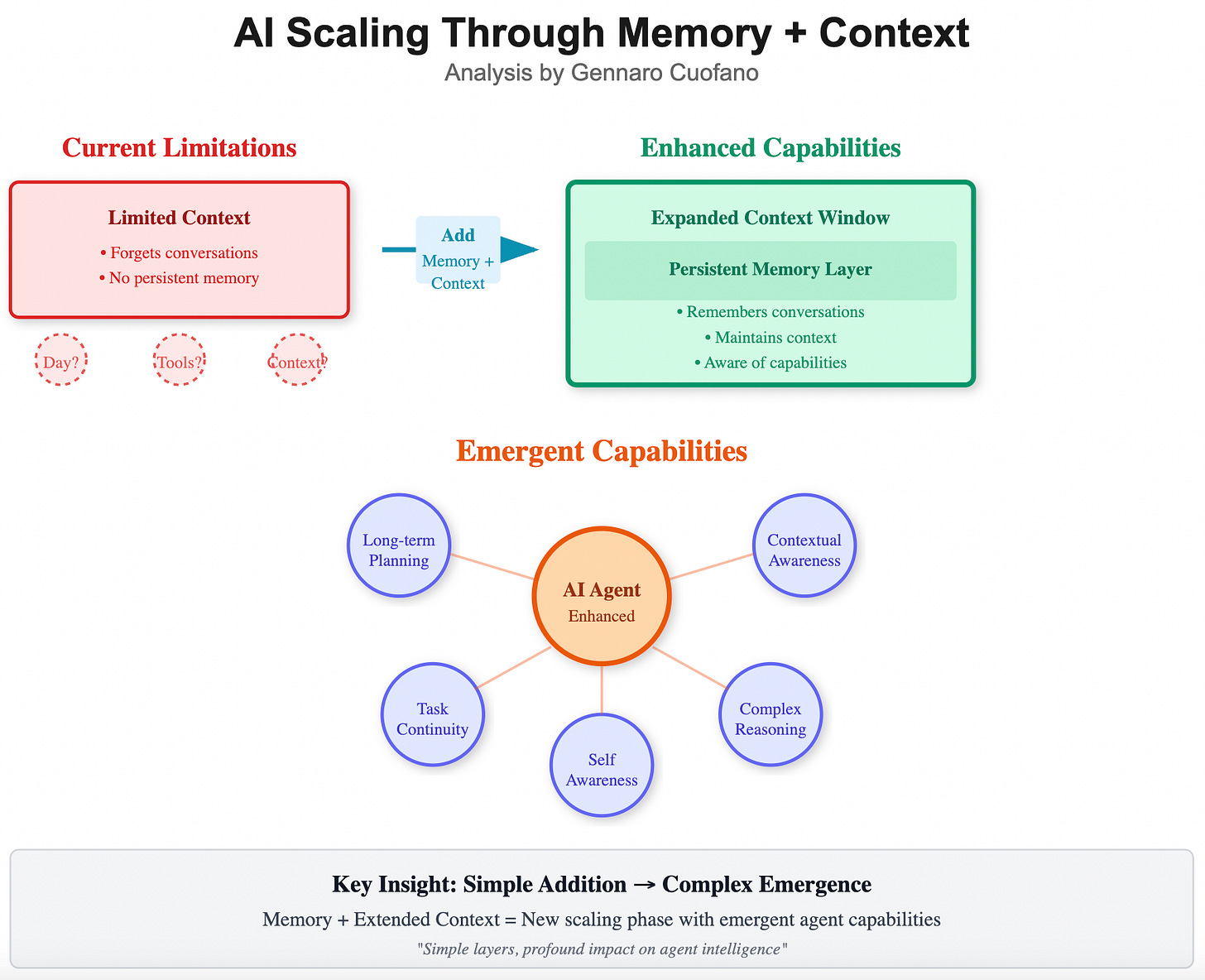

Something as simple as adding memory and expanding context windows for AI agents will make a major difference, creating another scaling phase in artificial intelligence.

We stand at a peculiar moment where the most transformative improvements don't require revolutionary breakthroughs in model architecture or training paradigms, but rather the implementation of what seems almost mundane: memory persistence and broader contextual awareness.

The elegance lies in the simplicity. These aren't exotic technologies; they're fundamental capabilities we take for granted in human cognition. Yet their absence in current AI systems creates a chasm between potential and performance that we're only beginning to understand.