This Week In AI Business: AI CapEx Race, Talent Wars, & No Moats in AI? [Week #6-2025]

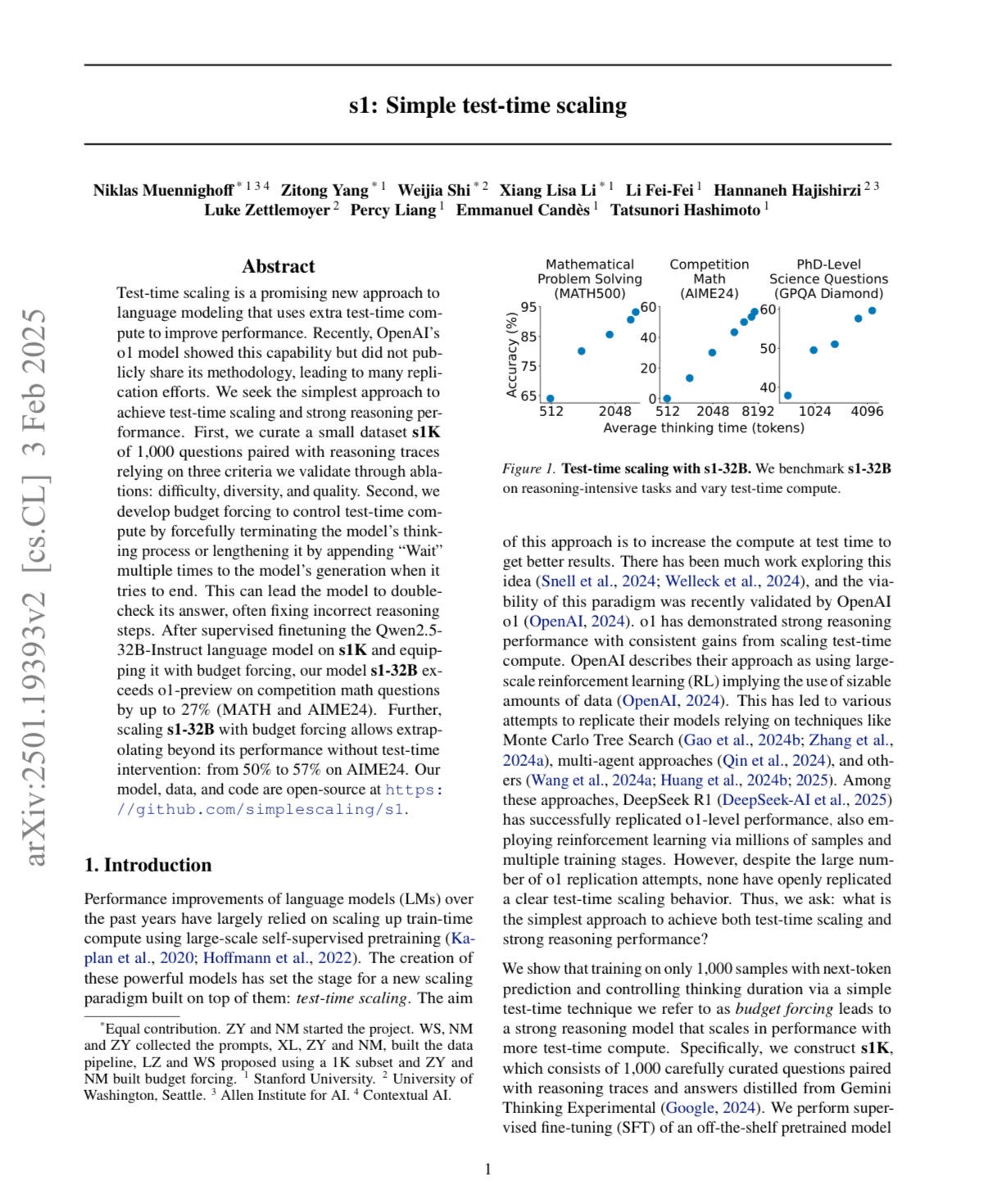

Prepare for an AI reasoning take-off as a further technique/paradigm; test-time scaling makes reasoning much cheaper and better.

That means we might go much faster from reasoning to agents (meant open-ended ones capable of very complex tasks) than expected.

For instance, during test-time computing, we’ve learned that AI models can improve reasoning by scaling up and thinking longer during inference.

However, with test-time scaling, we learned that simply budget-forcing the reasoning model gets much better by using fewer resources.

In short, researchers added a “Wait” command when the “reasoning AI process” wasn’t efficient, thus stopping it instead of having it consume tokens without providing the proper outcome.

This method outperformed OpenAI’s o1-preview, hinting that brute-force scaling may give way to smarter optimization techniques on top.

In short, that points out that there is a lot of optimization space on top of these reasoning models that can be done on the cheap and with “smart hacks”

Indeed, at least on the basic reasoning side, there is still so much optimization space that the progress can continue for the next few years.

In short, since the “ChatGPT moment,” we went and are going through three significant phases of take-off:

LLMs via pre-training, where all it mattered was scaling data, computing, and tweaking attention-based algorithms to get there. While this has hit, in part, a plateau,

Reasoning via post-training (currently here) and a set of architectural tricks on top enable AI models to produce outcomes way more complex than simple answers. We’re now at the phase where this is accelerating.

“Real agentic” (we’re getting there fast), here we move from a paradigm where the human is continuously in the loop to make the LLM work on several tasks to a paradigm where the human only checks if the agent output aligns with the desired outcome as it handles multiple tasks in parallel and in background.

These combined and further developing in parallel will make things go even faster.

This also shows that the “cost of inference” and then “reasoning” and thus intelligence are going down fast, creating an impressive race at the AI frontier, where there are no moats.

But if that is the case, are there no moats in AI?

In this weekly I will focus on that!

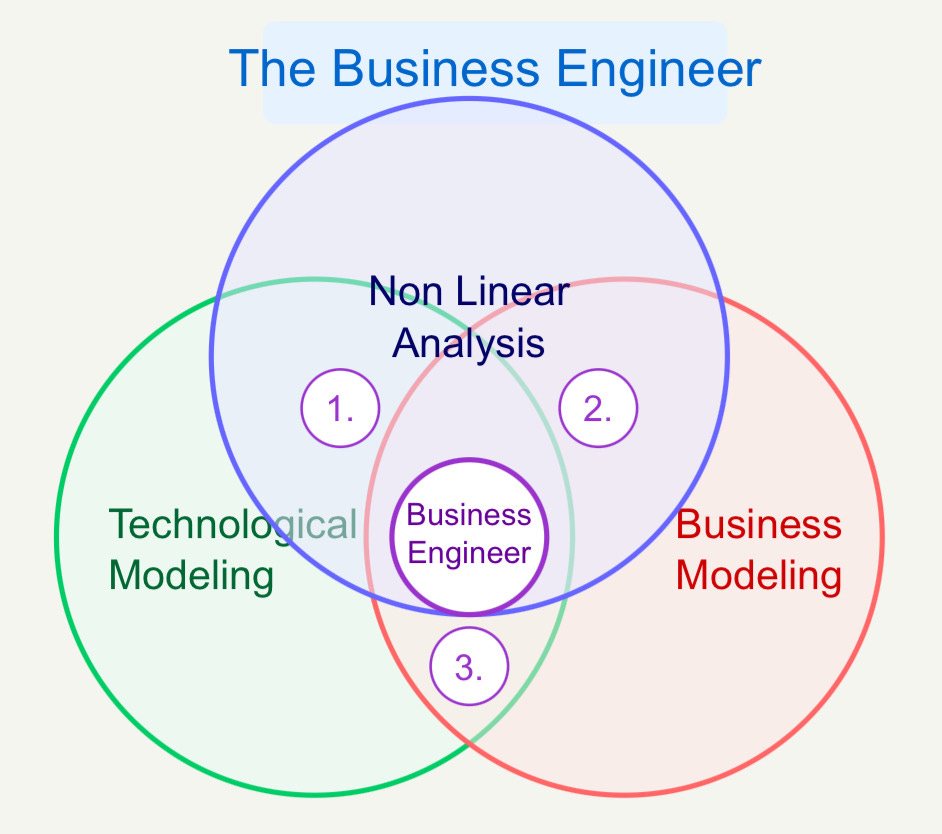

The weekly newsletter is in the spirit of what it means to be a Business Engineer:

We always want to ask three core questions:

What’s the shape of the underlying technology that connects the value prop to its product?

What’s the shape of the underlying business that connects the value prop to its distribution?

How does the business survive in the short term while it sticks to its long-term vision via transitional business modeling market dynamics?

These non-linear analyses aim to isolate the short-term buzz and noise, find the signal, and ensure you can reconcile the short with the long term!