Amazon's Dual AI Power Move

Just a few days back, Amazon announced a further $4 billion investment in Anthropic. While this may look like simply throwing money at AI, a more fundamental shift is happening right now!

Amazon is executing a very powerful AI strategy via its AWS business unit. As I've explained over and over in this newsletter, cloud and AI are intertwined.


How?

Indeed, the current AI revolution (for now) sits at the intersection of a natural language computing interface (a further step in the computing revolution), enterprise cloud computing (which provides the AI supercomputers used to pre-train models like GPT), and a new consumer paradigm based on generation rather than consumption.

Let me explain each of these three elements, as they are crucial to fully grasp the current AI paradigm, and then we'll move to Amazon's AI strategy!

Natural language computing interface

My main argument is that we tend to overestimate current trends because we zoom in too much, as if what we have today is all there is.

What do I mean?

Many people today look at the current AI paradigm and assume it is something completely new, a brand-new technology trend.

But I argue that is not the case: this is all part of the same continuum, which started in the 1940s-50s with the computing revolution.

When looking at current historical developments, it's critical to ask: how would I view this trend a hundred years from now?

In other words, we started with the first general-purpose electronic computer, the ENIAC, built by John W. Mauchly and J. Presper Eckert at the University of Pennsylvania in the mid-1940s.

The ENIAC (Electronic Numerical Integrator and Computer) stored numbers as words of 10 decimal digits, rather than in binary as some earlier automated calculators/computers did.

It ran on nearly 18,000 vacuum tubes.

And do you know what kind of machine is comparable to it today? Here you go!

A CASIO scientific calculator, available at Target for $11.79!

In short, we moved through Moore's Law to today, where hardware got exponentially better and software followed an even steeper improvement trajectory.

Thus, today the computing revolution is entering its final stage, where we'll finally be able to embed it into anything!
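The "exponentially better" point about Moore's Law can be made concrete with a simple doubling formula. As a rough sketch, assuming the common rule of thumb that transistor counts double about every two years (so T ≈ 2 years; an approximation, not a precise law), the growth over five decades works out as:

```latex
N(t) = N_0 \cdot 2^{(t - t_0)/T}, \qquad T \approx 2 \text{ years}

\frac{N(t_0 + 50)}{N_0} = 2^{50/2} = 2^{25} \approx 3.4 \times 10^{7}
```

Under that assumption, fifty years of doubling yields roughly a 34-million-fold increase, which helps illustrate how room-sized computing power ended up in a cheap pocket calculator.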

Enterprise cloud computing

Another key element of this paradigm is the cloud infrastructure, which lent itself perfectly to the current AI revolution and became the de facto underlying computing infrastructure for AI models.

For instance, OpenAI had to turn to Microsoft to bring its GPT-3 AI model to life.

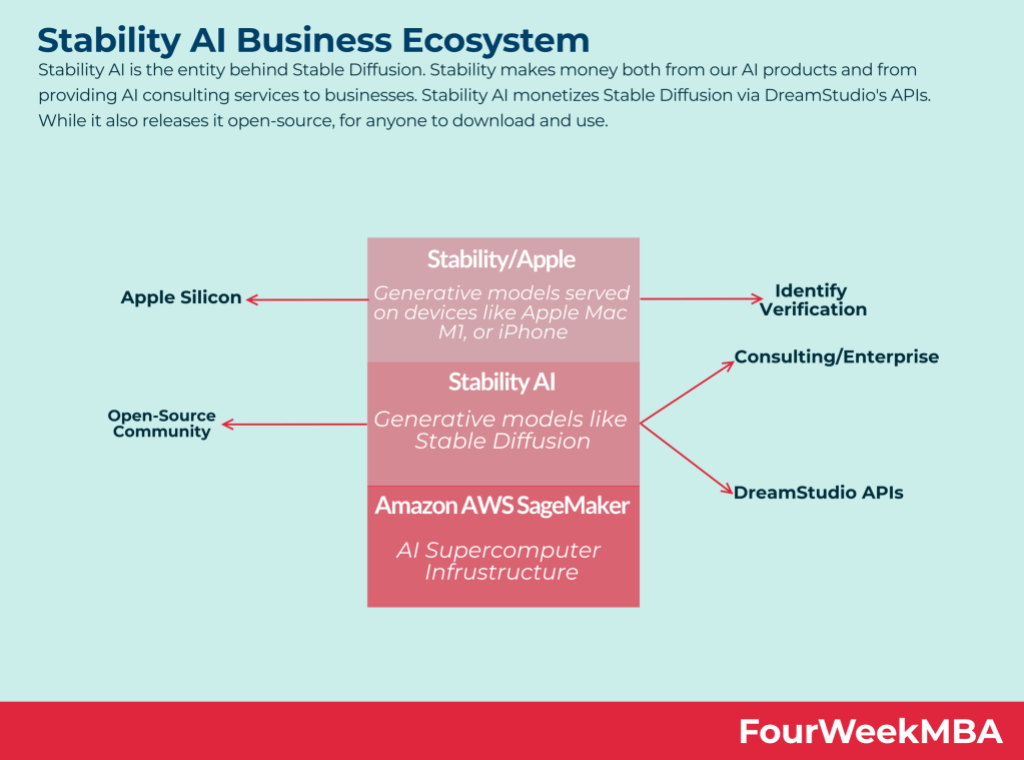

And Stability AI had to turn to Amazon AWS to bring Stable Diffusion to life!

I could go on, but the main point is that the full technology stack is transitioning to enable the current AI paradigm.

New consumer paradigm based on generation and outcome

Today, generating code has become so cheap that the whole software industry is undergoing an incredible revolution.

In the previous software era, you could build smart applications around very narrow use cases; that approach might no longer make sense in the future.

In short, in the new software paradigm, either you build something extremely valuable, or the software won't make much sense, as users will easily be able to replicate it on their own devices.

A valuable consumer application has to have proprietary data (which is hard to find anywhere else), smart integrations, and a physical component, which can't be replicated by the AI.

These will be some key ingredients to build AI moats at consumer level.

On a business-to-business level, I believe there will still be a lot of room for specialized/smart applications, but to survive, those need to get 10-100x better!

In addition, as we fully shift into the agentic AI era, we’ll see more and more business models moving toward “outcome” or “pay-per-work-done” pricing rather than paying for output or simple consumption!


This will be a fundamental shift!
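To illustrate the difference between consumption pricing and outcome pricing, here is a minimal Python sketch. The token counts, the price per 1,000 tokens, and the price per completed task are all hypothetical numbers chosen for illustration, not any vendor's actual rates.

```python
from dataclasses import dataclass


@dataclass
class AgentRun:
    """One attempt by an AI agent at a task (all figures are hypothetical)."""
    tokens_used: int
    succeeded: bool


def consumption_bill(runs: list[AgentRun], price_per_1k_tokens: float) -> float:
    """Consumption model: the customer pays for every token, even on failed runs."""
    total_tokens = sum(run.tokens_used for run in runs)
    return total_tokens / 1000 * price_per_1k_tokens


def outcome_bill(runs: list[AgentRun], price_per_success: float) -> float:
    """Outcome model: the customer pays only for tasks the agent actually completed."""
    completed = sum(1 for run in runs if run.succeeded)
    return completed * price_per_success


# Hypothetical example: three runs, one of which fails.
runs = [AgentRun(50_000, True), AgentRun(80_000, False), AgentRun(30_000, True)]
print(consumption_bill(runs, price_per_1k_tokens=0.01))  # all 160k tokens are billed
print(outcome_bill(runs, price_per_success=2.0))         # only the 2 completed tasks are billed
```

The design point: under consumption pricing, the failed run still costs the customer; under outcome pricing, the vendor absorbs the cost of failures, which pushes it to make its agents more reliable.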

The Current AI Landscape: A Convergence of Innovations

Before delving into Amazon’s strategy, let me recap what I’ve explained so far. The AI paradigm has three pivotal elements shaping today’s technological landscape:

Natural Language Computing Interface:

AI interfaces like ChatGPT represent the culmination of decades of advancements in computing, tracing back to foundational breakthroughs like ENIAC in the 1940s. These interfaces mark the evolution of human-computer interaction, simplifying complex tasks and unlocking new efficiencies.

Enterprise Cloud Computing:

AI thrives on robust cloud infrastructures. Companies like OpenAI (via Microsoft Azure) and Stability AI (via AWS) rely on cloud computing to train and deploy powerful models like GPT-3 and Stable Diffusion. This symbiosis between cloud and AI has made scalable, high-performance computing more accessible than ever.

A New Consumer Paradigm:

As AI tools become mainstream, they emphasize generation/outcome/work-done over consumption. Applications must offer proprietary data, seamless integrations, and physical elements to stand out in this transformative era.

Enter Amazon's Powerful Dual AI Move!

Just a few days after Amazon announced its further financing and tightened partnership with Anthropic, the company is now also developing its own AI chips to challenge Nvidia’s dominance in the $100 billion AI chip market.

Engineers at its Austin lab are tackling complex hardware development to reduce Amazon Web Services’ dependency on external suppliers. Although Nvidia remains dominant, Amazon is taking a big shot at it.

In fact, Amazon’s intensified partnership with Anthropic, marked by an additional $4 billion investment, complements its in-house AI chip development efforts.

By integrating Anthropic’s advanced AI models, such as Claude, into its Amazon Web Services (AWS) platform, Amazon enhances its AI capabilities.

Simultaneously, developing proprietary AI chips aims to reduce reliance on external suppliers like Nvidia.

This dual strategy positions Amazon to offer more efficient, cost-effective AI solutions, potentially enabling it to keep its dominance in the cloud space for the next 10-20 years!

To understand how important the AI move is to Amazon, consider that, according to some tech insiders, Jeff Bezos is personally on top of it, holding discussions and dinners with key players in the AI space.

Below are some key remarks about Amazon's AI approach:

Machine learning (ML) is transforming businesses across industries due to scalable compute capacity, data proliferation, and ML technology advancements.

Generative AI applications like ChatGPT have gained attention; Amazon has used AI and ML for over 20 years in e-commerce, fulfillment, supply chain, Prime Air, Amazon Go, and Alexa.

AWS democratizes ML and offers a broad portfolio of AI and ML services, including Amazon SageMaker and AI capabilities like image recognition and forecasting.

Generative AI creates new content (e.g., conversations, stories, images) using Foundation Models (FMs) with billions of parameters, such as GPT-4, Claude, and Gemini.

FMs can perform diverse tasks and be customized for domain-specific functions, leading to unique customer experiences in industries like banking, travel, and healthcare.

The Amazon AI offering moves along three lines of business:

Amazon Bedrock, a new service, makes FMs accessible via an API, allowing customers to build and scale generative AI applications, customize models, and integrate them into applications. Bedrock offers a range of FMs, including Jurassic-2, Claude, and Stable Diffusion, and introduces Amazon's Titan FMs for tasks like summarization, text generation, and information extraction.

Amazon EC2 Trn1n instances (powered by AWS Trainium) and Amazon EC2 Inf2 instances (powered by AWS Inferentia2) provide cost-effective cloud infrastructure for generative AI.

Amazon CodeWhisperer, an AI coding companion, improves developer productivity by generating code suggestions based on natural language comments and prior code in IDEs. CodeWhisperer is available for multiple programming languages, includes built-in security scanning, and is free for individual users. A Professional Tier is available for business users.

This offering will become critical as we move into the new phase of agentic AI!
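To make the Bedrock point concrete, here is a minimal Python sketch of calling a Claude model through Bedrock's runtime API with the AWS SDK for Python (boto3). It assumes boto3 is installed, AWS credentials are configured, and your account has been granted access to the model; the model ID (Claude 3 Haiku) and the us-east-1 region are illustrative choices, so check which models are enabled in your own region.

```python
import json

# Assumption: an illustrative Claude model ID; verify availability in your Bedrock region.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_claude_request(prompt: str, max_tokens: int = 256) -> str:
    """Build the JSON body Bedrock expects for Anthropic's Messages format."""
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return json.dumps(body)


def ask_claude(prompt: str, region: str = "us-east-1") -> str:
    """Send the prompt through Bedrock (requires boto3, AWS credentials, and model access)."""
    import boto3  # imported here so the request builder works without the AWS SDK installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_claude_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # Claude returns a list of content blocks; the first one holds the text reply.
    return payload["content"][0]["text"]
```

Calling `ask_claude("Summarize this quarter's support tickets")` would return the model's text reply. Because each provider on Bedrock expects its own request body, switching models with `invoke_model` means changing both `MODEL_ID` and the body; Bedrock's Converse API offers a more model-agnostic request format if that matters for your application.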


Deep Dive into Amazon’s Dual AI Strategy: Disrupting AI from Chips to Cloud

Amazon has firmly planted itself at the heart of the AI revolution with a robust dual approach: enhancing partnerships with AI pioneers like Anthropic and developing proprietary AI chips to challenge Nvidia's dominance.

This positions Amazon to not only reshape the AI chip market but also solidify its leadership in cloud computing for the next decade.

Amazon’s Strategy: From Cloud to Custom Hardware

1. Anthropic Partnership: AI Model Integration

Amazon recently fortified its partnership with Anthropic by injecting an additional $4 billion into the relationship. This strategic move allows AWS customers to leverage Anthropic’s cutting-edge AI models like Claude, making generative AI accessible and scalable via Amazon Bedrock.

Amazon Bedrock: Simplifies the use of generative AI by providing customers with APIs to build and customize AI applications using models like Claude and Amazon’s proprietary Titan FMs.

Consumer and Enterprise Edge: Integrating Anthropic’s expertise enhances Amazon’s ability to cater to both consumer and enterprise markets, providing AI-driven tools that are versatile and efficient.

2. In-House AI Chip Development

Amazon is tackling Nvidia’s dominance in the $100 billion AI chip market with an ambitious chip development project led by its Austin-based engineering hub. The initiative aims to build high-performance, cost-effective AI chips, reducing AWS’s reliance on external suppliers.

Engineering Hub: Operating like a startup within Amazon’s corporate ecosystem, the Austin lab focuses on overcoming the technical challenges of developing AI chips to rival Nvidia’s GPUs.

AI Chip Efficiency: Proprietary chips, such as AWS Trainium and Inferentia, already demonstrate Amazon’s commitment to optimized AI hardware tailored to its cloud infrastructure needs.

Amazon’s Long-Term Vision: Dual Competitive Advantage

By aligning its AI model partnerships with hardware innovation, Amazon aims to dominate two critical fronts:

Cloud AI Market: AWS continues to be a leader by integrating advanced models, like those from Anthropic, into its infrastructure, offering unparalleled tools for businesses to deploy AI at scale.

AI Hardware Space: Developing custom chips positions Amazon to reduce costs, control supply chains, and innovate faster, directly competing with Nvidia's stronghold.

This dual strategy cements Amazon’s position as both a cloud computing giant and an AI innovator. While competitors like Microsoft focus on cloud-AI synergies, Amazon’s foray into AI hardware adds another dimension to its arsenal.

What can go wrong?

Antitrust Oversight:

Governments and regulatory bodies globally are increasingly scrutinizing the monopolistic tendencies of tech giants.

Amazon’s $4 billion investment in Anthropic and its efforts to dominate the AI chip market could attract antitrust investigations.

Regulators may view these moves as stifling competition in both the AI and cloud computing sectors.

Nvidia’s Leading Role

While this is an entry point for Amazon, it’s important to remember Nvidia’s dominance in AI hardware: most of AWS’s AI infrastructure is still built on top of Nvidia!

Cloud Wars

Amazon is not the only company investing in chip infrastructure and vertical integration for the AI cloud.

Other leading players, like Microsoft (Azure) and Google (Google Cloud), are formidable competitors in the cloud-AI space, leveraging their own AI advancements and chip developments.

For instance, Google’s TPU chips and Microsoft’s Azure OpenAI service create direct competition for AWS.

Native AI Players

As the AI industry creates new demand, AI-native players will enter the market specifically to compete against Nvidia with vertically integrated chip solutions.

In addition, startups and other tech giants investing in custom AI chips, like Tesla and Meta, could outpace Amazon by delivering breakthrough technologies or specialized solutions.

Making AI Chips isn’t easy!

Chip Design and Fabrication Complexity:

Developing AI chips that rival Nvidia requires overcoming significant engineering challenges, such as improving power efficiency, scalability, and heat dissipation. Failures in these areas could lead to delays or underperforming products.

Manufacturing Dependency:

Chip fabrication is heavily reliant on a few key players, such as TSMC. Supply chain disruptions, geopolitical conflicts, or technological dependencies could stall Amazon’s chip ambitions.

Compatibility Issues:

Integrating new chips into AWS’s existing infrastructure without compromising performance or security poses a major technical challenge. Early iterations of custom chips may face adoption resistance from enterprise customers due to compatibility concerns or performance issues.

The Bigger Picture: Amazon vs. the World

Amazon’s intensified efforts signal a broader shift in the tech industry. Giants are moving away from reliance on external suppliers toward developing proprietary hardware and software ecosystems.

As Microsoft and Google reinforce their enterprise AI dominance, and as Apple potentially enters the consumer AI space, Amazon’s dual AI playbook could ensure its relevance across both dimensions for years to come.

While this bet is a very hard one to pull off, it’s still worth it for Amazon, as this is the path to keeping the company highly relevant for the next 30-50 years!


Ciao!

With ♥️ Gennaro, FourWeekMBA