The New AI Hardware Paradigm

The current AI paradigm is not just shifting the entire consumer industry. The most important change happening right now is that the entire infrastructure is getting rebuilt, almost entirely for AI!

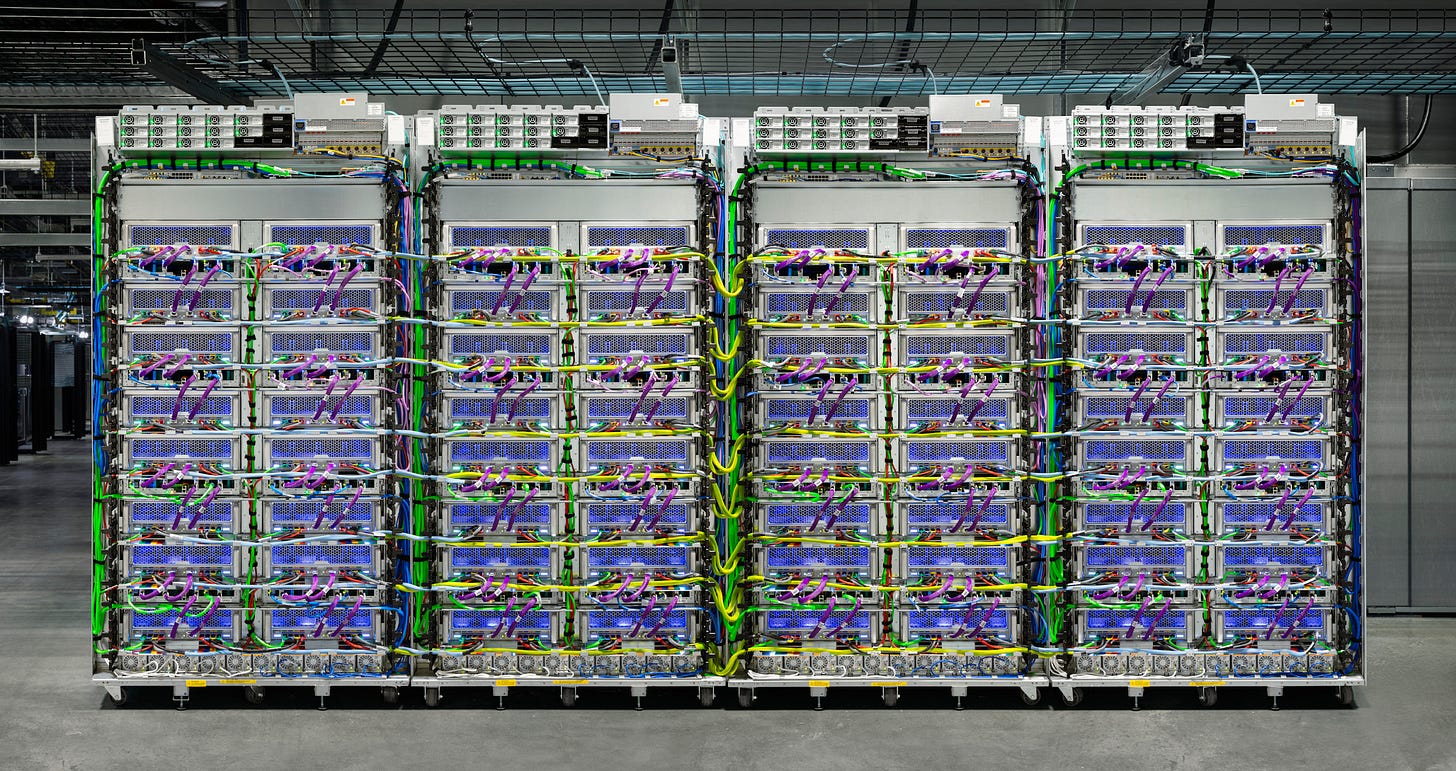

Indeed, as we speak, the cloud infrastructure of companies like Amazon AWS, Google Cloud, Microsoft Azure, Meta, Oracle, and more is getting completely rebuilt around a new kind of hardware: the AI chip!

As I’ve explained in Amazon's Dual AI Power Move, Amazon AWS right now is rebuilding its entire cloud architecture to embrace AI chips.

But how did we get here and what’s next?

Let’s start with how we got here. My aim is to help you decipher the next phases of this incredible revolution as an insider.

I know that from the outside it all sounds buzzy and noisy (indeed, it is!), so it’s easy to get cynical about it. Yet cynicism would make you miss the most important opportunity we have in front of us, probably bigger than the web itself!

Therefore, my role is exactly that: to give you clarity and a few simple mental models to deal with what’s coming next, so you can make the best of it!

How did we get here?

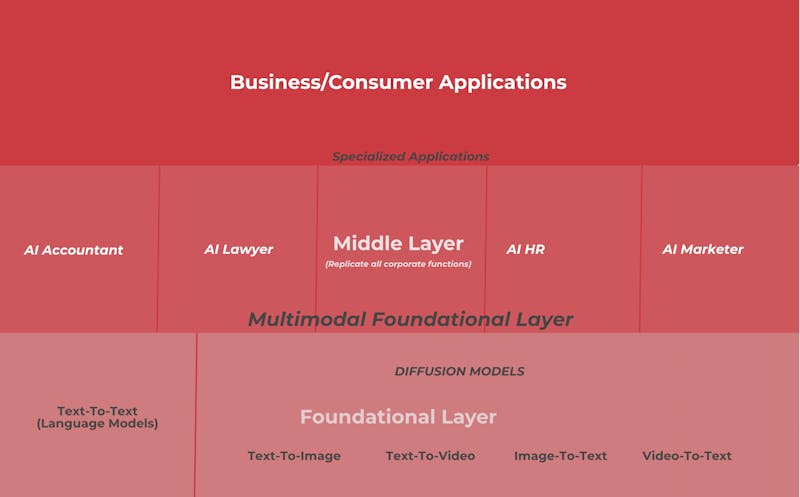

In the three layers of AI theory in the AI Business Models book, I explained in detail what the AI business ecosystem looks like.

There, I explained how, on the software side, the industry is developing according to three layers:

Foundational Layer: General-purpose engines like GPT-3, with features such as being multi-modal, driven by natural language, and adapting in real-time.

Middle Layer: Comprised of specialized vertical engines replicating corporate functions and building differentiation on data moats.

App Layer: Rise of specialized applications built on top of the middle layer, focusing on scaling up user base and utilizing feedback loops to create network effects.

Now, once you've understood that, let's see what - I argue - can create a competitive moat in AI.

This is part of a whole new Business Ecosystem.

In short:

Software: we moved from narrow and constrained to general and open-ended (the most powerful analogy at the consumer level is from search to conversational interfaces).

Hardware: we moved from CPUs to GPUs, powering up the current AI revolution.

Business/Consumer: the software industry is getting 10x better as we speak, simply by integrating OpenAI's API endpoints into existing software applications. Code is getting way cheaper, and barriers to entering the already competitive software industry are getting much, much lower. At the consumer level, first millions and now hundreds of millions of consumers across the world are getting used to a different way of consuming content online, which can be summarized as the move from indexed/static/non-personalized content to generative/dynamic/hyper-personalized experiences.

From CPUs to GPUs

The main hardware change we’ve seen in the last decades has been the transition from CPUs to GPUs.

The CPU has been the main driver of the computing revolution throughout the 1970s to the 2010s.

The first commercially available microprocessor, the Intel 4004, was introduced in November 1971. This 4-bit central processing unit (CPU) marked a significant advancement in computing technology, integrating the functions of a computer's central processing unit onto a single chip.

The CPU spurred the whole computing revolution, which led us to the mobile phone revolution by the 2010s!

Indeed, as of today the CPU still plays a prominent role in the mobile device you carry with you.

And to be clear, the CPU will remain a critical foundational layer on the hardware side. Yet many more reasoning functions of our devices will be taken over by the GPU layers on top.

And yet, for the AI revolution to happen, we needed something other than the CPU!

While there are many differences between the CPU and the GPU, one key takeaway is that the GPU introduced a whole new way of computing: from sequential to parallel!

Indeed, while the CPU operates largely sequentially, the GPU introduced a new architecture able to process data in parallel.

For that, the GPU was fundamentally different in terms of architecture:

CPU: Known as the "brains" of the computer, CPUs are optimized for single-threaded performance and low-latency tasks. They consist of a few powerful cores capable of handling a wide range of operations, making them suitable for general-purpose computing tasks.

GPU: GPUs are instead designed with a massively parallel architecture, featuring thousands of smaller, efficient cores that can handle multiple tasks simultaneously. This design makes them ideal for tasks that can be parallelized, such as rendering graphics and processing large blocks of data in parallel.
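
To make the sequential vs. parallel contrast concrete, here is a minimal sketch in Python. The `brighten` operation and the sample pixel values are made up for illustration, and Python threads only mimic the structure of parallel execution; they are an assumption standing in for the thousands of hardware cores a real GPU uses, not a model of its speed.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int) -> int:
    """Per-pixel operation: each result depends only on its own input."""
    return min(pixel + 40, 255)


def run_sequential(pixels: list[int]) -> list[int]:
    # CPU-style: one worker walks the data one element after another.
    out = []
    for p in pixels:
        out.append(brighten(p))
    return out


def run_parallel(pixels: list[int], workers: int = 4) -> list[int]:
    # GPU-style in spirit: the same operation is handed to several workers
    # at once. Results come back in the original order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(brighten, pixels))


pixels = [0, 100, 200, 250]  # hypothetical grayscale values
print(run_sequential(pixels))
print(run_parallel(pixels))
```

Both functions return the same list. The point is that no pixel needs to wait for any other pixel, which is exactly the kind of workload that can be spread across many small cores.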

Therefore, the shift from the CPU to the GPU is really a computational paradigm shift!

In fact:

CPUs: Excel at executing a variety of tasks sequentially, making them versatile for different computing needs. They are adept at handling complex computations and tasks that require significant control and flexibility.

GPUs: Specialize in performing repetitive calculations across large datasets simultaneously, which is essential for rendering images and videos. Their parallel processing capabilities enable them to handle multiple operations at once, significantly speeding up tasks like image processing and machine learning.

This is a critical passage to understand, as the GPU moved us away from deterministic computing (the machine executes a very narrow/specific task, based on an input) to probabilistic computing (more precisely called probabilistic modeling), where the machine can make predictions across a wide array of areas given the underlying data. That’s the foundation of Generative AI.

We might see a similar revolution from parallel computing (GPU-Driven) to quantum computing in the coming decades, but we’re still early there for now…

But this is the kind of architectural/computational change the GPU enabled.

That is why GPUs have become a critical component for AI computing.

NVIDIA debuted the first GPU in 1999 to render 3D images for video games. Gaming requires a massive amount of parallel calculations.

From there, GPUs rose to fame, thanks to the transition from gaming to using graphics servers for blockbuster movies.

Yet GPUs also started to be used by scientists and researchers, plugged into the world’s largest supercomputers to study everything from chemistry to astrophysics.

AI computing emerged (over a decade ago) as the need for parallel processing became critical for developing large AI models.

Back in 1994, Sony used the term GPU as part of the launch of its PS1. Yet, by 1999, NVIDIA popularized the term with the launch of its GeForce 256. Definitely, the launch of the GeForce 256 was extremely effective from a marketing standpoint. Indeed, defined as “the world’s first GPU,” it created a category in its own right.

By 2006, NVIDIA launched CUDA, a general-purpose programming model. This accelerated the development of applications for various industries, from aerospace to bio-science research, mechanical and fluid simulations, and energy exploration.

NVIDIA has been the leader in the GPU space, starting with a focus on PC graphics. Nowadays, the company focuses on 3D graphics due to the exponential growth in the gaming market. At the same time, NVIDIA also represents the physical platform of entire industries.

From scientific computing to artificial intelligence, data science, autonomous vehicles (AV), robotics, and augmented and virtual reality, NVIDIA really gives us an understanding of how tomorrow’s industries will evolve based on the physical capabilities offered by its chips.

While GPUs were initially applied primarily to gaming, over the years, running AI/ML algorithms that required massive computing power became increasingly relevant. Thus, the GPU has become the basis for the most promising industries of this decade: AI, autonomous driving, robotics, AR/VR, and more.

However, the current GPU is not enough…

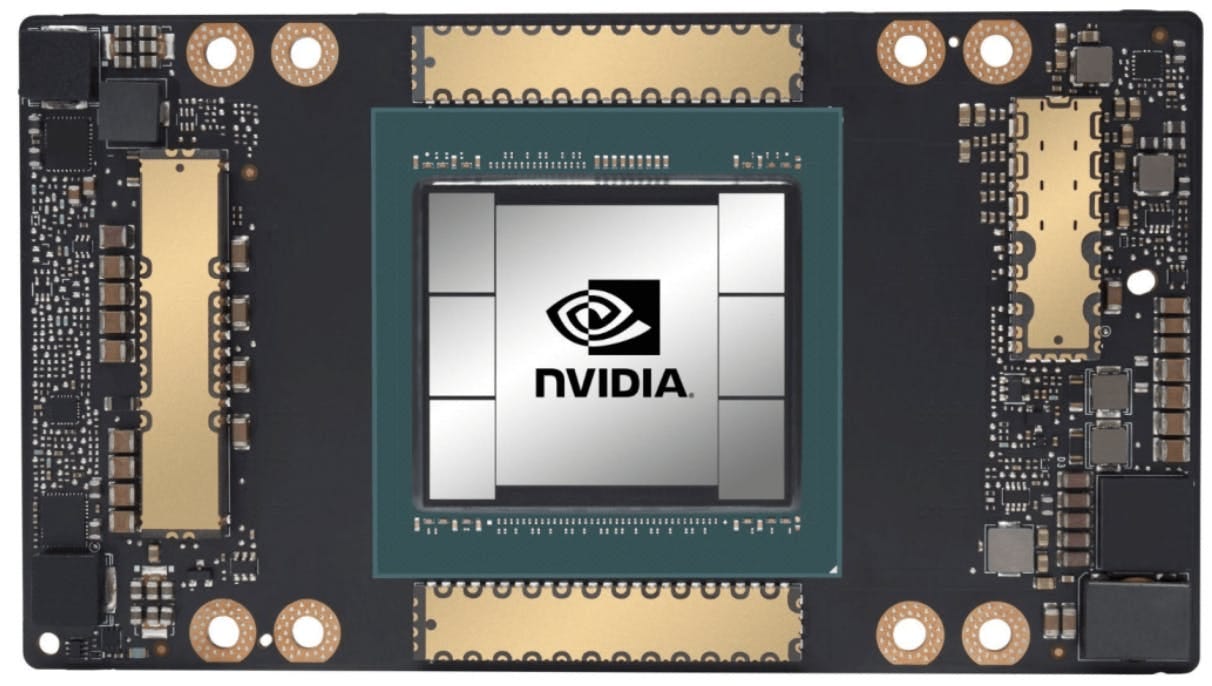

Enter the AI GPU

How is the AI GPU different from a traditional GPU?

First, in terms of architecture, which (as the name suggests) has been built specifically to handle the requirements of AI models:

AI GPUs: Purpose-built to accelerate specific AI computations, these chips feature specialized processing units like Tensor Cores in NVIDIA's Blackwell, which support lower-precision data types (e.g., FP4, FP6) to enhance throughput and efficiency in AI model training and inference.

Traditional GPUs: Originally developed for rendering graphics, traditional GPUs possess a more generalized architecture, making them versatile but less optimized for the unique demands of AI tasks.
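
To give a feel for why lower-precision data types raise throughput, here is a rough sketch of the trade-off. It uses plain uniform integer quantization as a stand-in, which is an assumption for illustration and not the actual FP4/FP6 floating-point formats; the weight values are also invented.

```python
def quantize(values: list[float], bits: int) -> tuple[list[int], float]:
    """Map floats onto a signed integer grid with the given bit width."""
    levels = 2 ** (bits - 1) - 1              # e.g. 7 for 4 bits
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / levels                  # size of one grid step
    return [round(v / scale) for v in values], scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Turn the integer codes back into approximate floats."""
    return [c * scale for c in codes]


# Hypothetical model weights, normally stored as 32-bit floats.
weights = [0.82, -0.35, 0.07, -0.91, 0.44, 0.0, -0.6, 0.28]
codes, scale = quantize(weights, bits=4)
restored = dequantize(codes, scale)

bytes_fp32 = len(weights) * 32 // 8
bytes_4bit = len(weights) * 4 // 8
worst_error = max(abs(w - r) for w, r in zip(weights, restored))

print(f"32-bit storage: {bytes_fp32} bytes, 4-bit storage: {bytes_4bit} bytes")
print(f"worst rounding error: {worst_error:.3f}, grid step: {scale:.3f}")
```

The same weights take one eighth of the memory at 4 bits, at the price of a rounding error of at most half a grid step. Less data to move per weight is a large part of why chips that support narrow formats natively can push more AI work through the same hardware.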

Also in terms of performance and efficiency:

AI GPUs: Deliver superior performance and energy efficiency for AI workloads. For instance, Amazon's Trainium offers up to 50% cost-to-train savings over comparable GPU-based instances, with Trn1 instances powered by Trainium being up to 25% more energy-efficient.

Traditional GPUs: While capable of handling AI tasks, traditional GPUs may not match the performance and efficiency levels of AI-specific chips, especially for large-scale AI model training.

And on security, which will be critical to enabling AI adoption at scale:

AI GPUs: Incorporate hardware-based security measures tailored for AI data protection. NVIDIA's Blackwell, for example, includes Confidential Computing capabilities to safeguard sensitive AI models and data.

Traditional GPUs: Generally lack specialized security features designed specifically for AI workloads, potentially necessitating additional security layers.

The same applies to Amazon AWS Trainium and to Google’s TPU, which, when clustered together, can be architected to build a massive AI supercomputer.

In a few passages, I’ll come back to this last piece and to why AI GPUs are critical right now!

For now, it’s critical to understand that the AI race is getting defined through foundational models innovation, customer acquisition, and infrastructure development.

Each of these players (OpenAI, Anthropic, Microsoft, Google, Meta, Apple…) is focusing its strategy on some key areas, but overall they are all competing on the same three core fronts:

1. Developing advanced generative AI models.

2. Winning customers by making AI applications practical and useful.

3. Building the infrastructure to support these efforts.

Numbers 1 and 2 would not be possible without number 3, that is, the underlying infrastructure needed to sustain the AI models (given their current scale) and consumer applications (given their massive and quick adoption).

How is the new AI Chip architecture different though, from a business perspective?

Built Both for Training And Inference

The key takeaway about the current AI infrastructure paradigm is this: new AI-specific chips like Amazon’s Trainium and NVIDIA’s Blackwell are designed for both training AI models and running (inference) those models efficiently.

Training vs. Inference:

Training: This phase involves teaching a model to recognize patterns by processing vast datasets, requiring substantial computational power and memory bandwidth to handle complex mathematical operations and large volumes of data.

Inference: In this phase, the trained model makes predictions or decisions based on new input data, emphasizing low-latency and energy-efficient computations to deliver real-time or near-real-time results.
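
The difference in workload between the two phases can be sketched with a toy example. Everything here is an assumption for illustration: a one-variable linear model, an invented dataset following y = 3x + 1, and plain gradient descent. Real AI models have billions of parameters, but the shape of the work is similar.

```python
def predict(weight: float, bias: float, x: float) -> float:
    """Inference: a single cheap forward pass with fixed parameters."""
    return weight * x + bias


def train(data: list[tuple[float, float]], epochs: int = 5000, lr: float = 0.01):
    """Training: many passes over the data, adjusting parameters each time."""
    weight, bias = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            error = predict(weight, bias, x) - y
            # Gradient of the mean squared error for each parameter.
            grad_w += 2 * error * x / n
            grad_b += 2 * error / n
        weight -= lr * grad_w
        bias -= lr * grad_b
    return weight, bias


# Toy dataset following y = 3x + 1 (invented for illustration).
data = [(x, 3 * x + 1) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]

weight, bias = train(data)               # heavy: 5000 passes over all examples
answer = predict(weight, bias, 10.0)     # light: one forward pass
print(round(answer, 2))
```

Training here runs 25,000 forward passes plus the parameter updates, while answering a new question takes a single pass. That asymmetry is why training stresses raw compute and memory bandwidth, while inference is judged on latency and energy per request.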

This dual-purpose capability means the billions spent on this infrastructure will create AI supercomputers that not only build larger models but also power the entire AI business ecosystem in the next 10-30 years!

This will also mean the emergence of whole new business models.

This is critical, as AI demand will be so massive that building the infrastructure to serve it will be instrumental.

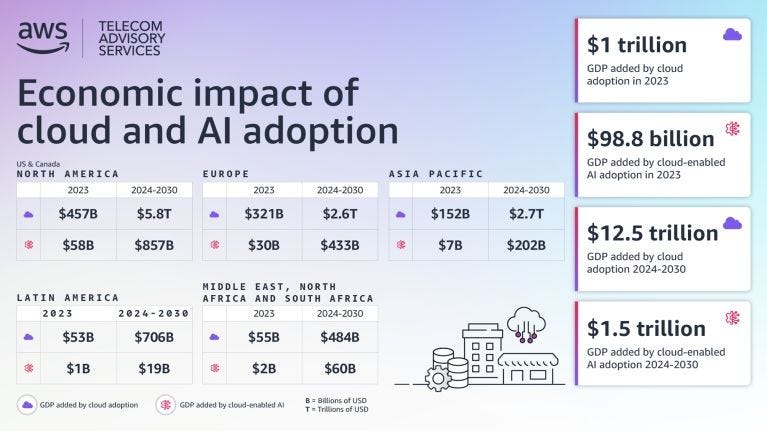

Indeed, Amazon AWS estimated that cloud adoption added $1 trillion to global GDP in 2023 and is projected to exceed $12 trillion by 2030, with AI contributing $1.5 trillion.

The good news? We’re still very early in the process!

The AI Convergence

As I’ve explained in The AI Convergence, the current AI industry developed on top of a new hardware paradigm (GPUs in the 2000s-2010s), a new software paradigm (the transformer in 2017), and a new business paradigm, and these all converged into the “ChatGPT moment” of November 2022.

And yet, that was only the start of it, and it caught everyone unprepared. What that led to was a “catch-up scenario” where demand is ramping up faster than supply.

This will create bottlenecks, not because there is too much buzz around it (which is true) but instead due to the lack of supply/infrastructure/tooling to serve that pent-up demand!

This is creating a cycle that might develop in three phases:

Phase 1: A layer on top of every existing industry (known knowns) - defined by linear technology and market expansion.

Phase 2: An enhancer for many developing/complementary industries (known unknowns) - defined by linear technology expansion, only partially linear market expansion, and non-linear market expansion.

Phase 3: The foundation for making whole new emerging industries viable (unknown unknowns) - defined as fully non-linear technology/market expansion.

What’s next?

At this moment, we are in phase 1, where the current AI paradigm sits on top of the existing web infrastructure (as a smarter layer) to enhance it.

Yet, while this will work to expand the market opportunity, it will also be quite limiting from a few perspectives:

Inadequate Cloud Infrastructure: While the current AI paradigm got built on GPU infrastructure, we’ll see a transition toward AI-specialized infrastructure over the next decade.

Existing (yet not AI-native) Market Use Cases: Right now, AI is building on top of the web, thus leveraging existing market demand to drive adoption. This means that you take any market that exists today and add AI on top to make it 10x better, and you’ve amplified the market. Take, for instance, the case of AI-powered search. While this works in the coming decade, it won’t work after that, when we’ll see the emergence of whole new commercial use cases, natively built for AI!

Expanding yet Trapped Market Demand (for the next decade): The above will create a “trapped market demand scenario,” where it might take a decade before we discover entirely new use cases built specifically for AI. To be clear, this is a normal circumstance of the tech cycle, which might be very similar to how the commercial Internet developed.

This phase might last for a decade, and it will be the foundation before we move to the next phase, where we’ll see the emergence of a whole new set of commercial use cases, along with market demand for these use cases, all powered by the new AI infrastructure that is getting built today!

What does it mean practically?

This is only a directional take, but in short, based on the above, we might see the AI industry develop in this direction.

AI-Specialized Infrastructure

Cloud Infrastructure and Computing: Transition from general-purpose GPU infrastructure to AI-specialized hardware (e.g., AI-specific chips, custom processors).

Edge Computing: Growth in low-latency AI processing at the edge for IoT, robotics, and real-time applications.

Quantum Computing: Advancements to handle complex AI models beyond traditional computing capabilities.

Energy-Efficient AI: Development of infrastructure focused on reducing the carbon footprint of AI training and inference.

Existing Market Use Cases (AI-Enhanced Traditional Industries)

Search Engines and Information Retrieval: AI-driven search engines like Perplexity AI enhancing relevance and user experience.

Healthcare:

Medical imaging and diagnostics enhanced with AI.

Personalized medicine through genomics and patient data analysis.

Education:

Personalized learning platforms.

AI tutors for adaptive learning.

Content Creation and Media:

AI-powered tools for video, image, and music production.

Automated journalism and text-to-speech platforms.

Customer Support: Virtual assistants and chatbots transforming helpdesk operations.

E-commerce: AI-powered recommendation engines, dynamic pricing, and personalized shopping experiences.

Financial Services:

Fraud detection.

Automated investment strategies.

Logistics and Supply Chain:

AI for route optimization.

Predictive inventory management.

Expanding, Yet Trapped Market Demand

This will be the most interesting phase, and yet we might only start to see it in full swing in the next 10-20 years.

Emerging Industries Built Natively for AI

Generative AI Products:

New content types (e.g., AI-generated virtual environments, interactive narratives).

AI-generated simulations for training and decision-making.

Autonomous Systems:

Self-driving vehicles tailored for AI-native traffic systems.

Fully autonomous drones and robotics.

Synthetic Biology:

AI-driven drug discovery and bioengineering.

Creation of synthetic organisms for industrial applications.

AI-Native Financial Platforms:

Autonomous trading systems with minimal human oversight.

AI-managed decentralized finance (DeFi) ecosystems.

Immersive AI-Driven Experiences:

AI-native virtual reality (VR) and augmented reality (AR) applications.

AI-generated metaverse environments.

Recap: In This Issue

Paradigm Shift in AI and Cloud Infrastructure:

The current AI wave is not just reshaping consumer applications but fundamentally rebuilding global cloud infrastructure using AI chips.

Major players like Amazon AWS, Google Cloud, and Microsoft Azure are redesigning their systems to optimize AI workloads.

Hardware Evolution - From CPUs to GPUs to AI Chips:

CPUs dominated the computing landscape from the 1970s to 2010s, driving the mobile revolution.

GPUs introduced parallel computing, a critical advancement enabling AI's rise by efficiently handling massive data and computations.

AI GPUs, like NVIDIA's Blackwell and Amazon Trainium, are purpose-built to accelerate training and inference, representing a leap from traditional GPUs.

New AI Business Ecosystem Layers:

Foundational Layer: General-purpose AI engines (e.g., GPT-3) for diverse applications.

Middle Layer: Specialized AI for corporate functions and data differentiation.

App Layer: Consumer-focused, hyper-personalized AI applications built atop the middle layer.

Training and Inference Unified in AI GPUs:

AI GPUs are dual-purpose, streamlining infrastructure investments by supporting both the creation (training) and deployment (inference) of AI models.

This duality enables efficient scaling for foundational models and consumer-facing AI applications.

Economic and Strategic Importance:

Investments in AI-specific infrastructure are foundational, powering the entire AI business ecosystem for decades.

AI-driven cloud infrastructure is projected to add $1.5 trillion to the global economy by 2030.

Bottlenecks and Future Growth:

The current infrastructure lags behind the surging demand for AI, creating a supply bottleneck.

The transition to AI-specialized systems will unlock new industries and use cases, expanding markets exponentially.

Phased AI Evolution:

Phase 1: Enhancing existing industries with AI (e.g., smarter search, better analytics).

Phase 2: Complementary industry growth with AI enhancements.

Phase 3: Creation of entirely new industries and markets driven by advanced AI capabilities.

The AI Convergence:

AI's trajectory is defined by hardware advancements (GPUs, AI chips), software innovations (transformer models), and business adoption.

Current AI, built on GPUs, will transition toward more specialized hardware, unlocking unprecedented capabilities.

With ♥️ Gennaro, FourWeekMBA