AI Moats

I analyzed the AI landscape in late 2022, and came up with a framework to understand how to build competitive moats in the new AI paradigm. The below is my full perspective on that, updated based on current developments!

As soon as I saw ChatGPT at the end of November 2022, I deeply considered the nature of competition (as it developed) in the AI industry.

I wrote the below in early December 2022, and I think it gets confirmed more and more each day as the AI industry develops.

I’ve added a few more points and paragraphs based on current developments, but today's main question remains.

Indeed, the main question that kept popping up in my head was: If we all build tools on top of ChatGPT/OpenAI or a few similar models, how can we build competitive moats?

In other words, how can we build a company on top of AI with a long-term advantage that cannot be easily commoditized?

My answer is the same as it was two years ago: you can absolutely build AI moats even if you're not operating at the foundational layer!

I think there are a few things to take into account here.

As we went along this intense journey, where nearly two years have felt like a decade, what I'll explain below has actually been confirmed.

We saw startups like Perplexity AI - outside pure foundational players like OpenAI, who will need to spend billions on infrastructure alone - become valuable companies.

In reality, as I’ll explain below, valuable companies will emerge in each layer of the AI industry, but each layer will have a completely different logic.

Let me explain!

In the three layers of AI theory in the AI Business Models book, I explained in detail what the AI business ecosystem looks like.

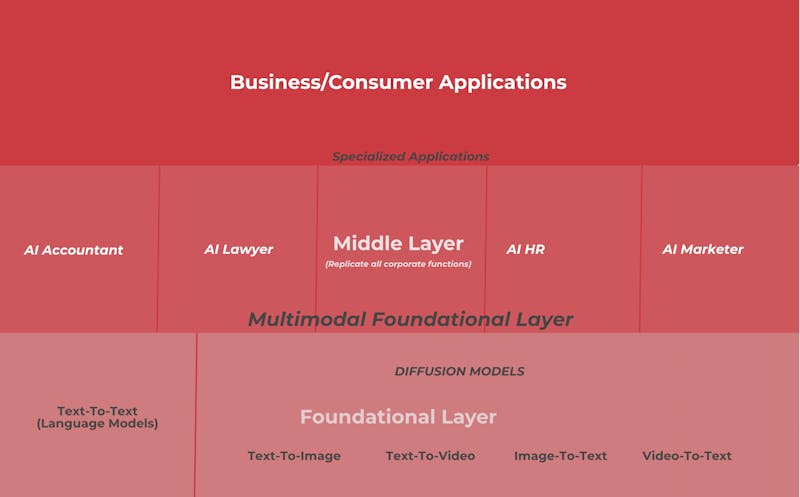

There, I explained how, on the software side, the industry is developing according to three layers:

Foundational Layer: General-purpose engines like GPT-3, with features such as being multi-modal, driven by natural language, and adapting in real-time.

Middle Layer: Comprised of specialized vertical engines replicating corporate functions and building differentiation on data moats.

App Layer: Rise of specialized applications built on top of the middle layer, focusing on scaling up user base and utilizing feedback loops to create network effects.

Now, once you've understood that, let's see what - I argue - can create a competitive moat in AI.

If you need more clarity on it, jump here and read it all!

This is part of a whole new Business Ecosystem.

In short:

Software: we moved from narrow and constrained to general and open-ended (the most powerful analogy at the consumer level is from search to conversational interfaces).

Hardware: we moved from CPUs to GPUs, powering up the current AI revolution.

Business/Consumer: the software industry is getting 10x better as we speak simply by integrating OpenAI's API endpoints into existing software applications. Code is getting way cheaper, and barriers to entering the already competitive software industry are getting much, much lower. At the consumer level, first millions, and now hundreds of millions of consumers across the world are getting used to a different way to consume content online, which can be summarized as the move from indexed/static/non-personalized content to generative/dynamic/hyper-personalized experiences.

Remixing foundational models (GPT, Claude, Meta AI, DALL-E, Stable Diffusion, Midjourney, and so forth)

While there are still some arbitrage opportunities, those are quickly shrinking!

Legacy/foundational models (the general-purpose engines like GPT-4, Anthropic's Claude, Stable Diffusion, and so forth) are getting better and better at handling multiple modalities, to the point of becoming fully multimodal!

If you're building an AI-based product, you can use this multimodality to add impressive features at 1/100 the cost and effort...

So, for instance, if you're building an AI product, you can re-mix these models, too.

One example of this “agnosticism” is Perplexity AI, which runs multiple LLMs in the backend of its product and switches between them based on which one serves the best answers, while also letting users pick the model they prefer.
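To make the idea of model "agnosticism" concrete, here is a minimal sketch of a router that picks among several LLM backends, with an optional user override. It is not how Perplexity AI actually works internally (that is not public in this piece); the backend names, the `strengths` tags, and the keyword-based `classify_task` helper are all hypothetical and only illustrate the pattern.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, Optional


@dataclass(frozen=True)
class Backend:
    name: str
    generate: Callable[[str], str]  # stand-in for a real API call (assumption)
    strengths: FrozenSet[str]       # task types we assume this backend handles well


def classify_task(query: str) -> str:
    """Very rough keyword-based task classifier, for illustration only."""
    q = query.lower()
    if any(word in q for word in ("code", "function", "bug")):
        return "coding"
    if any(word in q for word in ("latest", "today", "news")):
        return "fresh_facts"
    return "general"


def route(query: str, backends: Dict[str, Backend],
          user_choice: Optional[str] = None) -> Backend:
    """Pick a backend: honor the user's explicit choice, else match by task type."""
    if user_choice is not None:
        return backends[user_choice]
    task = classify_task(query)
    for backend in backends.values():
        if task in backend.strengths:
            return backend
    # Fall back to the first registered backend if nothing matches.
    return next(iter(backends.values()))


# Hypothetical backends; each "generate" just echoes which model answered.
BACKENDS = {
    "model_a": Backend("model_a", lambda p: f"[model_a] {p}", frozenset({"general"})),
    "model_b": Backend("model_b", lambda p: f"[model_b] {p}", frozenset({"coding"})),
    "model_c": Backend("model_c", lambda p: f"[model_c] {p}", frozenset({"fresh_facts"})),
}
```

In a real product the classifier would likely be learned from user feedback rather than keywords, which is exactly where the UX and data advantages discussed later come in.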

The interesting take here is that, as foundational players fiercely compete for a piece of the massive API cake, they are all slashing the prices of their APIs, thus making it extremely cheap for any startup to build a new AI tool, as long as it can create a compelling UX!

This window of opportunity might exist for the next five years as the API market consolidates around a few players enjoying winner-take-all effects!

Of course, chances are that this market might become an oligopoly, where a few players control most of it and thus capture a good chunk of the value, creating an incredible moat.

That's because if you're OpenAI, you can afford to carry a large generative model like GPT-4 forward. And yet, even for those foundational players, it won't be easy to keep a moat, as they'll need to invest in chip infrastructure.

Thus, while we see the development of the foundational industry as a matter of the symbiotic relationship between LLM players and large Big Tech (e.g., OpenAI/Microsoft ticket), in reality, for a company like OpenAI to strike a self-sustaining business model, it will need to control the whole chip supply chain!

That endeavor won’t be cheap, and it will require OpenAI to raise or generate over a hundred billion dollars to sustain it!

While many analysts today assume that we'll live in a world where most big tech players will have swallowed LLM companies like OpenAI and Anthropic, in reality, assuming these companies manage to avoid bankruptcy in the next five years (OpenAI is burning cash much faster than it's generating revenue, even if we take into account its hugely impressive growth!), we might see the opposite scenario, where these foundational players have become trillion-dollar companies!

This is exactly a case where the short term is absolutely unpredictable (we have no clue whether OpenAI will manage to withstand its cash burn in the next 2-3 years). Yet, if survival ensues, the long-term scenario is that these native generative AI players might swallow Big Tech in a phenomenon I like to call the “Reverse Kronos Effect.”

If you're a small startup, trying to do it from scratch might be much harder.

The more these foundational models evolve, the more difficult the barriers to entry will be to break, thus generating a leap ahead for foundational layer players like OpenAI, Anthropic, and the rest.

OpenAI and other foundational layer organizations might capture value through open APIs, as they do today.

And those might really become a sort of App Store for AI applications, where they will be able to tax each of the AI tools developed on top of each ecosystem, thus capturing value from that, similar to what happens today with Apple's App Store.

For that to happen, though, foundational players will need to develop:

An integrated supply chain of chips they can control.

A combination of smaller and larger LLMs for several use cases (both horizontal and vertical), so as to capture a good chunk of the value in the industry via their APIs and consumer applications.

A native AI hardware device that can be rethought for the new paradigm.

Data moats

AI models right now might have become extremely good at many tasks.

However, to make them relevant for companies at an enterprise level or for users at specific applications, data becomes critical to enabling the model to be customized based on the tech stack (for enterprise companies) and the context (for users) it sits on.

For instance, imagine an organization that wants to leverage AI to deliver custom experiences to its users, such as a company that wants to build a very specialized chatbot for support.

Of course, it can do that in many ways:

Train it on the content the company has built over the years.

Build a Q&A dataset based on users' most frequent requests.

Tackle much more precise, conversion-related questions based on CRM data.

In short, the enterprise organization will use this first-party data to integrate it into an AI model and make it as relevant as possible.

That is how AI applications become valuable.
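As an illustration of the second option above (a Q&A dataset built from users' most frequent requests), here is a minimal sketch that ranks support questions by frequency and emits them as JSONL records. The ticket fields (`question`, `answer`) are hypothetical, and the chat-style `messages`/`role`/`content` layout is a common convention rather than a guaranteed format: check your provider's fine-tuning documentation before relying on it.

```python
import json
from collections import Counter
from typing import Dict, List


def normalize(question: str) -> str:
    """Lowercase, drop trailing punctuation, and collapse whitespace."""
    return " ".join(question.lower().strip().rstrip("?!.").split())


def build_qa_dataset(tickets: List[Dict[str, str]], top_n: int = 2) -> List[dict]:
    """Keep the top_n most frequent questions, paired with their latest answer."""
    counts = Counter(normalize(t["question"]) for t in tickets)
    # Later tickets overwrite earlier ones, so the most recent answer wins.
    answers = {normalize(t["question"]): t["answer"] for t in tickets}
    records = []
    for question, _count in counts.most_common(top_n):
        records.append({
            "messages": [
                {"role": "user", "content": question},
                {"role": "assistant", "content": answers[question]},
            ]
        })
    return records


def to_jsonl(records: List[dict]) -> str:
    """Serialize one JSON object per line."""
    return "\n".join(json.dumps(record) for record in records)


# Hypothetical first-party support data.
SAMPLE_TICKETS = [
    {"question": "How do I reset my password?", "answer": "Use the 'Forgot password' link."},
    {"question": "Where is my invoice?", "answer": "Invoices are under Billing."},
    {"question": "how do I reset my password", "answer": "Use the 'Forgot password' link."},
    {"question": "Can I export my data?", "answer": "Yes, from Settings."},
    {"question": "Where is my invoice", "answer": "Invoices are under Billing."},
    {"question": "How do I reset my password?", "answer": "Use the 'Forgot password' link."},
]
```

Calling `to_jsonl(build_qa_dataset(SAMPLE_TICKETS))` yields two lines, one per frequent question. The real work in practice is the curation step: deciding which answers are good enough to teach the model.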

For that, it becomes critical:

Data Integration: understand what data is really relevant for the AI to become way better at specific tasks.

Data Curation: to understand how to clean the relevant first-party proprietary data that can be used to train the model.

Fine-tuning: foundational models are very powerful. However, they have been trained to perform many tasks. You can fine-tune the foundational model (by feeding contextual data and by tweaking these models) to generate much better outputs. Fine-tuning becomes, therefore, critical to making sure you can build a valuable AI product on top of existing foundational layers.

Middle-layer AI engines: another interesting element is that, as a middle-layer AI company, you can still build refined engines on top of existing foundational models. For instance, take the case of a company that builds an AI tool for resume generation. You can still add an AI engine on top of the foundational model that handles rephrasing, further grammar checking, plagiarism detection, and more, which will be a value-added layer on top of the foundational layer! That is how you can transform a standardized output from the foundational, general-purpose engine into something way more specific.
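The resume example can be sketched as a small pipeline: a generic draft comes out of the foundational model, and the middle-layer engine runs its own refinement steps on top. Everything here is a toy assumption: `fake_foundation_model` stands in for a real API call, and the string-based steps stand in for real rephrasing and grammar engines.

```python
from typing import Callable, List

Step = Callable[[str], str]


def fake_foundation_model(prompt: str) -> str:
    """Hypothetical stand-in for a foundational model call; returns a generic draft."""
    return "i was responsible for managing a team of 5 engineers.  i helped with hiring"


def tidy_whitespace(text: str) -> str:
    """Collapse runs of whitespace into single spaces."""
    return " ".join(text.split())


def strengthen_phrasing(text: str) -> str:
    """Swap weak resume phrases for stronger verbs (toy rephrasing step)."""
    replacements = {
        "was responsible for managing": "managed",
        "helped with": "supported",
    }
    for weak, strong in replacements.items():
        text = text.replace(weak, strong)
    return text


def fix_capitalization(text: str) -> str:
    """Capitalize each sentence and end the text with a period (toy grammar step)."""
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    return ". ".join(s[0].upper() + s[1:] for s in sentences) + "."


def run_engine(prompt: str, model: Callable[[str], str], steps: List[Step]) -> str:
    """Generate a draft with the foundational model, then apply each step in order."""
    text = model(prompt)
    for step in steps:
        text = step(text)
    return text


RESUME_STEPS: List[Step] = [tidy_whitespace, strengthen_phrasing, fix_capitalization]
```

The foundational model is interchangeable here; the differentiated part is the list of steps, which is where a middle-layer company encodes its domain knowledge.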

While data will be a critical element of building an AI moat, companies will need to strike a balance between two main types of data:

Synthetic: data that is artificially generated by computer algorithms, simulations, or models rather than being collected from real-world events or measurements.

Curated: real-world data that has been carefully selected, cleaned, and organized by experts to meet specific quality and usability standards.

For instance, Scale AI has built a $14 billion company (so far) by specializing in providing high-quality data for AI models and using curated data extensively to power AI applications.

Their curated data process involves collecting, annotating, and refining real-world datasets to help organizations develop, train, and optimize machine learning models.

Understanding which data will be precious to progress, at a point where the incremental benefits of scaling these LLMs might be decreasing, will be a hundred-billion-dollar race in a developing trillion-dollar industry!

For instance, a company like Google is experimenting a lot, striking deals with Reddit to figure out what user-generated data can be integrated, at scale, into an AI experience for users. That won't be an easy feat…

From prompt engineering to agentic AI?

For years, neural nets had been stuck until they started to do incredible things.

And the most interesting part?

Most of these interesting things were the result of scaling these networks.

In other words, once a new architecture (transformer-based architecture) has been employed, the rest of the work is achieved through scaling.

Now, there is an unpredictable component to scaling.

Just like, when you scale a company, after a certain threshold, you don't know how that company might change and what properties might emerge.

Various properties emerge when scaling neural networks based on the same architectures.

In biology, emergence (or how a complex system shows completely different behaviors from its parts, as the overall system depends upon the interactions between its parts) is extremely powerful.

Indeed, even in a real world that often looks fractal (the smaller resembles the larger), the much larger shows completely different properties!

This is one of the topics that fascinates me the most in business.

And this is also what makes AI so interesting to me right now.

By scaling AI systems, we get - unpredictable - emergent properties that, for better or for worse, might affect the evolution of AI.

For instance, prompting, or the ability to change the output of AI models through natural-language instructions, has been an emergent property.

No one coded it into the system; it just came out of scaling these AI models.

Another exciting aspect - I argue - is that prompting does look more like coding than searching or querying.

Indeed, those who compare prompting to search are getting it backward, in my opinion.

Prompting is way more powerful, and over time, it might become something hidden in the user interface rather than shown to final users.

In this context, prompt hacking, or the process of tweaking the natural-language instruction to shape the AI model's output, can be extremely powerful.

That is why I'm adding prompt hacking within the key elements to build an AI moat.

My main argument is that, as codebases become much more commoditized (today, ChatGPT can generate code and also fix bugs in it), prompt hacking might become the core value of the software, as it enables you to improve an AI model's output, even if slightly!

Of course, prompting might still be part of the interface for enterprise-level AI applications, as it allows the enterprise client to customize the input.

Yet, a piece of prompting (prompt hacking) might be hidden in the UI. For consumer applications, prompt hacking might be completely hidden in the interface, giving users standardized options to customize their outputs.

The remaining part of the customization will happen based on the context and interests of the user.
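Here is a minimal sketch of what "prompt hacking hidden in the UI" could look like: the user only picks standardized options, and the application assembles the tuned prompt behind the scenes, adding user context when available. The option names, the instruction strings, and the `HIDDEN_INSTRUCTIONS` text are all hypothetical.

```python
from dataclasses import dataclass

# The hidden, carefully tuned part of the prompt the user never sees (assumption).
HIDDEN_INSTRUCTIONS = "You are a concise writing assistant. Never invent facts."

# Standardized options exposed in the interface, mapped to prompt fragments.
TONES = {
    "friendly": "Write in a warm, conversational tone.",
    "formal": "Write in a formal, professional tone.",
}
LENGTHS = {
    "short": "Answer in at most 3 sentences.",
    "long": "Answer in 2 to 3 paragraphs.",
}


@dataclass(frozen=True)
class UserOptions:
    tone: str = "friendly"
    length: str = "short"


def build_prompt(user_text: str, options: UserOptions, context: str = "") -> str:
    """Turn UI selections plus optional user context into the full hidden prompt."""
    if options.tone not in TONES:
        raise ValueError(f"Unknown tone: {options.tone}")
    if options.length not in LENGTHS:
        raise ValueError(f"Unknown length: {options.length}")
    parts = [HIDDEN_INSTRUCTIONS, TONES[options.tone], LENGTHS[options.length]]
    if context:
        parts.append(f"User context: {context}")
    parts.append(f"User request: {user_text}")
    return "\n".join(parts)
```

For example, `build_prompt("Draft a thank-you note", UserOptions(tone="formal"), context="works in finance")` produces a five-line prompt, while the user only ever saw a tone dropdown and a text box.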

Indeed, with the release of new models like GPT-4o, we're already seeing this transition: the Chain of Thought (CoT) prompting technique enhances the model's reasoning capabilities, so prompting remains highly relevant while becoming far more powerful.

In fact, CoT has proved quite effective in reducing the number of prompts needed to get what you want, and this, I argue, is only the first step toward “agentic AI.”

In this process, foundational models start leveraging Self-Generated CoT.

This means that instead of relying solely on human-crafted examples, GPT-4 can generate its own chain-of-thought examples. This automation allows the model to create reasoning paths without needing extensive human input!
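A rough sketch of the self-generated CoT idea: ask the model to reason step by step on a few questions with known answers, keep only the reasoning chains that land on the right answer, and reuse them as few-shot examples. The `stub_model` function is a hypothetical stand-in for a real LLM call, the `=>` answer delimiter is my own convention, and filtering by known answers is one possible design rather than a description of how any specific vendor does it.

```python
from typing import Callable, List, Tuple

COT_SUFFIX = "Let's think step by step."


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM; returns canned 'reasoning => answer' text."""
    if "2 + 3" in prompt:
        return "Start with 2, add 3 to get 5. => 5"
    if "10 - 4" in prompt:
        return "Start with 10, remove 4 to get 7. => 7"  # deliberately wrong
    return "No idea. => ?"


def self_generate_examples(
    model: Callable[[str], str],
    labeled_questions: List[Tuple[str, str]],
) -> List[Tuple[str, str, str]]:
    """Let the model write its own reasoning; keep only chains with a correct answer."""
    examples = []
    for question, expected in labeled_questions:
        output = model(f"Q: {question}\n{COT_SUFFIX}")
        reasoning, _sep, answer = output.rpartition("=>")
        if answer.strip() == expected:
            examples.append((question, reasoning.strip(), answer.strip()))
    return examples


def build_few_shot_prompt(examples: List[Tuple[str, str, str]], new_question: str) -> str:
    """Prepend the verified reasoning chains to a new question."""
    blocks = [f"Q: {q}\nReasoning: {r}\nA: {a}" for q, r, a in examples]
    blocks.append(f"Q: {new_question}\n{COT_SUFFIX}")
    return "\n\n".join(blocks)
```

With the stub above, the faulty chain for "10 - 4" is discarded and only the "2 + 3" chain ends up in the prompt, with no human writing the reasoning by hand.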

While the process is still embryonic, it does seem promising for moving us toward Agentic AI, as these agents might need to leverage the chain of thought to coordinate reliably, safely, and transparently.

The discovery path toward AI agents that can work reliably at scale will prompt the industry's development over the next few years.

Network effects and fast iteration loops

You might be using apps like Netflix, Uber, Airbnb, LinkedIn, YouTube, TikTok, and so forth, whose value lies in their ability to improve as more users join them.

This is known as network effects.

Would you still jump on YouTube if it did not have such a vast library of content and a discovery engine that keeps recommending interesting and engaging content?

So, just like digital businesses can build their moats via network effects, AI companies can do the same.

There is nothing new here, as companies like Meta, Google, Netflix, TikTok, and many others have been using human interactions combined with AI algorithms to improve their products at scale.

For instance, in 2019, I argued that TikTok was interesting not because it was a new social media app but because it was an AI company leveraging network effects.

At the time, TikTok moved beyond the social network, employing AI algorithms to help users discover content beyond their connections!

That is what made TikTok so sticky...

Likewise, for AI companies to be valuable, a critical component will be leveraging generative AI to create feedback loops that spur user adoption at scale and then capitalizing on these as a moat. For that reason, UX will become a core competitive advantage for these companies.

Yet, here, we’ll see the emergence of AI-ups or AI startups that will be so fast in terms of iterations that they might build valuable companies extremely quickly. They’ll need to figure out a self-sustaining business model to make their advantage sticky!

A Business Workflow combining the whole!

How a company combines all the above to create fast iterative loops to develop, launch, iterate, maintain, and grow AI applications will become its critical moat!

Each AI company working at scale will have its own workflow, which will act as a barrier to entry, opposite to economies of scale.

It will be the equivalent of the economies of scope (with a more effective workflow, AI companies can build more features and bundle various products to create a more comprehensive experience).

Thus making it harder and harder for other companies to replicate!

Creating a competitive advantage, or a "moat," through effective workflows in AI companies involves several critical elements. These elements help companies develop, launch, iterate, maintain, and grow AI applications efficiently, making it difficult for competitors to replicate their success.

Some factors that contribute to building such a moat are (we already covered some above):

Data Advantage: Access to vast and diverse datasets is crucial for training robust AI models. Companies can strengthen their data advantage by forming partnerships, acquiring data from various sources, and implementing effective data collection strategies. Proprietary data can lead to superior performance and insights that competitors cannot easily replicate.

Domain Expertise: Cultivating a highly skilled workforce with deep domain knowledge enables companies to tackle complex AI challenges and drive innovation. This expertise is essential for developing specialized AI solutions that address specific industry needs.

Vertical Integration: AI companies differentiate by offering end-to-end solutions combining hardware, software, and services.

Customer Feedback Loops: Engaging with customers and leveraging their feedback provides valuable insights that can be incorporated into product development processes.

Product Layer Moat: Integrating AI into product workflows to enhance the product's value proposition. The key takeaway here is that AI enhances the product's core value to the point that it defines the whole business model!

Brand and Distribution

This has been true for tech companies of the web era, and it'll be even more relevant for AI companies, which will be an amplified version of tech companies.

In addition, just like distribution played a key role for early tech players (I covered deals like Google-AOL or Apple-AT&T at great length), it will do so for AI players.

Indeed, we've already seen how some key partnerships have developed:

OpenAI/Microsoft

DeepMind/Google

Anthropic/Amazon AWS

Stability AI/Apple

And so on...

The way those partnerships will form will not only be important from a technological standpoint.

They will matter from a distribution standpoint.

Indeed, the paradox of these AI models right now is they work extremely well as general-purpose engines.

Yet, if we were to limit them by adding too many guardrails, they might also lose relevance at specific tasks (for instance, limiting ChatGPT's ability to answer on topics where it can give misleading or factually wrong answers might actually hamper its capabilities!).

Thus, this implies that we might see a different kind of distribution model with these AI companies, where for those models to become great at specific tasks, they will need to be employed first at much broader tasks.

This is a paradigm shift from a distribution standpoint.

Whereas in the past we've seen tech players start as niche and then scale from there (Amazon was an online bookstore, Facebook was a social network for Harvard undergraduates), we might see these AI companies go broad right away and then narrow down their spectrum of applications!

For instance, ChatGPT might be a general-purpose engine right now.

Yet, once OpenAI figures out which applications it is well-suited for, it might also be released for specific verticals.

This broad distribution requires strong partnerships with other large tech players, which can take that burden!

Going back to branding, being recognized as a new category in any space will become a critical moat.

Branding can significantly enhance a company's economic moat by increasing switching costs and providing other competitive advantages. Here are the key elements through which branding contributes to building a moat:

Increased Switching Costs: A strong brand can create high switching costs, making it difficult or inconvenient for customers to move to a competitor. This can be due to emotional attachment, familiarity, or the perceived risk associated with trying a new brand.

Customer Loyalty via Identity: Branding fosters customer loyalty by building trust and emotional connections. Loyal customers are less likely to switch to competitors, reducing churn and ensuring a stable customer base. This happens when you can help the user identify with your product (e.g., “I’m proud to be a Perplexity user, and I would not switch to other tools that do not represent me”)

Price Premium: A well-established brand can command higher prices due to perceived value and quality. Customers are often willing to pay more for a brand they trust, which enhances profitability and market positioning.

Barrier to Entry: Strong brand recognition is a barrier to entry for new competitors. Established brands have a significant advantage, making it challenging for newcomers to convince consumers to switch.

Network Effects Enhancement: A powerful brand can attract more customers, speeding up network effects.

Reduced Marketing Costs: Loyal customers reduce the need for extensive marketing efforts aimed at acquisition.

Valuable Customer Feedback: Loyal customers provide valuable feedback that helps improve products and services. Regular customers rarely provide valuable feedback, since candid feedback requires a huge amount of trust. Only loyal customers will tell you the truth about your product to help it improve! And that happens primarily when you have a strong brand.

Capital Deployment

At the foundational level, this whole new field might require substantial capital to scale, though not to kick off.

It will make building basic (initially) and more advanced (later on) applications much cheaper.

Thus substantially lowering the cost of doing business.

Yet, building powerful foundational AI engines might require massive capital.

It won't be surprising to see companies like OpenAI/Anthropic raise on the order of tens if not hundreds of billions.

Yet, as these players generate buzz around the foundational layer, capital also becomes available in the middle and application layers, thus making it possible for new players to easily access capital to launch a first scalable version of their product!

That's it for now!

It’s all coming together!

This is why this current AI paradigm is so powerful: it enables a confluence of various industries, such as the ones below, to become viable all at once.

Augmented Reality (AR)

AI Impact: Generative AI enhances AR by dynamically generating and updating 3D models, objects, and environments in real-time, improving object recognition and interaction.

Viability: AI makes AR more interactive, context-aware, and adaptive, which opens opportunities in gaming, education, retail (e.g., virtual try-ons), and industrial training.

Scaling: AI drastically reduces the cost and effort to create AR content by automating asset generation and object tracking, enabling mass production and adoption of AR solutions across multiple industries.

General-Purpose Robotics

AI Impact: AI enables robots to autonomously understand and interact with their environments, learn from data, and perform complex tasks across varied industries.

Viability: Robotics can now function in a range of industries (manufacturing, logistics, healthcare) by adapting to different tasks and environments, making them increasingly versatile and deployable.

Scaling: AI eliminates the need for extensive task-specific programming, enabling quicker deployment of robots across industries. This flexibility leads to widespread, scalable robotics applications with lower costs and shorter deployment cycles.

Self-Driving (Autonomous Vehicles)

AI Impact: Generative AI processes vast amounts of sensor data to make real-time decisions, navigate complex environments, and optimize vehicle control.

Viability: Self-driving technology is now viable for use in passenger transport, logistics, and last-mile delivery due to improvements in safety, precision, and decision-making capabilities.

Scaling: AI reduces reliance on human intervention and accelerates the development of autonomous fleets. This helps scale autonomous driving in urban transportation systems, long-haul trucking, and automated deliveries, driving down operational costs over time.

Generative Design and 3D Printing

AI Impact: AI automates the design process by generating optimized structures or products that are tailored to specific parameters, such as strength, weight, or material.

Viability: Generative AI makes rapid prototyping and highly customized product development feasible in industries like aerospace, automotive, and consumer goods.

Scaling: AI shortens design cycles and reduces resource wastage, enabling scalable, on-demand production of goods and mass customization without the need for traditional manufacturing processes.

Synthetic Media (Virtual Influencers, AI-Generated Content)

AI Impact: AI autonomously generates video, audio, and digital characters, allowing for large-scale production of synthetic media for entertainment, marketing, and advertising.

Viability: AI-powered content creation is already being used by virtual influencers, automated ad campaigns, and AI-generated characters in video games and movies.

Scaling: AI enables content creation at a fraction of traditional costs, allowing businesses to scale media production across platforms without relying on large teams of creators, enhancing personalization and volume output.

Drug Discovery & Healthcare

AI Impact: Generative AI accelerates drug design and discovery by simulating molecular interactions, predicting compound efficacy, and streamlining clinical trial designs.

Viability: AI is transforming healthcare by making drug discovery more efficient, with applications in personalized medicine, diagnostics, and therapeutic interventions.

Scaling: AI reduces the time and cost of drug development, allowing for the large-scale production of tailored treatments and therapies. This enables pharmaceutical companies to bring more personalized, effective treatments to market faster and at scale.

Personalized Education

AI Impact: AI generates individualized learning paths, creating adaptive content tailored to each learner's progress and needs.

Viability: AI-powered personalized education platforms are now viable for mass adoption, offering adaptive learning in schools, online platforms, and corporate training environments.

Scaling: AI automates the personalization of learning materials and assessments, making it possible to deliver customized education to millions of students globally, democratizing access to quality education at scale.

To mention a few…

This convergence is generating the next multi-trillion-dollar industry!

Recap: In This Newsletter Issue!

Three Layers of AI Industry:

Foundational Layer: General-purpose engines like GPT, DALL-E.

Middle Layer: Specialized vertical AI engines with data differentiation.

Application Layer: Apps using AI engines with feedback loops and network effects.

AI Moats: AI moats can be built at any layer through data integration, fine-tuning, and prompt engineering, even without operating at the foundational layer. Companies like Perplexity AI are examples of non-foundational players succeeding.

API-Driven Innovation: The slashing of API prices makes it cheap for startups to build on top of foundational models, allowing for impressive features at lower costs. The market could consolidate into an oligopoly over the next five years.

Foundational Players & Infrastructure: To stay ahead, foundational players like OpenAI may need to develop full chip infrastructure and control their supply chain to maintain a moat. This demands capital investments on a massive scale.

Data Moats: AI applications gain value through data integration and curation, enabling customization. Proprietary datasets are key to training AI models effectively for specific tasks.

Emergence of Agentic AI: As AI scales, new emergent properties like Chain of Thought (CoT) prompting will evolve, leading to more autonomous systems. Self-generated CoT examples could move AI closer to autonomous "agentic" systems.

Network Effects & Fast Iteration: Similar to web apps, AI companies can leverage network effects to improve their products at scale. Fast iteration loops, user adoption, and feedback will be critical for AI startups to build sustainable competitive advantages.

Business Workflows as Moats: AI companies can create powerful competitive moats by optimizing workflows that integrate data, domain expertise, vertical integration, and rapid product development.

Brand & Distribution: Branding and strategic partnerships (e.g., OpenAI/Microsoft) will play a crucial role in scaling AI applications. Strong brands will enhance switching costs and enable better distribution, creating long-term advantages.

Future of AI Industry: The foundational AI market could either consolidate or witness foundational players like OpenAI growing into trillion-dollar companies, potentially outgrowing Big Tech through a "Reverse Kronos Effect."

Ciao!

With ♥️ Gennaro, FourWeekMBA